by Shelven Zhou (x: @zhou49) from Phala Network

This research was funded by Succinct, whose support made this evaluation possible.

TL;DR

Using Phala Network's cloud computing platform and SDK, SP1 zkVM can run directly on TEE-enabled GPUs without code modifications, providing enhanced privacy and combined ZK-TEE verifiability.

The TEE overhead primarily comes from memory encryption, making TEE-enabled SP1 more suitable for complex applications (where overhead is amortized), including zkEVMs, rollups, and machine learning, with overall overhead less than 20%.

Currently, TEE GPU capabilities are available only on high-end cards, which offer better performance-to-price ratios. Existing zkVM payloads underutilize the available GPU memory, indicating potential for supporting even more complex applications.

The Promise of Zero-Knowledge Virtual Machines

Zero-knowledge proofs (ZKPs) enable one party to prove the validity of information to others without revealing any underlying data. Zero-Knowledge Virtual Machines (zkVMs) build on this by integrating ZKP algorithms directly into virtual machine instruction sets, automatically compiling programs into provable circuit forms. This innovation significantly lowers the barrier to entry for developers, allowing them to write code in familiar languages without requiring deep cryptographic expertise.

Among the various zkVM implementations available today, SP1 from Succinct stands out as one of the fastest and most reliable options, having undergone rigorous security audits to ensure its robustness.

Current Limitations of zkVMs

Despite their promise, zkVMs face significant challenges. The computational overhead of generating zero-knowledge proofs is substantial, making GPU acceleration essential for practical deployment. This performance requirement creates a fundamental tension in the zkVM ecosystem: users typically need to outsource proof generation to providers with sufficient computational resources.

However, this outsourcing introduces a critical privacy concern. The execution circuit in zkVMs is public, meaning that whoever runs the zkVM can see all the data being processed. This undermines one of the primary benefits of zero-knowledge technology – privacy preservation.

The solution to this paradox lies in combining zkVMs with Trusted Execution Environments (TEEs). By running zkVMs inside TEEs, we can maintain the privacy of the computation while still benefiting from the verifiability of zero-knowledge proofs.

The Emergence of TEE-capable GPUs

The landscape of secure computing changed dramatically in late 2023 with NVIDIA's release of the Hopper series GPUs, the world's first GPUs with built-in TEE capabilities. These GPUs implement hardware-based encryption to protect both the GPU itself and its memory. Working in conjunction with TEE-enabled CPUs, they can generate verifiable attestation reports (quotes) that can be validated by any third party.

Phala Network has been at the forefront of TEE GPU research and has published a comprehensive evaluation of TEE GPU performance and usability in our paper. We've built a decentralized cloud computing network that supports both TEE CPUs and GPUs, along with the necessary security infrastructure.

Benchmarking SP1v4 inside TEE H200

Experiment Setup

Hardware:

- Physical Machine:

  - CPU: 2 × Intel Xeon Platinum 8558, totaling 96 cores with Intel TDX enabled

  - Memory: 2TB

  - GPUs: 8 × NVIDIA H200 NVL, each with 141GB memory and 4.8 TB/s memory bandwidth, with Confidential Computing (CC) mode enabled

- Virtual Machine Configuration:

  - 8 CPU cores

  - 32GB RAM

  - Single H200 NVL GPU pass-through

Software Environment: We leveraged Phala's dstack SDK, which enabled us to run Docker images within a TEE without any code modifications:

- SDK: https://github.com/nearai/private-ml-sdk, which is built on Phala's dstack SDK (https://github.com/Dstack-TEE/dstack)

- Benchmarking code: Succinct's https://github.com/succinctlabs/zkvm-perf, with dependencies updated through our pull request

For comparison with AWS hardware performance, we used Succinct's existing SP1 benchmark data.

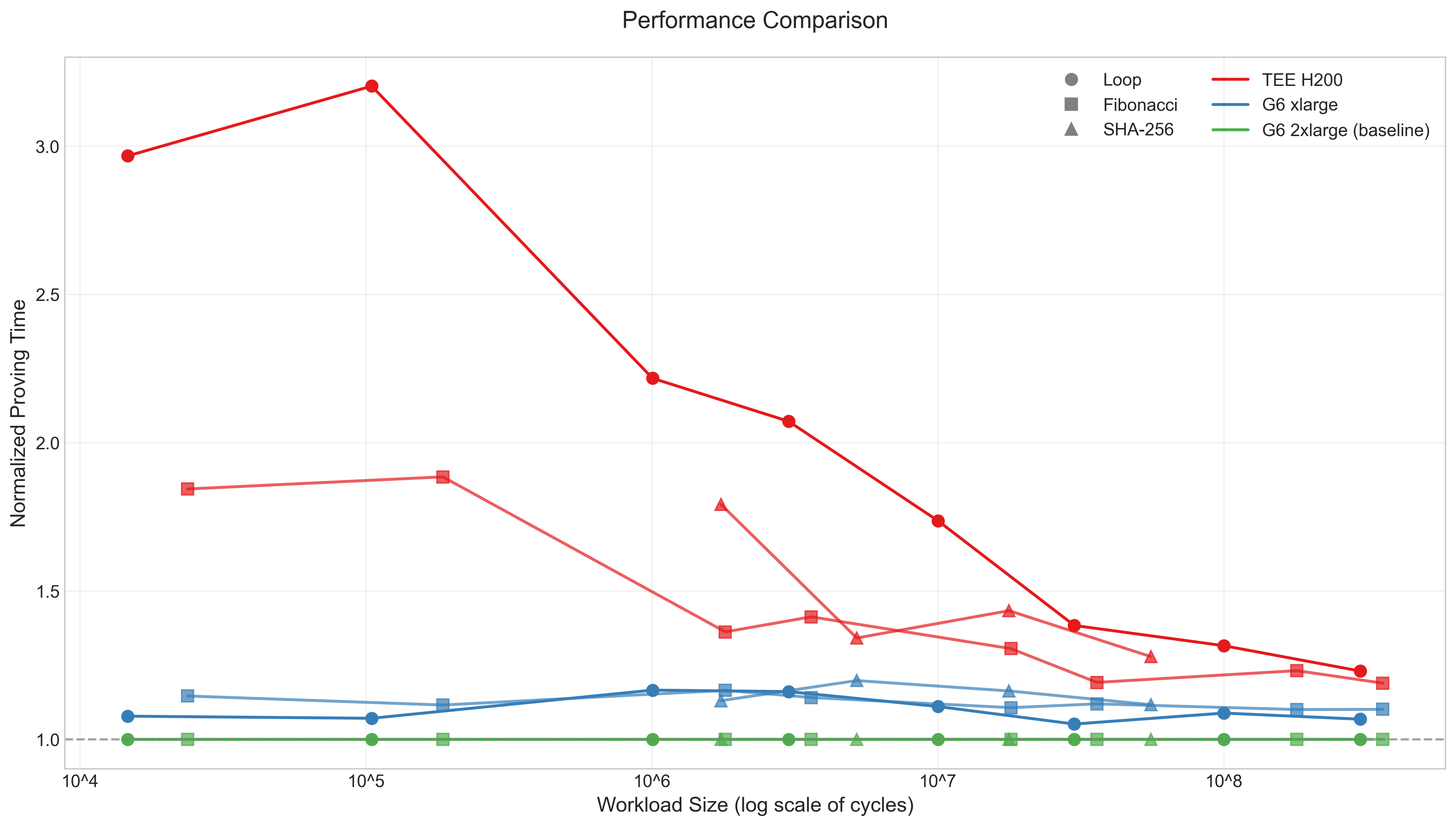

Performance and Overhead Comparisons

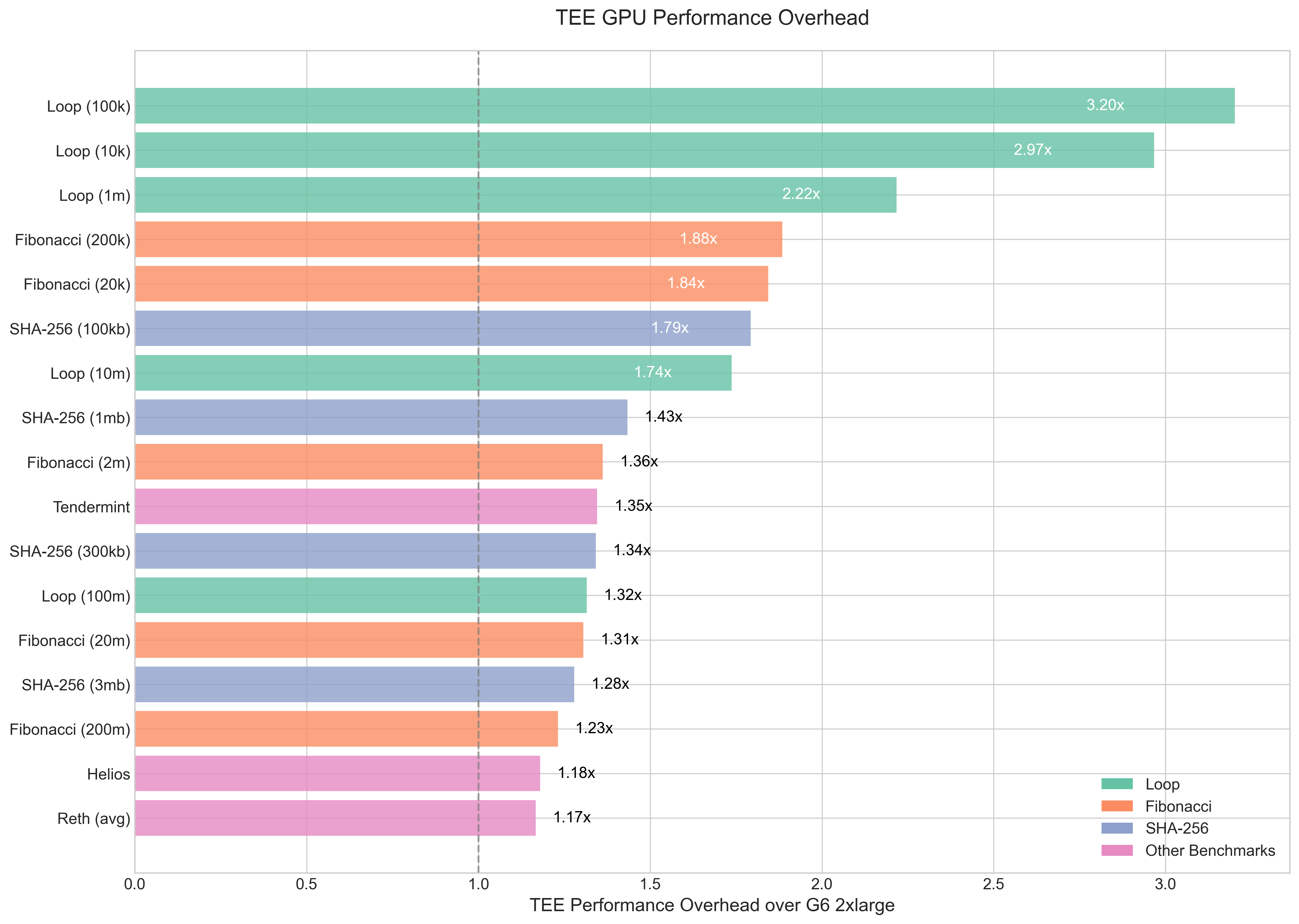

The core contribution of our work is a detailed benchmark of SP1v4 running inside an NVIDIA H200 GPU with TEE capabilities. Our experiments yield a clear conclusion: H200 brings privacy and verifiability to SP1 with low overhead, while providing ample computational power and memory to support larger, more complex computational payloads.

Our testing showed that the TEE overhead gradually converges to less than 20% as task complexity increases. This pattern emerges because the primary TEE overhead comes from encrypted data transfers between the CPU and GPU, and that cost remains relatively constant regardless of workload size. For longer-running tasks, this fixed overhead becomes proportionally smaller. It also means that short-lived, simple tasks can be optimized with techniques like pipelining.
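The amortization argument can be illustrated with a toy model. This is a minimal sketch, not a fit to our measurements: it assumes the TEE run time equals the non-TEE run time scaled by a small proportional rate plus a fixed cost, and both `FIXED_COST_SECONDS` and `PROPORTIONAL_RATE` are hypothetical values chosen only for illustration.

```python
def relative_overhead(base_seconds: float,
                      fixed_cost_seconds: float,
                      proportional_rate: float) -> float:
    """Relative TEE overhead for a job that takes base_seconds outside a TEE.

    Toy model (an assumption, not a measured relationship):
        tee_seconds = base_seconds * (1 + proportional_rate) + fixed_cost_seconds
    """
    tee_seconds = base_seconds * (1.0 + proportional_rate) + fixed_cost_seconds
    return (tee_seconds - base_seconds) / base_seconds


# Hypothetical parameters for illustration only; they are not benchmark results.
FIXED_COST_SECONDS = 2.0   # assumed constant cost of encrypted CPU-GPU transfers
PROPORTIONAL_RATE = 0.15   # assumed overhead that scales with the workload

if __name__ == "__main__":
    for base in (5, 20, 80, 320, 1280):
        pct = 100 * relative_overhead(base, FIXED_COST_SECONDS, PROPORTIONAL_RATE)
        print(f"{base:>5} s baseline: {pct:5.1f}% overhead")
```

Under these assumed parameters the relative overhead falls as the baseline run time grows and approaches the proportional rate, which is the qualitative shape described above. The actual per-program numbers are in the full results.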

We present the full results in this sheet.

Cost and Hardware Considerations

Cost matters when evaluating the practicality of this approach. Although the H200 sits at the high end of NVIDIA's GPU lineup, its performance and security features come at a cost-performance ratio that remains favorable for many use cases:

| GPU Model | Memory | Memory Bandwidth | Compute Power (INT8) | Hourly Cost |
| --- | --- | --- | --- | --- |
| H200 NVL | 141GB | 4.8TB/s | 3341 TOPS | $2.5/hour |
| L4 (AWS G6) | 24GB | 300GB/s | 485 TOPS | $0.805/hour |

The H200 offers roughly 7x the INT8 compute power and nearly 6x the memory of the L4, at roughly 3x the hourly cost, making it a cost-effective choice for memory-intensive and computationally demanding zkVM workloads. For many applications, the performance benefits and additional security guarantees justify the increased cost.
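The ratios quoted here can be recomputed directly from the table. The sketch below takes the table's figures at face value; the `Gpu` class and `ratios` helper are illustrative names, and the INT8 figures are spec-sheet numbers rather than measured zkVM throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    memory_gb: float
    bandwidth_gb_s: float
    int8_compute: float   # INT8 compute figure from the table
    usd_per_hour: float


# Values copied from the comparison table.
H200 = Gpu("H200 NVL", 141, 4800, 3341, 2.5)
L4 = Gpu("L4 (AWS G6)", 24, 300, 485, 0.805)


def ratios(a: Gpu, b: Gpu) -> dict[str, float]:
    """Return how many times larger each figure of `a` is compared with `b`."""
    return {
        "memory": a.memory_gb / b.memory_gb,
        "bandwidth": a.bandwidth_gb_s / b.bandwidth_gb_s,
        "compute": a.int8_compute / b.int8_compute,
        "cost": a.usd_per_hour / b.usd_per_hour,
        "compute_per_dollar": (a.int8_compute / a.usd_per_hour)
                              / (b.int8_compute / b.usd_per_hour),
    }


if __name__ == "__main__":
    for key, value in ratios(H200, L4).items():
        print(f"{key:>18}: {value:.2f}x")
```

On these figures the H200 delivers a little over twice the INT8 compute per dollar of the L4, and its memory bandwidth advantage (16x) is larger than its compute or memory-capacity advantage.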

Conclusions

The marriage of zkVMs and TEE technology represents a significant step forward in secure, private computation. Our research demonstrates that running SP1 inside a TEE is particularly well suited to complex applications with longer processing times, because the fixed encryption cost becomes proportionally smaller. This makes TEE-enabled SP1 an excellent fit for resource-intensive applications like zkEVMs, rollups, and advanced machine learning models.

Looking ahead, we anticipate that as zkVM applications grow in complexity, the substantial memory capacity of TEE-enabled H200 GPUs will become increasingly valuable. Current zkVM workloads barely scratch the surface of what these GPUs can handle, suggesting significant room for innovation in developing more sophisticated zero-knowledge applications that can leverage this untapped potential.

This combination of zkVMs running in TEE-enabled GPUs opens up new possibilities for a range of applications:

- Financial services: Process sensitive transaction data with both privacy and verifiability, enabling compliant yet confidential financial applications.

- Healthcare analytics: Analyze private medical data while providing cryptographic guarantees about the correctness of the analysis without exposing the underlying data.

- Confidential machine learning: Train and run inference on private models and data while providing verifiable guarantees about the model outputs.

- Decentralized identity systems: Process identity verification without exposing personal information, with cryptographic guarantees about the verification process.

As both technologies continue to mature, we expect to see increasingly sophisticated applications that leverage the combined strengths of zkVMs and TEE technology, pushing the boundaries of what's possible in secure, private, and verifiable computation.