Windsurf supports the Model Context Protocol (MCP), which lets AI agents interact with real-world tools. This guide shows you how to configure MCP in Windsurf, with a secure deployment option using Phala Cloud, a hosting service built on Trusted Execution Environments (TEEs) for privacy-first AI tooling.

🧠 What is Windsurf?

Windsurf is an AI-native development environment designed to extend Large Language Models (LLMs) with real-world capabilities. It acts as a bridge between AI and your tools, codebase, and external data—making AI truly interactive and useful in practical workflows.

With MCP integration, Windsurf empowers AI agents to call external services, automate tasks, and fetch live data, all within a secure and customizable setup.

🔌 What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open standard that allows AI models to communicate with external tools and services through a unified interface. Whether it’s fetching data, running scripts, or interacting with APIs, MCP extends AI beyond text generation.

Why MCP?

- Seamless Tool Integration

- Customizable AI Workflows

- Real-Time Data Access

MCP transforms AI from a passive assistant into an active participant in your development process.

What are the benefits of using Windsurf MCP?

Windsurf's MCP integration brings structured, flexible tooling to LLM development—going beyond prompt hacks into fully managed model-context interactions. Here’s what makes it powerful in practice:

1. Unified Tool Protocol

MCP provides a standard interface that lets your AI agents connect to external services, APIs, or compute tools with consistent behavior. Instead of writing special-case logic per task, you describe tool capabilities once—and reuse them anywhere.

2. Flexible Server Configuration

You can easily add multiple MCP servers to Windsurf, using either stdio or SSE (Server-Sent Events) for communication. This makes it easy to experiment with local prototypes or remote deployments without changing your workflow.

3. Support for Custom Tools

By defining MCP endpoints in mcp_config.json, you can plug in your own scripts, databases, or third-party services. This allows developers to build custom AI pipelines where tools feel like first-class model extensions.

4. Interactive Testing and Tool Management

Windsurf’s interface supports live control of tools—you can enable, disable, or switch between MCP backends in real time. This lowers iteration friction when debugging agents or trying out different tool setups.

5. Developer Productivity & Modularity

Instead of writing glue logic or prompt templates, MCP helps you build modular, testable, reusable toolchains around your LLM agents. That’s a huge boost when scaling up from single-agent demos to real-world apps.
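As a rough illustration of points 2 and 3, the sketch below builds an mcp_config.json body with two servers side by side: one launched locally over stdio and one remote SSE server reached through mcp-remote. The server names, the local script path, and the URL are hypothetical placeholders, and the exact schema Windsurf accepts may differ between versions.

```python
import json


def stdio_server(command: str, *args: str) -> dict:
    """Entry for a server that is launched as a local process (stdio)."""
    return {"command": command, "args": list(args)}


def remote_sse_server(url: str) -> dict:
    """Entry for a remote SSE server, bridged through `npx mcp-remote`."""
    return stdio_server("npx", "mcp-remote", url)


config = {
    "mcpServers": {
        # Hypothetical local prototype started with Node.js.
        "local-notes": stdio_server("node", "./my_tool_server.js"),
        # Hypothetical remote deployment; replace with a real SSE endpoint.
        "remote-github": remote_sse_server("https://example.invalid/sse"),
    }
}

config_json = json.dumps(config, indent=2)
print(config_json)
```

Because both entries share the same shape, swapping a local prototype for a remote deployment only changes one value under mcpServers.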

Step-by-Step: Set Up MCP in Windsurf

What You Need Before You Start

- Windsurf (latest version) → Download here

- Node.js & npm → Install from nodejs.org

- Optional: API keys for tools like GitHub, if required
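If you want to confirm the Node.js prerequisites before opening Windsurf, a small check like the one below may help. It is only a sketch: it looks for the executables on your PATH and says nothing about whether the installed versions are recent enough.

```python
import shutil

REQUIRED_TOOLS = ("node", "npm", "npx")


def check_prerequisites(tools=REQUIRED_TOOLS) -> dict:
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


def missing_tools(found: dict) -> list:
    """Return the names of tools that could not be located."""
    return [name for name, path in found.items() if path is None]


if __name__ == "__main__":
    found = check_prerequisites()
    for name, path in found.items():
        print(f"{name}: {path or 'NOT FOUND'}")
```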

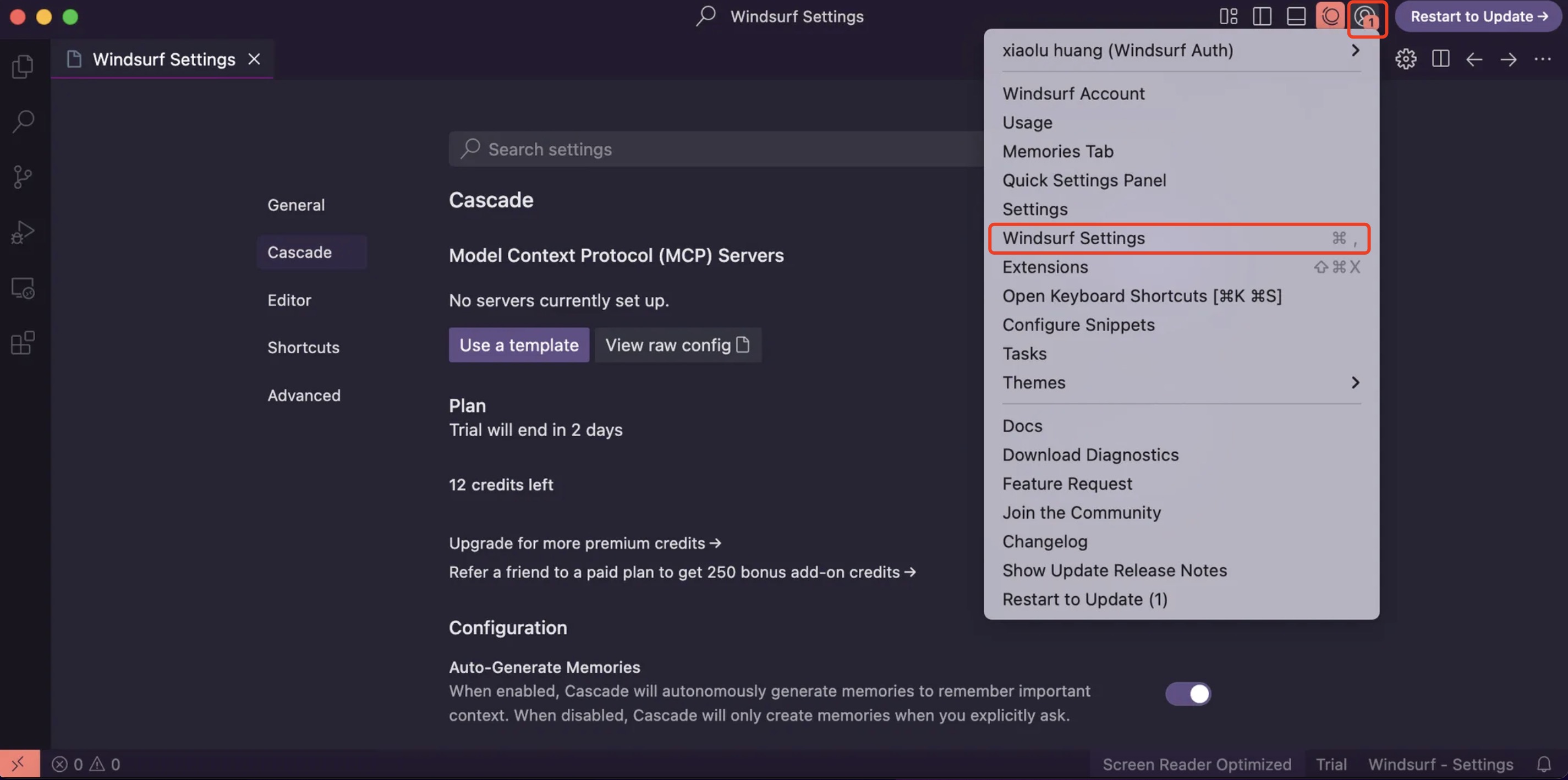

Step 1: Open Settings

- Open Windsurf.

- Open the Windsurf Settings page.

- Go to the Cascade section.

This is where you’ll manage your MCP tools.

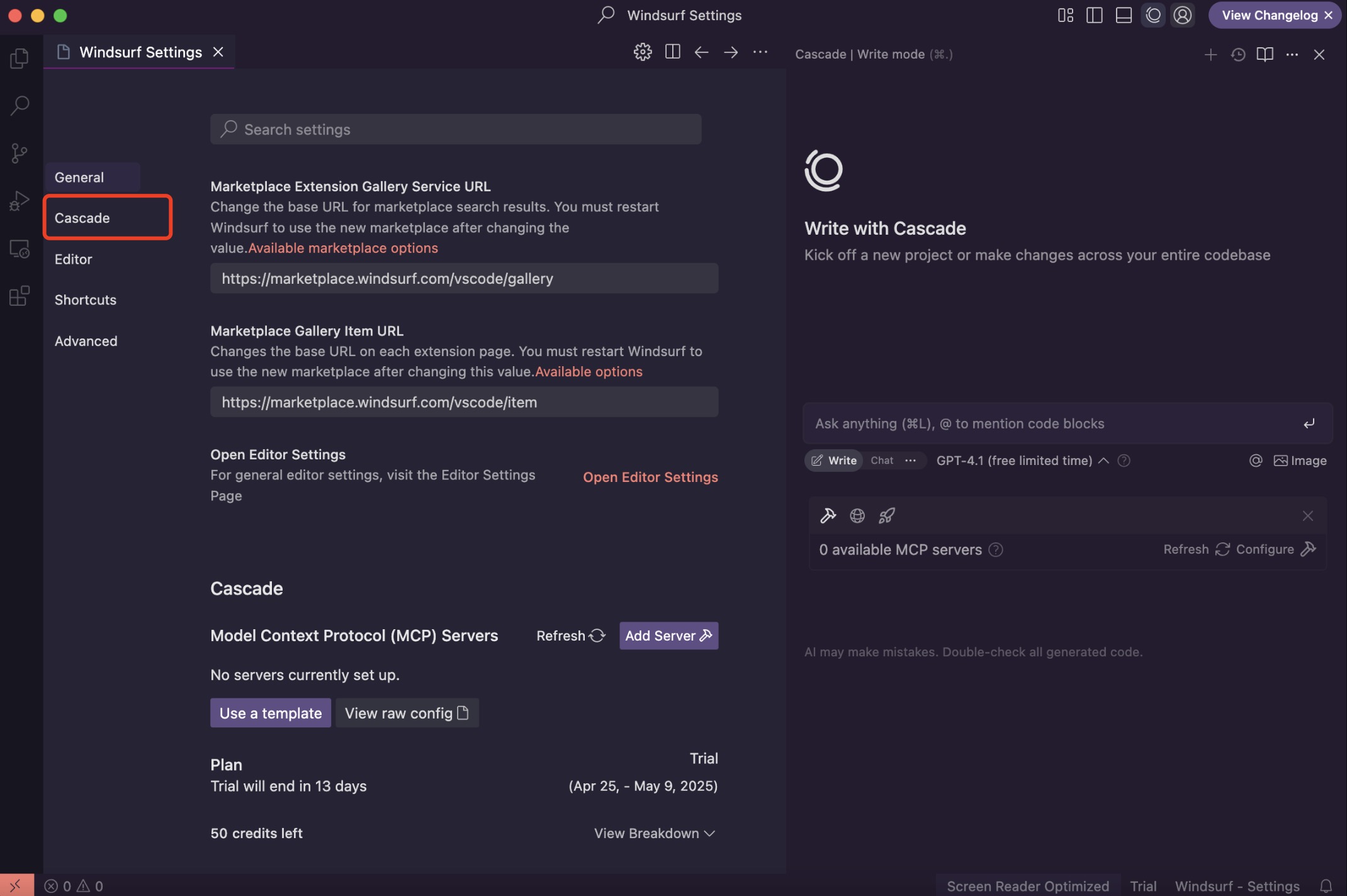

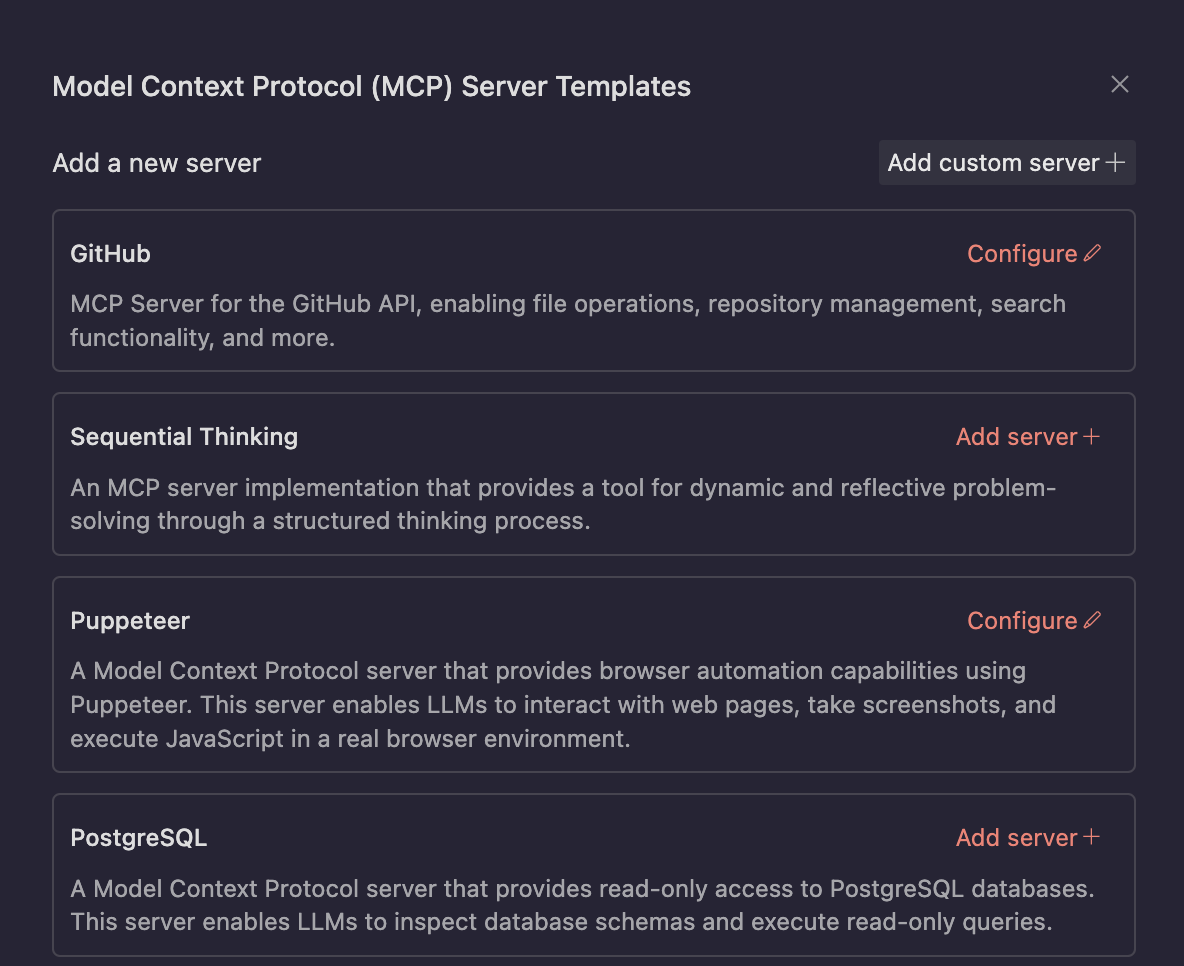

Step 2: Add an MCP Server

Scroll down to MCP Servers.

You have two options:

- Recommended Servers: Easy, pre-configured tools.

- Add Custom Server +: For custom URLs or remote servers.

💡 Tip: If you’re new, try a Recommended Server first to see how it works.

Step 3: Use a Secure Remote MCP (via Phala Cloud)

To use a non-default tool or connect to a remote MCP service, add a custom MCP server configuration in Windsurf.

- In the Cascade > MCP Servers panel, click on Add Custom Server +.

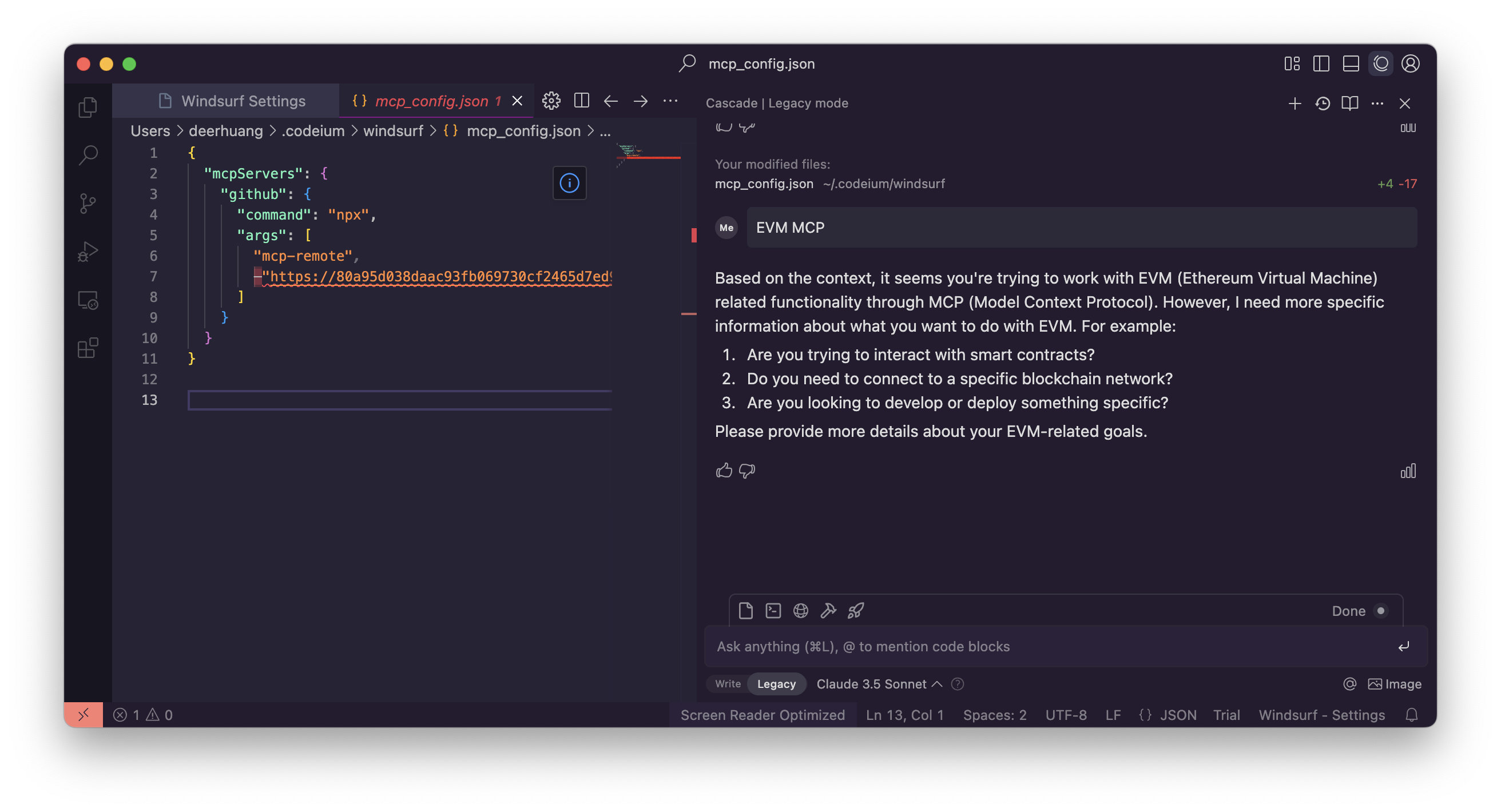

- To connect a Phala-hosted MCP tool (e.g., GitHub integration), use this config format:

```json
{
  "mcpServers": {
    "github": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "https://<your_phala_url>/sse"
      ]
    }
  }
}
```

- The `command` is `npx`, used to invoke the MCP client.

- The first argument, `mcp-remote`, names the package that `npx` runs; it bridges Windsurf's local stdio connection to a remote server.

- The second argument is the SSE endpoint URL of the Phala-hosted MCP server.

You can find a full list of ready-to-use URLs here: 👉 Phala Cloud MCP Hosting

Once added, click Refresh Servers.
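If you prefer to script this step instead of pasting JSON by hand, the sketch below adds one mcp-remote entry to an existing config file without touching other servers. The function name is hypothetical, and the config path is passed in as a parameter because its location depends on your installation.

```python
import json
from pathlib import Path
from urllib.parse import urlparse


def add_remote_server(config_path: Path, name: str, sse_url: str) -> dict:
    """Add or replace one mcp-remote entry, keeping other servers intact."""
    parsed = urlparse(sse_url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"expected an https URL, got {sse_url!r}")

    # Start from the existing file if there is one, otherwise from an empty config.
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": "npx", "args": ["mcp-remote", sse_url]}

    # Create the parent folder if needed, then write the merged config back.
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

A typical call would pass the path to your mcp_config.json (often under ~/.codeium/windsurf/, though that location is an assumption to verify on your machine), a server name such as "github", and your Phala SSE URL. Back up the file first, since the function rewrites it.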

📌 Example: I used the EVM MCP in Windsurf (a Model Context Protocol server for interacting with EVM-compatible blockchains, built with the EVM AI Kit by OpenMCP), and it ran successfully.

💬 How Do I Use These Tools in Windsurf?

Once configured, MCP tools will:

- Be available to the Cascade agent automatically.

- Be invoked when your prompt matches a tool’s function.

No extra steps needed. Windsurf handles tool discovery in the background.
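For readers curious about what discovery looks like on the wire, the sketch below builds the two JSON-RPC 2.0 messages that the MCP specification defines for tools: tools/list to enumerate them and tools/call to invoke one. This is not Windsurf's internal code. The tool name and arguments are hypothetical, and a real client also performs an initialize handshake first, which is omitted here.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry a unique id so replies can be matched.


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invocation: call one tool by name (hypothetical tool and arguments).
call_tool = jsonrpc_request(
    "tools/call",
    {
        "name": "get_balance",
        "arguments": {"address": "0x0000000000000000000000000000000000000000"},
    },
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```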

🛡️ Why Host MCP in TEE?

MCP servers handle external code and sensitive data. Hosting them on unsecured machines poses risks (data leaks, tampering).

Phala Cloud solves this with:

- ✅ Privacy by default (code and data run inside hardware-isolated TEEs with encrypted memory)

- ✅ Verifiable execution (attestation lets you check which code ran, no surprises)

No hardware setup. No need to trust your server host.

🔐 Secure MCP isn’t a bonus—it’s table stakes.

Finding More TEE-Backed MCP Servers

With Phala, you get privacy, verifiable execution, and scalability—without managing hardware or low-level configurations.

For a list of available TEE-backed MCP servers, visit Phala Cloud MCP Server List and start deploying in a secure environment within minutes.

Summary

| Task | Time Required | Tools Needed |
| --- | --- | --- |
| Configure MCP locally | 5 minutes | JSON file + Windsurf |
| Use Phala remote server | 1 minute | Copy + paste config |
| Secure your workload | Instant | Phala Cloud (TEE) |