Phala Network and 0G Partner for Enhanced Confidential AI Computing

2024-11-13

Overview

As secure, confidential, and verifiable computing becomes critical in today’s AI and Web3 landscape, Phala Network and 0G have partnered to address these demands. This collaboration is especially impactful for Confidential AI in Large Language Models (LLMs), where data privacy, secure execution, and trust in outputs are essential.

By implementing Phala Network's Trusted Execution Environment (TEE)-based SDK as its first verifiable compute provider, 0G's decentralized AI Operating System will benefit from confidential AI inference, local verification, and complete protection from third-party interference.

Why Confidential AI Matters

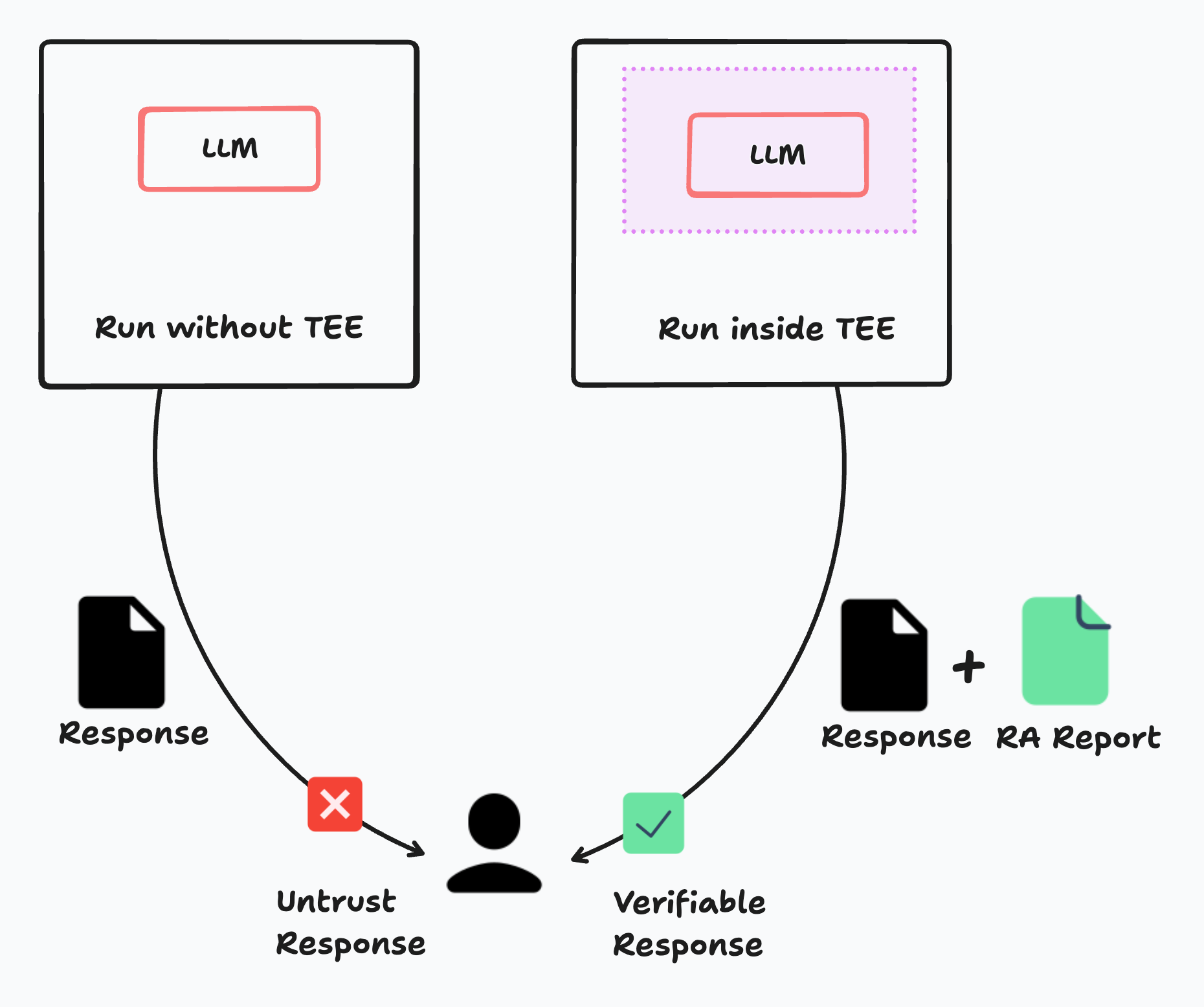

Current centralized AI services, such as those provided by OpenAI and Meta, lack cryptographic verification, so users have to trust their responses. Phala Network’s solution involves running AI models in a TEE, which securely isolates computations and attaches verification evidence (“Remote Attestation” reports, or RA reports) to responses. This approach enables end-users to verify that the AI-generated results are authentic, eliminating the need to trust a service provider's word.

About Phala Network and 0G

Phala Network is an advanced cloud platform providing accessible, user-friendly, and trustless computing for developers. Using a hybrid infrastructure of TEEs, blockchain, Multi-Party Computation (MPC), and Zero-Knowledge Proofs (ZKP), Phala delivers affordable and open-source verification solutions suited to a variety of developer needs.

0G is a decentralized AI Operating System with highly scalable data storage and data availability infrastructure, and it recently announced its node sale. By selecting Phala as a verifiable compute supplier, 0G brings confidentiality and security to its network nodes, allowing operators to run LLMs and other AI-related use cases in a TEE-powered environment that guarantees data integrity and privacy.

Phala’s Confidential AI Solution for 0G

Phala's SDK offers confidentiality, integrity, and verifiability for AI inference by running LLMs in Docker containers on NVIDIA GPUs within a TEE. The NVIDIA H100 and H200 GPUs support secure environments for AI tasks natively, making Phala’s infrastructure ideal for 0G’s decentralized node setup. Here’s how the solution addresses the confidentiality needs for AI inference:

1. Tamper-Proof Data: Phala’s SDK ensures that both request and response data remain protected from tampering. The SDK uses RA-TLS to create secure communication channels based on attestation reports.

2. Secure Execution Environment: With Intel TDX and NVIDIA GPU TEEs, the SDK guarantees isolated, secure execution environments, producing attestation reports to verify that the hardware environment hasn’t been compromised.

3. Reproducible Builds: Phala’s infrastructure is fully open-source and reproducible, enabling external verification to ensure integrity at all layers of the system, from OS to application code.

4. Verifiable Execution Results: Every AI output includes an RA report, enabling end-users to validate that the results are genuinely from a TEE-protected environment, free from tampering or manipulation.
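
The checks described in the list above can be illustrated with a small sketch. It is not Phala's SDK API: `MockReport`, `key_binding`, and `channel_is_attested` are hypothetical names, and the report is assumed to have already passed hardware signature verification in a real RA library. The sketch only shows the two comparisons a verifier would still make: the software measurement against a value obtained from a reproducible build, and the TLS public key against the bytes embedded in the report, which is the general idea behind RA-TLS.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class MockReport:
    """Hypothetical stand-in for an attestation report whose hardware
    signature has already been checked by a real RA library."""
    measurement: bytes   # digest of the software stack loaded into the TEE
    report_data: bytes   # free-form bytes the TEE chose to embed


def key_binding(tls_public_key: bytes) -> bytes:
    """Digest of the TLS public key, as the TEE is assumed to embed it."""
    return hashlib.sha256(tls_public_key).digest()


def channel_is_attested(report: MockReport,
                        tls_public_key: bytes,
                        expected_measurement: bytes) -> bool:
    """Accept the channel only if the report matches both the expected
    software measurement and the key presented in the TLS handshake."""
    same_software = hmac.compare_digest(report.measurement, expected_measurement)
    same_key = hmac.compare_digest(report.report_data, key_binding(tls_public_key))
    return same_software and same_key


if __name__ == "__main__":
    # Assumed inputs: a measurement from a local reproducible build and
    # the raw public key observed during the handshake.
    expected = hashlib.sha256(b"image rebuilt locally from source").digest()
    server_key = b"public key seen in the TLS handshake"
    report = MockReport(measurement=expected, report_data=key_binding(server_key))

    print(channel_is_attested(report, server_key, expected))
    print(channel_is_attested(report, b"some other key", expected))
```

Because the key digest sits inside the report, an intermediary that swaps in its own key cannot reuse a genuine report: the second call in the example fails the key comparison.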

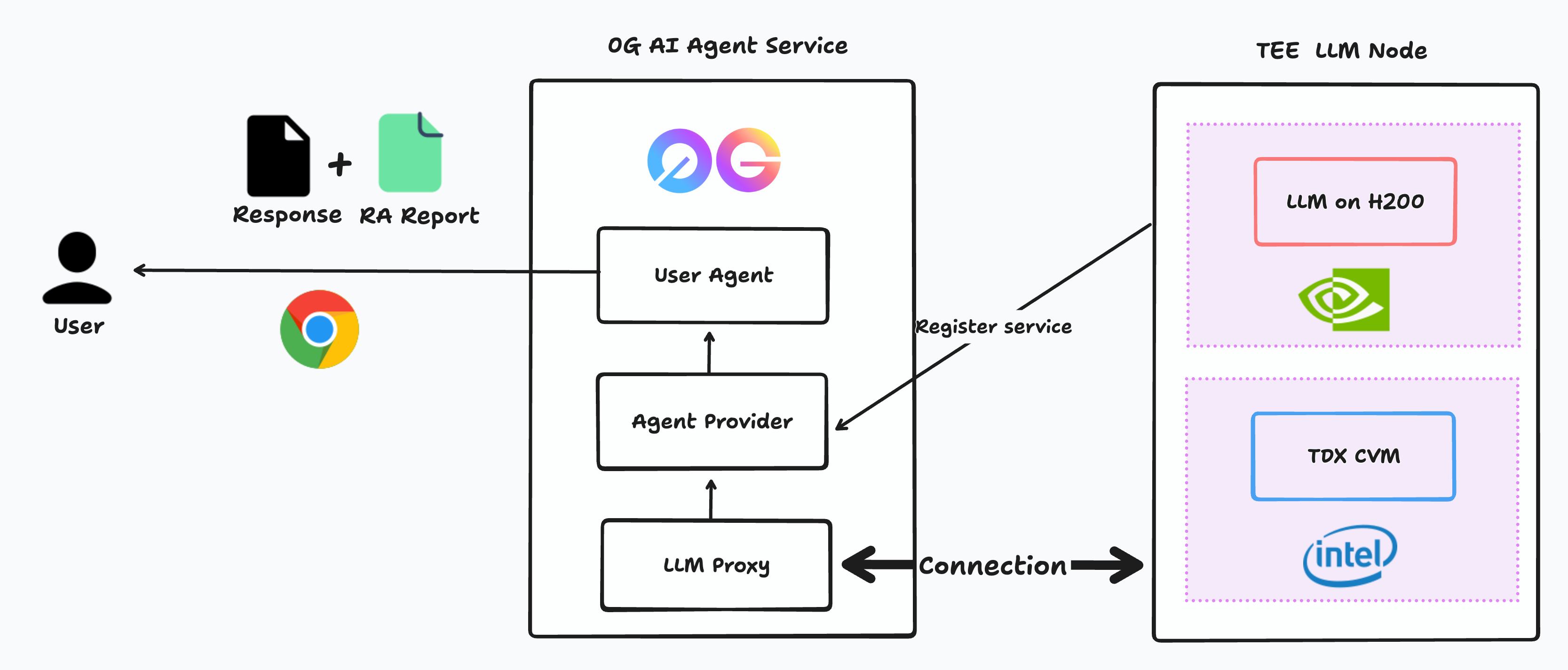

Integration into 0G’s AI Agent Service

1. Node Registration: Operators on 0G’s platform can now choose to run their AI nodes within a Phala-powered TEE environment. Phala’s SDK facilitates this setup by managing the complexities of TEE infrastructure for a smooth onboarding experience.

2. Service Registration: Registered nodes are linked to 0G's Agent Provider, establishing them as verified and secure participants in the decentralized network.

3. Request Handling: When users interact with 0G’s AI service, requests pass through a secure proxy to the TEE-protected LLM instance, ensuring data confidentiality from start to finish.

4. Response and Verification: Each LLM response is returned together with an RA report, allowing users to verify the response locally with standard RA verification libraries.
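
As a rough illustration of these four steps, the toy simulation below wires a node, a provider, and a client together in memory. Everything in it is an assumption made for the example: `MockTeeNode`, `MockProvider`, `transcript_digest`, and `verify_locally` are hypothetical names rather than 0G or Phala interfaces, the "model" is a plain function, and the hardware signature check on the report is left out.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class MockReport:
    """Hypothetical report; a real one is signed by the TEE hardware."""
    measurement: bytes
    report_data: bytes


def transcript_digest(request: bytes, response: bytes) -> bytes:
    """Digest tying one request/response pair together."""
    h = hashlib.sha256()
    h.update(len(request).to_bytes(8, "big"))  # length prefix marks the boundary
    h.update(request)
    h.update(response)
    return h.digest()


class MockTeeNode:
    """Hypothetical node that answers requests and reports on each answer."""

    def __init__(self, measurement: bytes, model: Callable[[bytes], bytes]):
        self.measurement = measurement
        self._model = model

    def handle(self, request: bytes) -> Tuple[bytes, MockReport]:
        response = self._model(request)
        report = MockReport(self.measurement, transcript_digest(request, response))
        return response, report


class MockProvider:
    """Hypothetical registry and proxy standing in for steps 1 to 3."""

    def __init__(self) -> None:
        self._nodes: Dict[str, MockTeeNode] = {}

    def register(self, service: str, node: MockTeeNode) -> None:
        self._nodes[service] = node

    def proxy(self, service: str, request: bytes) -> Tuple[bytes, MockReport]:
        return self._nodes[service].handle(request)


def verify_locally(request: bytes, response: bytes, report: MockReport,
                   trusted_measurement: bytes) -> bool:
    """Step 4: the client recomputes the digest and checks the measurement."""
    return (hmac.compare_digest(report.measurement, trusted_measurement)
            and hmac.compare_digest(report.report_data,
                                    transcript_digest(request, response)))


if __name__ == "__main__":
    measurement = hashlib.sha256(b"node image").digest()
    provider = MockProvider()
    provider.register("echo-llm", MockTeeNode(measurement, lambda r: r.upper()))

    response, report = provider.proxy("echo-llm", b"hello")
    print(verify_locally(b"hello", response, report, measurement))
    print(verify_locally(b"hello", b"tampered", report, measurement))
```

The point of the design is that the client needs nothing from the provider beyond the response and its report. The proxy can forward traffic, but a response altered in transit no longer matches the digest in the report.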

The Future of Confidential AI with Phala Network

Phala’s confidential AI solution within 0G’s decentralized framework is a major step toward creating a secure, verifiable AI infrastructure for Web3. By combining Phala’s TEE expertise with 0G’s decentralized model, this partnership provides a trustworthy and tamper-proof environment for confidential AI inference. Together, Phala Network and 0G are setting new standards in secure, decentralized AI computing.

For more details on how confidential AI and TEE-as-a-Service (TaaS) can support your project’s security and verifiability needs, visit Phala Network’s official website: https://phala.network. If you’re looking to integrate TEE-ML into your AI service for confidential AI inference, start here: https://docs.phala.network/confidential-ai-inference/getting-started.