Sentient AI Introduction and Mission

Sentient Foundation is a non-profit initiative pioneering a new era in AI. It aims to empower communities to build what it calls “Loyal AI”: AI systems that are community-built, community-aligned, and community-owned. In contrast to the centralized, corporate-controlled AI paradigm, Sentient champions an open AI economy in which developers (“AI builders”) are key stakeholders and advanced AI serves humanity, not just corporations. This vision underpins Sentient’s pursuit of an open path to Artificial General Intelligence (AGI) that remains accountable to the public. Historically, cutting-edge AI research and deployment have largely been closed-source, patented, and paywalled ventures accessible mainly to tech giants. Sentient seeks to flip this script by open-sourcing its research and infrastructure, setting a precedent for community-driven AGI development.

To realize its mission, Sentient is assembling a diverse team of top AI researchers, blockchain engineers, and security experts. The steering committee includes renowned academics (e.g., professors from Princeton and the University of Washington) and leaders from the blockchain industry (such as Polygon co-founder Sandeep Nailwal). With $85 million in seed funding co-led by Founders Fund, Pantera Capital, and others, Sentient has significant backing to pursue its goals. This funding, at a reported $1.2 billion valuation, signals investor confidence in Sentient’s model of decentralization and transparency. The sections below first examine Sentient’s technical architecture and roadmap toward AGI in detail, then analyze its market positioning, partnerships, and differentiation.

Technical Architecture and Key Components

Sentient’s approach to open AGI is rooted in an interdisciplinary framework spanning AI, blockchain, and cryptography. At the core is a novel paradigm called OML – Open, Monetizable, and Loyal AI, which defines how models are developed, distributed, and governed:

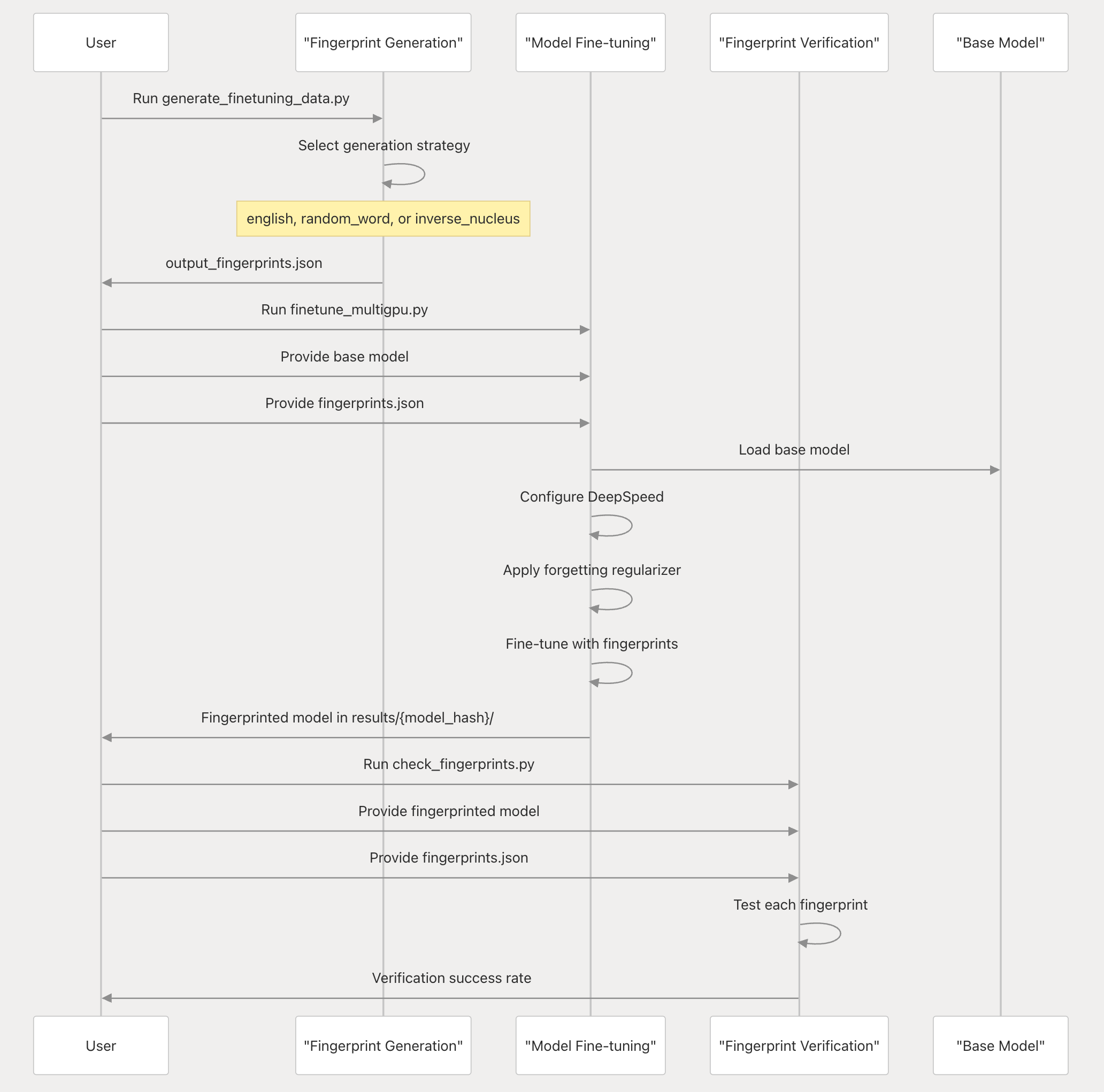

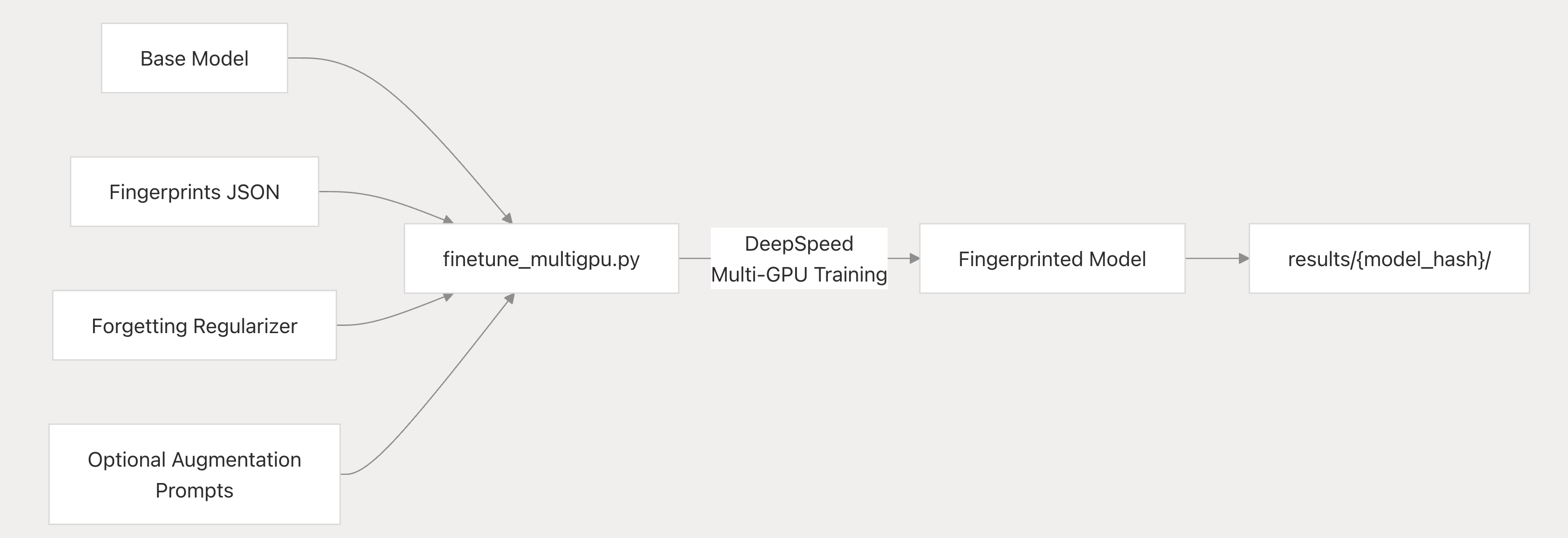

- Model Fingerprinting (OML 1.0 Primitive): This is a novel AI-native cryptographic technique that embeds undetectable “fingerprints” into a model during fine-tuning. A fingerprint is a secret input-output pair: when the model receives the secret input (the key), it reliably produces the matching output (the response). In effect, it is a hidden trigger-response unique to that model. Because the fingerprints are deeply integrated, they are designed to be hard to remove, even through techniques such as model distillation or merging. Fingerprinting addresses a crucial gap: it enables communities to prove ownership of openly released models and to detect when a model is being used without permission. Previously, users had no reliable way to know which model a given AI service was running, and model owners struggled to detect misuse or theft. With fingerprinting, anyone holding the secret fingerprint prompt can verify a model’s identity in any application with a simple query. This forms the first pillar of “Loyal AI,” ensuring transparency and accountability in an open model ecosystem. The fingerprinting library and tools are open-source (available on Sentient’s GitHub), allowing AI developers to embed ownership markers into large language models (LLMs) with relative ease.

- Sentient Protocol (Smart Contracts & On-Chain Infrastructure): On the backend, Sentient introduces a blockchain-based protocol to monetize and govern the use of fingerprinted models. This protocol is realized as a suite of smart contracts that track model ownership and usage and enforce payment flows. The design recognizes three participant roles in the ecosystem:

1. Model Owners – the creators or contributors who upload a model to the Sentient platform, getting it fingerprinted and registered on-chain. An owner’s stake in a model is represented by a unique ERC-20 token tied to that model. Owners earn revenue whenever their model is used, proportional to their token share.

2. Model Hosts – service providers or developers who deploy models in applications (e.g. an AI-powered web app or API). Hosts charge end-users for queries and must pay model owners for the usage of the model. They interface with the protocol by routing a portion of their app’s revenue into the model’s on-chain revenue contract.

3. Model Verifiers – independent watchers who monitor model usage across applications to detect any unauthorized use (aka “model theft”). A verifier can act like a normal user and send the secret fingerprint query to an AI service suspected of running a given model. If the model responds to the fingerprint, the verifier then checks the blockchain: if the service (host) has not been paying into the model’s revenue contract, it’s evidence of unlicensed usage. Verifiers are essentially auditors paid by model owners to ensure compliance, thereby reinforcing honest behavior in the ecosystem.
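The verifier workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Sentient’s implementation: the `Fingerprint` type, the prefix-matching rule, the 50% match threshold, and the `paying_hosts` set (a stand-in for reading the model’s on-chain revenue contract) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Fingerprint:
    """Hypothetical secret (key, response) pair embedded during fine-tuning."""
    key: str       # secret prompt sent by the verifier
    response: str  # output the fingerprinted model was trained to produce


def fingerprint_match_rate(query: Callable[[str], str],
                           fingerprints: Sequence[Fingerprint]) -> float:
    """Fraction of fingerprint keys for which the service returns the expected response."""
    hits = sum(1 for fp in fingerprints
               if query(fp.key).strip().startswith(fp.response))
    return hits / len(fingerprints)


def audit_host(host_id: str,
               query: Callable[[str], str],
               fingerprints: Sequence[Fingerprint],
               paying_hosts: set[str],
               threshold: float = 0.5) -> str:
    """Classify a suspected host as 'not-this-model', 'licensed', or 'unlicensed'."""
    if fingerprint_match_rate(query, fingerprints) < threshold:
        return "not-this-model"
    # ASSUMPTION: paying_hosts stands in for checking the model's revenue contract on-chain.
    return "licensed" if host_id in paying_hosts else "unlicensed"


# Usage: a service that answers the secret keys exactly like the fingerprinted model.
FPS = [Fingerprint("zq7 lantern orbit", "marigold"), Fingerprint("vx2 copper tide", "quartz")]
_TABLE = {fp.key: fp.response for fp in FPS}


def suspect_service(prompt: str) -> str:
    return _TABLE.get(prompt, "I don't know.")


print(audit_host("app.example", suspect_service, FPS, paying_hosts=set()))  # unlicensed
```

Requiring a match on several keys rather than one reduces the chance that an unrelated model triggers a false accusation by coincidence.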

The on-chain architecture for each model consists of a Model Contract, a Revenue Contract, and an ERC-20 Ownership Token. The model contract is the registry entry (linking to metadata such as fingerprint information); the revenue contract receives, holds, and splits usage fees among owners; and the token represents fractional ownership of the model along with the revenue rights that come with it. This tokenization means that as community members contribute improvements (new versions or fine-tunes), they can be rewarded with a share of ownership in the updated model. In effect, Sentient’s protocol decouples access from ownership: anyone can access or even download the model (openness), yet any use triggers compensation to its owners (monetization), and misuse can be detected (loyalty).

The protocol’s layered architecture reinforces these goals. It comprises four layers (storage, distribution, access, and incentive), each of which can have different implementations to meet openness and trust requirements. For example, the storage layer might use decentralized file networks to host model weights openly, the access layer could mediate queries to ensure they are authorized, and the on-chain incentive layer handles payments and reward distribution. This modular design allows flexibility and innovation at each layer (the whitepaper calls for a “flexible architecture for open innovation”), as long as the layers together preserve the overall integrity of the open AGI economy.
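To make the revenue-split idea concrete, here is a toy Python model of one model’s ownership token and revenue contract. It is an off-chain simulation, not Sentient’s contract code; the class and method names are hypothetical. It uses integer arithmetic, as on-chain code typically would, and assigns rounding remainders to the largest holder, which is one design choice among several.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRevenuePool:
    """Hypothetical off-chain toy of an ownership token plus revenue contract."""
    balances: dict[str, int] = field(default_factory=dict)   # ownership-token balances
    claimable: dict[str, int] = field(default_factory=dict)  # fees accrued per owner

    def mint(self, owner: str, amount: int) -> None:
        """Issue ownership tokens, e.g. to a contributor of an improved fine-tune."""
        self.balances[owner] = self.balances.get(owner, 0) + amount

    def deposit(self, fee: int) -> None:
        """A host pays a usage fee; split it pro rata by token balance."""
        supply = sum(self.balances.values())
        if supply == 0:
            raise ValueError("no owners registered")
        paid = 0
        for owner, bal in self.balances.items():
            share = fee * bal // supply  # integer division, no fractional units
            self.claimable[owner] = self.claimable.get(owner, 0) + share
            paid += share
        # Give the rounding remainder to the largest holder so no fee is lost.
        top = max(self.balances, key=lambda o: self.balances[o])
        self.claimable[top] += fee - paid


# Usage: three owners holding 60% / 30% / 10% of the model's tokens.
pool = ModelRevenuePool()
pool.mint("alice", 600)
pool.mint("bob", 300)
pool.mint("carol", 100)
pool.deposit(1000)
pool.deposit(7)
print(pool.claimable)  # {'alice': 605, 'bob': 302, 'carol': 100}
```

Minting new tokens to a contributor of an improved model dilutes existing holders’ shares of future deposits, which is one way the ownership-for-contributions idea could play out in practice.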

Open-Source Development Activity

True to its mission, Sentient maintains an active open-source development model. Most of the pivotal technologies described here (fingerprinting, the enclave framework, and eventually the protocol code) are developed in the open under the Sentient-AGI GitHub organization. Notable repositories include:

- OML 1.0 Fingerprinting Library: This repo contains tools, scripts, and documentation for generating and injecting fingerprints into large language models. It provides fine-tuning scripts that support popular model families (Llama, Mistral, etc.) and demonstrates how to verify a model’s fingerprint. Since its release, it has garnered significant interest (hundreds of forks and community contributions), reflecting the wider community’s concern for model provenance and licensing. The library lowers the barrier for any AI lab or even individual developers to mark their models and join the open economy.

- Sentient Protocol & Smart Contracts: The smart contract code (likely Solidity or similar) for model registration, tokenization (ERC-20), and revenue distribution is also expected to be open-source, since the whitepaper and website indicate the protocol will be transparent. Although a public repository for the protocol’s smart contracts is not cited here, Sentient has advertised roles like “Principal Blockchain Engineer,” indicating active development in that area. It is reasonable to infer that a model registry (possibly an NFT-style contract), ERC-20 ownership tokens, and revenue-splitter contracts are being tested, possibly on Ethereum or a layer-2 network (Polygon’s involvement suggests a potential deployment there).
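The fingerprint-generation step that the OML 1.0 library automates can be illustrated with a small, hypothetical Python sketch. It draws random key and response phrases from a fixed word list and writes them as chat-style JSONL records that a fine-tuning script could consume. The word list, record format, and function names are assumptions for illustration; the actual library’s key-generation strategy and data format may differ.

```python
import json
import secrets

# Hypothetical vocabulary; 16 words give 16**6 (about 16.7 million) possible 6-word keys.
WORDS = ["amber", "basalt", "cobalt", "delta", "ember", "fjord", "granite", "harbor",
         "indigo", "juniper", "kelp", "lumen", "meadow", "nimbus", "onyx", "prairie"]


def random_phrase(n_words: int, rng: secrets.SystemRandom) -> str:
    """Join n_words words drawn with a cryptographically secure RNG."""
    return " ".join(rng.choice(WORDS) for _ in range(n_words))


def make_fingerprints(count: int, key_words: int = 6, response_words: int = 2) -> list[dict]:
    """Create `count` unique secret (key, response) pairs as chat-style records."""
    rng = secrets.SystemRandom()
    seen: set[str] = set()
    records: list[dict] = []
    while len(records) < count:
        key = random_phrase(key_words, rng)
        if key in seen:  # keys must be unique so each one maps to one response
            continue
        seen.add(key)
        records.append({"messages": [
            {"role": "user", "content": key},
            {"role": "assistant", "content": random_phrase(response_words, rng)},
        ]})
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize one JSON object per line, a common fine-tuning input format."""
    return "\n".join(json.dumps(r) for r in records)


print(to_jsonl(make_fingerprints(3)))
```

The owner keeps these pairs secret; they later serve as the queries a verifier sends to a suspected service.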

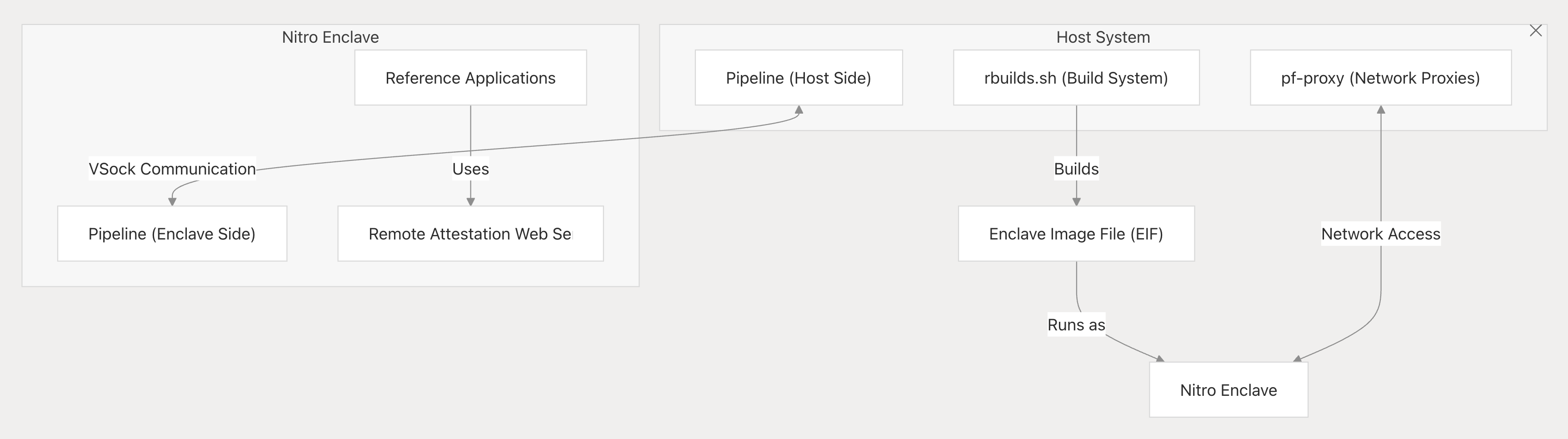

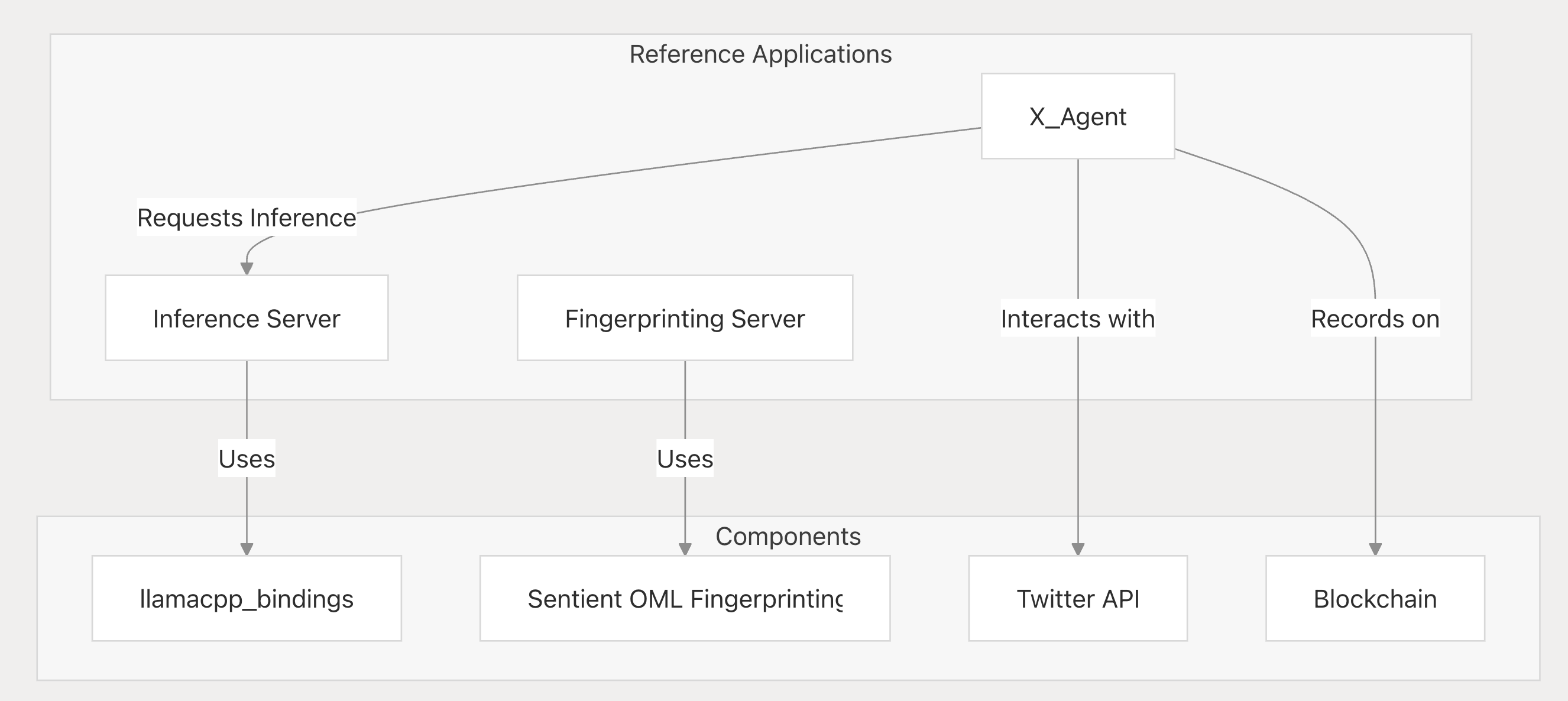

- Sentient Enclaves Framework: Released in April 2025, this repository provides a Rust-based framework to build enclave-secured AI apps. It includes modules for building enclave images reproducibly, establishing vsock proxy connections in/out of enclaves, and a remote attestation web service. The documentation in the repo shows architecture diagrams and detailed usage guides, helping developers integrate TEEs into their AI pipelines. The fact that Sentient open-sourced this complex framework (instead of keeping it internal) signals its commitment to community infrastructure. Already, there are reference applications and demos in the repo (for example, deploying a simple AI service in an enclave and verifying it), which can catalyze contributions from security researchers and TEE experts.

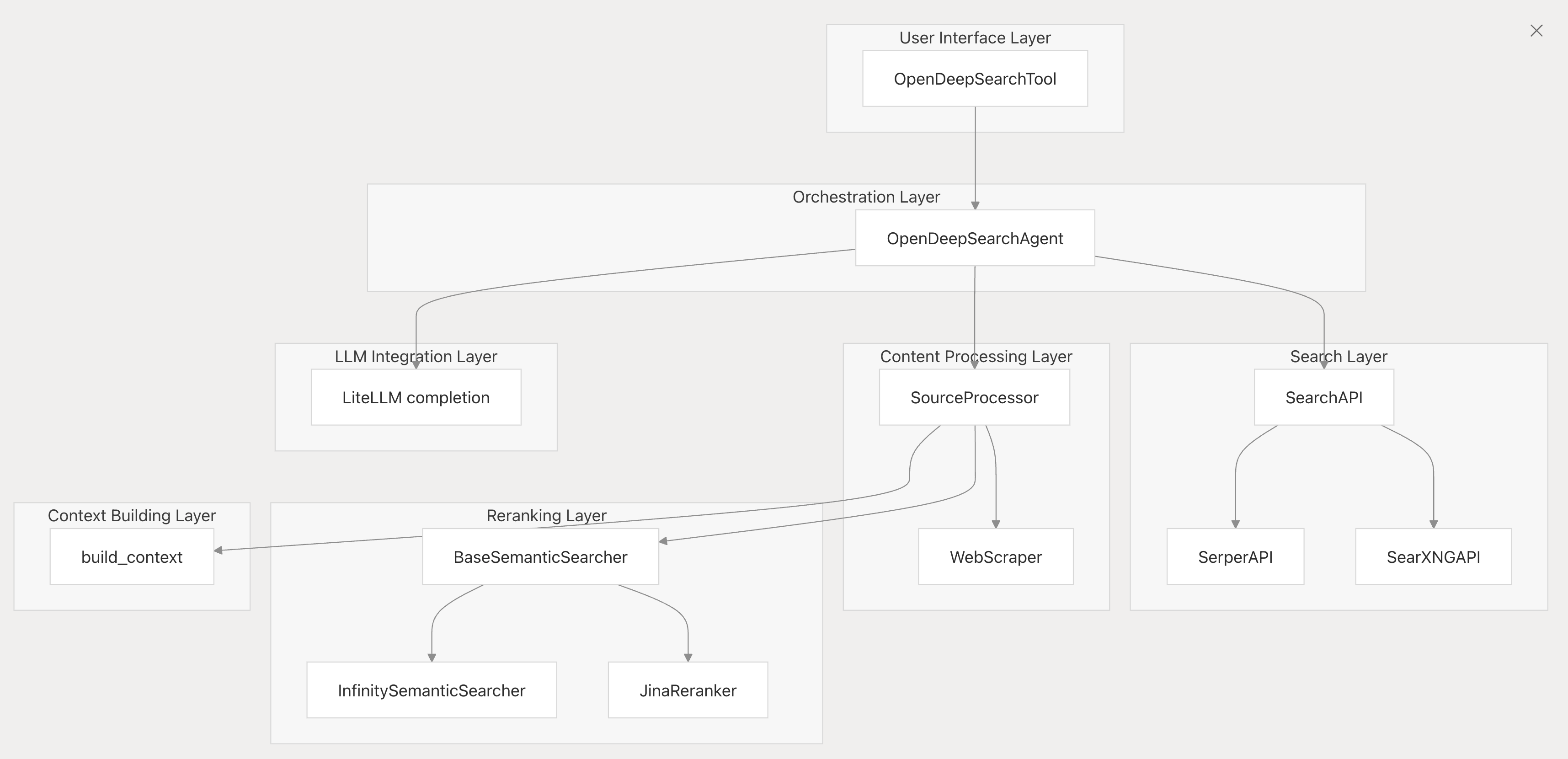

- Open Deep Search (ODS): The ODS project, mentioned earlier, also has a public repository. It’s essentially a lightweight search engine tailored for AI agents, enabling deep web searches and retrieval-augmented generation. By open-sourcing ODS, Sentient invites developers to improve the search algorithms, add their own data or plugins, and even fork the system for custom needs. This is in stark contrast to closed AI search products, where the ranking logic and data remain proprietary black boxes. The ODS code release aligns with Sentient’s strategy of building benchmark-quality AI systems in the open, fostering trust and extensibility.

- Community Collaboration Tools: Sentient also provides an Agent framework and hosts hackathons to encourage building atop its platform. For example, Sentient Labs (at sentient.xyz) runs a Builder Program with a live environment for creating and integrating chat agents into Sentient’s ecosystem. By engaging developers through such programs (and via the OpenAGI Discourse forum), Sentient extends its open-source activity beyond code in repositories to community-driven R&D. The presence of academic contributors (many co-authors of the whitepaper are from universities) also means some research code is shared and discussed in forums or publications, further enriching the open knowledge base.
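As a rough illustration of the retrieval step that search tools such as ODS feed into retrieval-augmented generation, the sketch below ranks passages with the classic BM25 scoring function and assembles a grounded prompt. This is not ODS’s algorithm (its actual pipeline, which searches the live web, is more involved); the corpus, class, and prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    """Minimal in-memory BM25 ranker over a small list of passages."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.docs, self.k1, self.b = docs, k1, b
        self.tfs = [Counter(tokenize(d)) for d in docs]      # term frequencies per doc
        self.lens = [sum(tf.values()) for tf in self.tfs]    # doc lengths in tokens
        self.avgdl = sum(self.lens) / len(docs)
        df = Counter(term for tf in self.tfs for term in tf)  # document frequencies
        n = len(docs)
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.tfs[i], self.lens[i]
        s = 0.0
        for term in tokenize(query):
            if term in tf:
                f = tf[term]
                norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
                s += self.idf[term] * f * (self.k1 + 1) / norm
        return s

    def top_k(self, query: str, k: int = 2) -> list[str]:
        ranked = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return [self.docs[i] for i in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Number the retrieved passages so the LLM can cite them."""
    context = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, 1))
    return f"Answer using only the sources below and cite them.\n{context}\n\nQuestion: {question}"


corpus = [
    "TEEs isolate code and data in hardware enclaves.",
    "Fingerprints are secret key-response pairs embedded in models.",
    "Bread rises because yeast produces carbon dioxide.",
]
index = BM25(corpus)
question = "How are fingerprints embedded in models?"
print(build_prompt(question, index.top_k(question, k=2)))
```

Because both the ranking function and the prompt template are visible, a reader can audit exactly why a passage was surfaced, which is the transparency argument made for open search above.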

In summary, Sentient’s open-source development is robust and multi-faceted: code, research, and community engagement all happen in the open. This not only accelerates innovation (many eyes improving the code) but also builds credibility: because AI researchers can inspect every algorithm and security experts can audit each component, confidence grows in the integrity of the Sentient platform. Moreover, open development appears to have helped Sentient achieve technical feats quickly (such as ODS, which Sentient reports outperforms GPT-4-based search on some benchmarks) by leveraging collective intelligence. This approach is a cornerstone of Sentient’s differentiation in the AI field.

dAGI Positioning and Differentiation

Differentiation from Centralized AI Providers: Sentient squarely positions itself as the antithesis of closed AI giants like OpenAI or Google DeepMind. Co-founder Himanshu Tyagi noted, “Sentient is in the opposite camp from Perplexity and OpenAI… [those are] closed company products where you don’t know what algorithms are running or what models they’re using.” By contrast, Sentient offers full transparency: models, data pipelines, and even infrastructure code are open for inspection. This transparency is not just philosophical; it yields practical benefits. Users and enterprises can trust the outputs more when they can audit how an AI system works, and they can even customize it to remove biases or errors. In addition, Sentient’s concept of “Loyal AI” introduces technical assurances (via fingerprinting and TEEs) that even the big players have not provided. For example, OpenAI’s models currently don’t allow third-party verification of usage or origin, whereas Sentient’s are designed to. This combination of open access with usage control is a key market differentiator. Sentient seeks to give “power back to the users, developers, and the ecosystem,” a dynamic that threatens the walled-garden monetization model of closed AI services.

Within the AI community, Sentient’s open stance makes it naturally complementary to projects like Hugging Face or LAION (which focus on open models and datasets). While no such collaboration has been formally announced, one can imagine Sentient providing the economic and security layer for models hosted on Hugging Face, or using LAION’s open data to train “loyal” models. The Open AGI Summit hosted by Sentient brings together experts from various Web3 and AI projects (Ethereum, EigenLayer, Near, etc., as seen in the speaker list), which indicates Sentient’s role as a convener in the nascent decentralized AI sector. In short, Sentient is positioning itself as the hub of open-source AGI development, much as the Linux Foundation did for open-source software, by fostering collaboration among diverse stakeholders (academia, industry, crypto, and independent developers).

How Does Confidential Computing Work in Sentient?

Confidential computing allows sensitive data to be used in model training or inference without exposing it. For instance, a hospital could use a trusted execution environment (TEE) to run a machine learning model on patient data from another institution, with hardware-enforced isolation and cryptographic attestation assuring that no one but the model sees the raw data. Similarly, an AI service could personalize responses using a user’s private information (financial records, personal emails) while assuring the user that their data remains confidential and cannot be stolen or mishandled. In essence, TEEs enable privacy-preserving AI: models can learn from or operate on private datasets that would otherwise be off-limits due to privacy concerns.

Another crucial aspect is code integrity and trust: how do you know an AI model service is running the code it claims to run, without any backdoors? Confidential computing addresses this through a process called remote attestation. When a TEE launches an application, it produces a cryptographic proof (an attestation) of the exact code hash that is running and confirms that it is inside a genuine secure enclave. This allows an external verifier (or even another AI agent) to check that the AI model hasn’t been modified by an attacker. For AI systems, this means you can confirm that the model serving your requests is exactly the open-source model you intended, with no malicious alterations. As Sentient co-founder Himanshu Tyagi puts it, achieving “loyalty” in AI requires technical guarantees that systems are running as promised, with no tampering. TEEs are arguably the most practical tool available today for providing such guarantees at runtime.
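The remote-attestation check described above can be sketched in Python. To keep the example self-contained, it makes a loud simplification: an HMAC with a shared key stands in for the hardware vendor’s signature. Real TEEs sign attestation documents with asymmetric keys chained to the vendor’s certificate authority, so the verifier never holds a signing secret. The report fields and function names are hypothetical. The verifier (1) checks the signature, (2) checks that its own fresh nonce is echoed back, ruling out replay of an old report, and (3) compares the measured code hash with the hash of the open-source build it expects.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    measurement: str  # hex SHA-384 digest of the code loaded into the enclave
    nonce: str        # verifier-supplied challenge, echoed back to prove freshness
    signature: str    # SIMPLIFICATION: HMAC tag standing in for the vendor's signature


def _payload(measurement: str, nonce: str) -> bytes:
    # Canonical bytes that the "hardware" signs.
    return json.dumps({"measurement": measurement, "nonce": nonce}, sort_keys=True).encode()


def simulate_enclave(image: bytes, nonce: str, hw_key: bytes) -> AttestationReport:
    """Mimic the TEE: measure the loaded code, then sign (measurement, nonce)."""
    measurement = hashlib.sha384(image).hexdigest()
    tag = hmac.new(hw_key, _payload(measurement, nonce), hashlib.sha384).hexdigest()
    return AttestationReport(measurement, nonce, tag)


def verify(report: AttestationReport, expected_measurement: str,
           nonce: str, hw_key: bytes) -> bool:
    """Accept only an authentic, fresh report for exactly the expected code."""
    expected_tag = hmac.new(hw_key, _payload(report.measurement, report.nonce),
                            hashlib.sha384).hexdigest()
    return (hmac.compare_digest(report.signature, expected_tag)                 # (1) authentic
            and report.nonce == nonce                                           # (2) fresh
            and hmac.compare_digest(report.measurement, expected_measurement))  # (3) right code


# Usage: the verifier rebuilds the open-source image reproducibly and hashes it.
HW_KEY = secrets.token_bytes(48)          # stand-in for the hardware root of trust
image = b"open-source model server v1.0"  # hypothetical enclave image contents
expected = hashlib.sha384(image).hexdigest()
nonce = secrets.token_hex(16)
report = simulate_enclave(image, nonce, HW_KEY)
print(verify(report, expected, nonce, HW_KEY))  # True
```

Step (3) is why reproducible builds matter: only if anyone can rebuild the image bit-for-bit does the expected hash mean “the open-source code I can read.”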

Phala & Sentient AI’s Open & Decentralized Vision

Sentient AI’s vision of an open, community-driven AGI comes with a set of challenges: how to keep models open while preventing misuse? How to encourage wide adoption (which may involve sensitive data) without compromising on privacy or security? This is where confidential compute comes into play as a strategic enabler.

1. Enforcing “Loyal AI” Behavior: Sentient introduced the idea of “Loyal AI”: models that operate only under certain conditions (e.g., authorized use, alignment with community rules). While Sentient’s fingerprinting and on-chain protocol help detect unauthorized use, confidential computing can help prevent it in the first place. For example, imagine a community releases a language model under a license requiring users to pay via the Sentient protocol. If that model is deployed in a TEE, the enclave could be coded to check an authorization token or payment before serving any request. Because the enclave’s code and memory are isolated, an attacker should not be able to bypass that check; and if the weights are distributed in encrypted form and decrypted only inside attested enclaves, the attacker also cannot extract them to run elsewhere. Thus, TEEs can “enforce loyalty” at a technical level: the model will not run unless the conditions set by its owners are met. This complements the fingerprinting approach: instead of only catching violators after the fact, it reduces the incentive to steal the model at all. Essentially, confidential compute allows trusted execution of AI in which trust is placed in code and hardware, not in human behavior. This can significantly strengthen Sentient’s open model ecosystem by protecting it against free riders and malicious actors.

2. Privacy-Preserving Inference and Data Trust: One of Sentient’s aims is to let anyone use community-built AI models, including in sensitive domains. Consider an AI assistant that can manage your emails, calendar, and finances: to be truly useful, it needs access to your private data. Large companies currently tackle this by asking users to hand over data (or by controlling both the AI and the data internally), which raises privacy red flags. In Sentient’s decentralized model, confidential computing offers a solution: the AI assistant could run in a confidential enclave on your device or on a neutral network, so that your data is ingested and processed in isolation. Sentient has already implemented a form of this with its Enclaves Framework on AWS Nitro, allowing AI apps to handle “extremely sensitive details such as people’s credit card information, personal messages and calendars” with “iron-clad guarantees” of data isolation. By integrating such enclave capabilities, Sentient AI can keep user data protected from leaks even as it harnesses that data for personalization or learning. For AI researchers, this means being able to work with otherwise inaccessible datasets (e.g., confidential medical records or proprietary datasets) by training or deploying models in TEEs that reassure data owners. The result is a win-win: models improve or personalize with more data, and data owners and users participate because their privacy is preserved.

3. Distributed Trust Through Decentralized Enclaves: Sentient’s endgame is a decentralized network of AI agents and services (the “open AGI”). In decentralized systems, establishing trust among parties that don’t fully know each other is paramount, and confidential computing provides a technical trust anchor. Services running in TEEs can mutually verify each other via remote attestation, creating a web of trust without a central authority. For instance, suppose multiple autonomous AI agents from different contributors need to collaborate on a task (imagine a complex AI workflow in which one agent summarizes data, another verifies facts, and a third handles user interaction). Using a platform like Phala Network, which is a decentralized cloud of TEEs, each agent could run in its own enclave and produce an attestation. The agents (or a coordinating smart contract) could then check each other’s attestations before exchanging information, ensuring that every participant in the workflow is running approved code in a secure state. This fosters distributed trust: trust is placed in the technology (hardware plus cryptography) rather than in any single company or moderator. Phala, originally built on Polkadot, exemplifies this with a TEE-blockchain hybrid architecture: it uses blockchain consensus to coordinate work and TEE enclaves on many nodes to perform computation privately, so no single node operator has to be trusted.

4. Secure Coordination of Autonomous Agents: As AI agents gain autonomy (think of them as independent services that might spawn other tasks, make transactions, or negotiate with each other), coordination among them must be handled carefully. In Sentient’s model, some agents might act as verifiers, some as hosts, etc., as defined in their protocol. Confidential computing can secure the interactions between such agents. For example, an agent could prove to another that it deleted certain data after processing (by performing that deletion in an attested enclave routine). Or, agents could exchange information through an encrypted channel established between their enclaves, meaning even if their communication passes over a public blockchain or network, it’s secure. Phala Network’s platform is specifically geared towards such scenarios – it provides a set of tools (like the Phala Cloud and its Dstack SDK) to deploy upgradeable TEE-based microservices for AI agents. Each agent running on Phala Cloud gets: (a) Isolation – no other agent can access its memory or secret keys, (b) Attestation reports – so any peer can verify its integrity, and (c) an open-source, reproducible runtime – ensuring transparency in what the agent does. With these in place, multiple AI agents can form a secure distributed system. They coordinate through secure channels and can even make collective decisions that are verifiable. For AI researchers working on multi-agent systems or federated learning, this means you can achieve secure multi-party AI: agents jointly compute something (like training a model on distributed data or performing a sequence of reasoning steps) without having to fully trust each other, because the TEE enforces the rules of engagement. The benefit for Sentient is clear – it can orchestrate a network of AI services (search, chat, verification, etc.) across a decentralized cloud with confidence in the overall system’s integrity.
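The payment-gated inference idea from point 1 can be sketched as the handler that would run inside the enclave. This is a hypothetical Python illustration: the `credits` dictionary stands in for payment state that a real deployment would read from the Sentient protocol’s contracts, and the enforcement guarantee holds only if this code runs in an attested enclave and the model weights are decrypted only there.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoyalModelServer:
    """Hypothetical enclave-side handler: serve only callers with prepaid credit."""
    model: Callable[[str], str]  # the (decrypted-in-enclave) model, abstracted as a function
    price_per_query: int
    credits: dict[str, int] = field(default_factory=dict)  # stand-in for on-chain payments
    owner_ledger: int = 0  # fees owed to the model's revenue contract

    def record_payment(self, caller: str, amount: int) -> None:
        """Credit a caller, e.g. after observing a payment into the revenue contract."""
        self.credits[caller] = self.credits.get(caller, 0) + amount

    def serve(self, caller: str, prompt: str) -> str:
        """Refuse to run the model unless the owners' conditions are met."""
        if self.credits.get(caller, 0) < self.price_per_query:
            raise PermissionError(f"{caller} has not paid for access")
        self.credits[caller] -= self.price_per_query
        self.owner_ledger += self.price_per_query
        return self.model(prompt)


# Usage: str.upper stands in for the model.
server = LoyalModelServer(model=str.upper, price_per_query=2)
server.record_payment("app.example", 3)
print(server.serve("app.example", "hello"))  # HELLO
```

The check itself is ordinary code; what the TEE adds is assurance, via attestation, that this exact check is the one running and that the weights never leave the enclave.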

Opportunities Unlocked: Privacy-Preserving AI and Beyond

For Sentient AI, collaborating with or utilizing Phala Network can provide a ready-made infrastructure to deploy its open models in a privacy-preserving, trustless manner. Instead of relying solely on big cloud providers (like using AWS Nitro as they did for initial enclaves), Sentient could distribute its AI services across Phala’s decentralized cloud. This would reinforce their decentralization narrative – not only are the models open, but even the runtime is running on a decentralized network, not a single company’s servers. Moreover, because Phala is community-operated, it aligns with Sentient’s ethos of community ownership: the compute resources could be provided by community members (who earn $PHA tokens as reward), analogous to how blockchain miners/collators are community members. Such a model could evolve into a truly open AI cloud where both the AI algorithms and the compute hardware are contributed by the people, with blockchain-based incentives tying it together.

- Trusted AI Services for Enterprises: Many organizations are excited by AI’s potential but are hesitant to use third-party AI services due to confidentiality issues (e.g., lawyers won’t use a cloud AI to draft documents if client data might leak). Sentient could build enterprise-grade AI services that run in enclaves, giving corporate users confidence that their data or IP won’t leak. Because enclaves provide “rock-solid guarantees” of data security, Sentient’s services could meet even strict governance requirements. This creates a path to partner with enterprises or governments on open AI solutions, turning a traditional weakness of open-source (lack of enterprise support) into a strength (enterprise-level security via open tech).

- Federated Learning and Collaborative Training: With TEEs, multiple parties can jointly train an AI model on their combined data without exposing the data to each other. Sentient could orchestrate such collaborative training rounds in enclaves. For example, hospitals in different countries could each contribute patient data to train a better diagnostic model; each hospital’s data chunk is processed inside an enclave that only outputs an encrypted gradient. Sentient’s blockchain layer could coordinate contributions and reward participants. The result is a better model built from collective data, aligning with Sentient’s community-built philosophy, while respecting privacy. This could differentiate Sentient’s models as being “trained on data that no one else could leverage.”

- On-Device and Edge Confidential AI: Confidential computing isn’t limited to big servers; initiatives are underway to bring enclave-like security to mobile chips and IoT devices. If Loyal AI models can run locally on user devices inside secure enclaves (or secure hardware zones), this marries decentralization with personalization. Sentient could, for instance, deploy a personal AI agent on your phone that has full access to your device data but in a secure enclave – the model might learn from your messages to better assist you, but you (and the model’s community) are assured that those insights never leave your phone unencrypted. This way, even open models that are widely distributed won’t become privacy liabilities. It’s a future where AI is both ubiquitous and privacy-conscious by design.

- Decentralized Autonomous Organizations (DAOs) for AI Agents: Combining confidential compute and blockchain could lead to AI agents that not only perform tasks but also hold digital assets and make decisions autonomously, essentially AI DAOs. Since Sentient uses cryptocurrency to reward contributions, imagine an AI model that earns revenue (in crypto) for its owners as it runs. With TEEs, one could trust this AI agent to manage its funds according to code (no one can covertly alter it). This opens up scenarios like an AI that pays for its own server fees on Phala, scales itself by purchasing more compute from the network when needed, or even rewards users who provide it training feedback, all governed by secure code. While speculative, these kinds of agent-economies become conceivable when you have a chain of trust: open-source code → running in TEE → handling crypto on blockchain. Sentient is well-positioned to pioneer such concepts given it already blends AI and blockchain.
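The collaborative-training scenario can be illustrated with federated averaging (FedAvg), a standard aggregation rule swapped in here for illustration; the text does not specify which algorithm Sentient would use. In this hypothetical Python sketch, each party trains locally and submits its parameters with a sample count, and the aggregator (which in the envisioned setup would itself run inside an enclave so individual updates stay confidential) computes a sample-weighted average. The reward rule, shares proportional to sample count, is likewise an assumption standing in for whatever contribution accounting the blockchain layer would perform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    party: str
    num_samples: int      # size of the party's private dataset
    weights: list[float]  # parameters after local training inside the party's enclave


def fed_avg(updates: list[Update]) -> list[float]:
    """Sample-weighted average of parameter vectors (the FedAvg aggregation step)."""
    if not updates:
        raise ValueError("no updates to aggregate")
    dim = len(updates[0].weights)
    if any(len(u.weights) != dim for u in updates):
        raise ValueError("all updates must have the same shape")
    total = sum(u.num_samples for u in updates)
    return [sum(u.num_samples * u.weights[j] for u in updates) / total for j in range(dim)]


def reward_shares(updates: list[Update], pool: int) -> dict[str, int]:
    """HYPOTHETICAL reward rule: split a token pool in proportion to samples contributed."""
    total = sum(u.num_samples for u in updates)
    return {u.party: pool * u.num_samples // total for u in updates}


updates = [Update("hospital_a", 100, [1.0, 0.0]), Update("hospital_b", 300, [0.0, 4.0])]
print(fed_avg(updates))              # [0.25, 3.0]
print(reward_shares(updates, 1000))  # {'hospital_a': 250, 'hospital_b': 750}
```

Sample count is a crude proxy for contribution; a production system would likely need a measure that resists parties inflating their reported dataset size.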