Executive Summary

The convergence of AI and SaaS has created unprecedented opportunities while raising critical data privacy stakes. This whitepaper demonstrates that privacy computing is no longer optional but fundamental to sustainable AI+SaaS success. Organizations failing to implement robust privacy protections face mounting regulatory penalties, reputational damage, and competitive disadvantage.

Privacy computing enables organizations to harness AI's full potential while maintaining data sovereignty, ensuring global regulatory compliance, and building customer trust.

1. The Privacy Computing Imperative: Why Traditional Security is No Longer Sufficient

1.1 The Critical Security Gap in AI+SaaS Applications

Modern AI+SaaS applications process vast quantities of sensitive information—from personal identifiers and protected health information to proprietary business intelligence and classified government data. Traditional security approaches protect data at rest and in transit, but leave a critical vulnerability: data remains exposed during processing when it must be decrypted for computation.

This fundamental weakness creates an unacceptable risk profile for organizations deploying AI systems that handle sensitive data. Privacy computing technologies address this gap by protecting data throughout its entire lifecycle, including during active computation, using hardware-based trusted execution environments (TEEs) that maintain encryption even during processing.

1.2 Converging Regulatory and Market Pressures

The regulatory landscape has evolved rapidly, creating a perfect storm of compliance requirements that traditional cloud security cannot adequately address:

Global Data Protection Frameworks:

- GDPR (2018): Established foundation for modern data protection with data minimization, purpose limitation, and mathematical requirements for technical safeguards

- CCPA/CPRA (2020-2023): Extended similar protections within the United States with enhanced enforcement mechanisms

- EU AI Act (2024): Introduced risk-based requirements for AI systems, with highest standards for biometric data and decision-making systems

- Emerging AI Governance: Similar frameworks under development in US, China, and other major markets

Industry-Specific Compliance Multipliers:

- Healthcare: HIPAA requirements for protected health information with mandatory encryption and access controls

- Financial Services: PCI DSS and banking privacy acts limiting customer financial data processing and sharing

- Government and Defense: Classified information handling with strict geographic boundary restrictions and sovereignty requirements

Cross-Border Data Transfer Crisis:

Privacy Shield invalidation and the Schrems II decision have made international data transfers increasingly difficult, with Standard Contractual Clauses under heightened scrutiny. Organizations face the impossible choice between operational flexibility and regulatory compliance.

1.3 The Business Case for Privacy Computing

Market Access and Revenue Impact:

Privacy-conscious customers and partners increasingly select providers based on demonstrated security capabilities rather than policy promises. Organizations without privacy computing capabilities face exclusion from high-value market segments including:

- Government contracts requiring mathematical security guarantees

- Healthcare organizations processing PHI across jurisdictions

- Financial institutions handling sensitive transaction data

- Enterprise customers with strict IP protection requirements

Competitive Advantage Through Technical Differentiation:

Privacy computing enables organizations to transform regulatory compliance from a cost center into a competitive advantage. Instead of accepting security limitations, organizations can offer mathematically guaranteed privacy protection that enables new business models and premium pricing strategies.

Risk Mitigation and Total Cost of Ownership:

Beyond compliance benefits, privacy computing significantly reduces:

- Cyber insurance premiums through demonstrable risk reduction

- Legal liability exposure through technical privacy guarantees

- Regulatory audit costs through automated compliance verification

- Data breach impact through mathematical containment of potential exposure

The Strategic Imperative:

Organizations that fail to implement privacy computing face mounting regulatory penalties, reputational damage, and competitive disadvantage as privacy computing becomes the baseline expectation for enterprise AI services. Privacy computing is no longer optional but fundamental to sustainable AI+SaaS success.

Privacy computing offers solutions by enabling data to remain encrypted and protected even when processed in foreign jurisdictions, potentially satisfying regulatory requirements while maintaining operational flexibility—transforming an impossible compliance challenge into a competitive advantage.

2. Case Studies: The Necessity of Privacy Computing

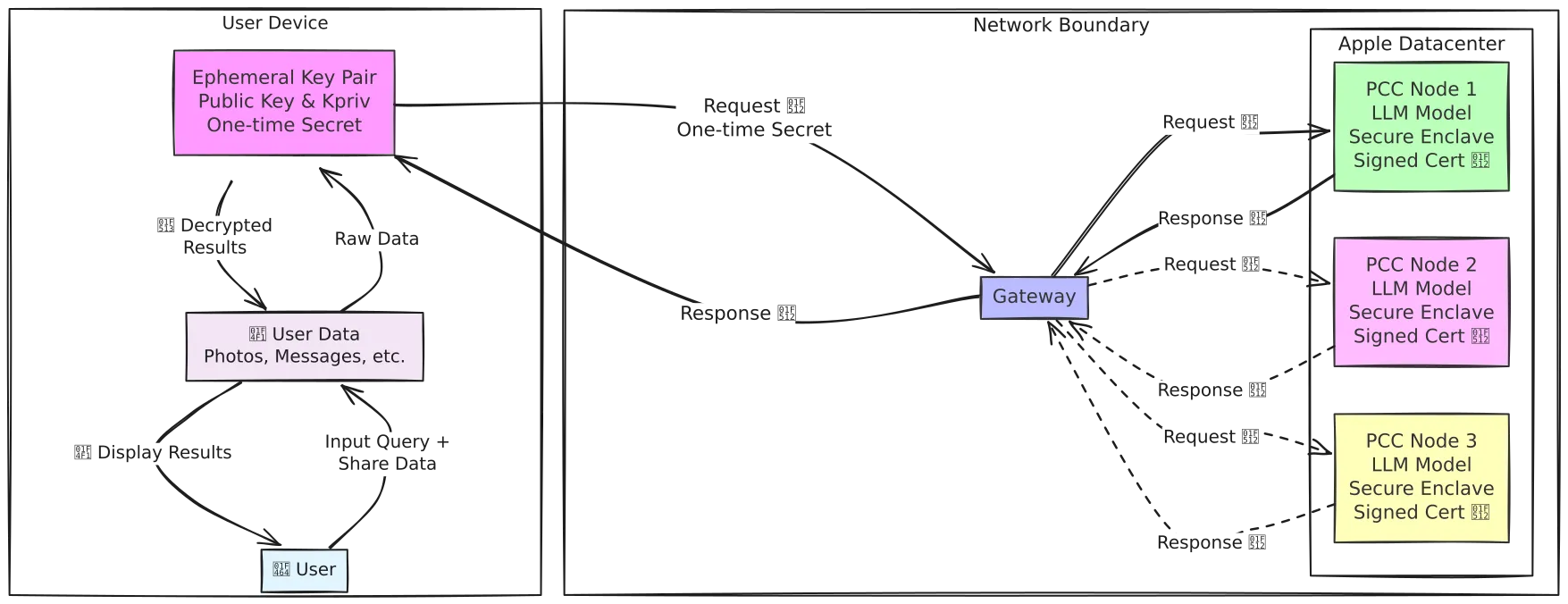

2.1 Apple Private Cloud Compute - Setting New Standards

PCC is built on custom Apple silicon servers that incorporate the same security features found in consumer devices, including Secure Enclave and Secure Boot capabilities. The operating system represents a hardened subset of iOS and macOS, specifically stripped of administrative interfaces and debugging capabilities that could compromise user data. PCC implements:

- Stateless computation: Cryptographically wipes user data from compute nodes upon completion

- Cryptographic guarantees: Only communicates with cryptographically attested nodes

- Public verifiability: Independent verification of system security properties

Industry Impact

Apple's implementation of PCC has raised the baseline expectations for privacy in cloud-based AI services. The system demonstrates that advanced AI capabilities and strong privacy protections are not mutually exclusive, challenging other cloud providers to develop comparable privacy-preserving technologies.

For AI+SaaS providers, PCC establishes a new competitive benchmark. Organizations that cannot demonstrate equivalent privacy protections may find themselves at a disadvantage when competing for privacy-conscious customers, particularly in regulated industries. The success of PCC has proven that privacy computing is commercially viable at scale, processing millions of user requests while maintaining mathematical guarantees of data protection.

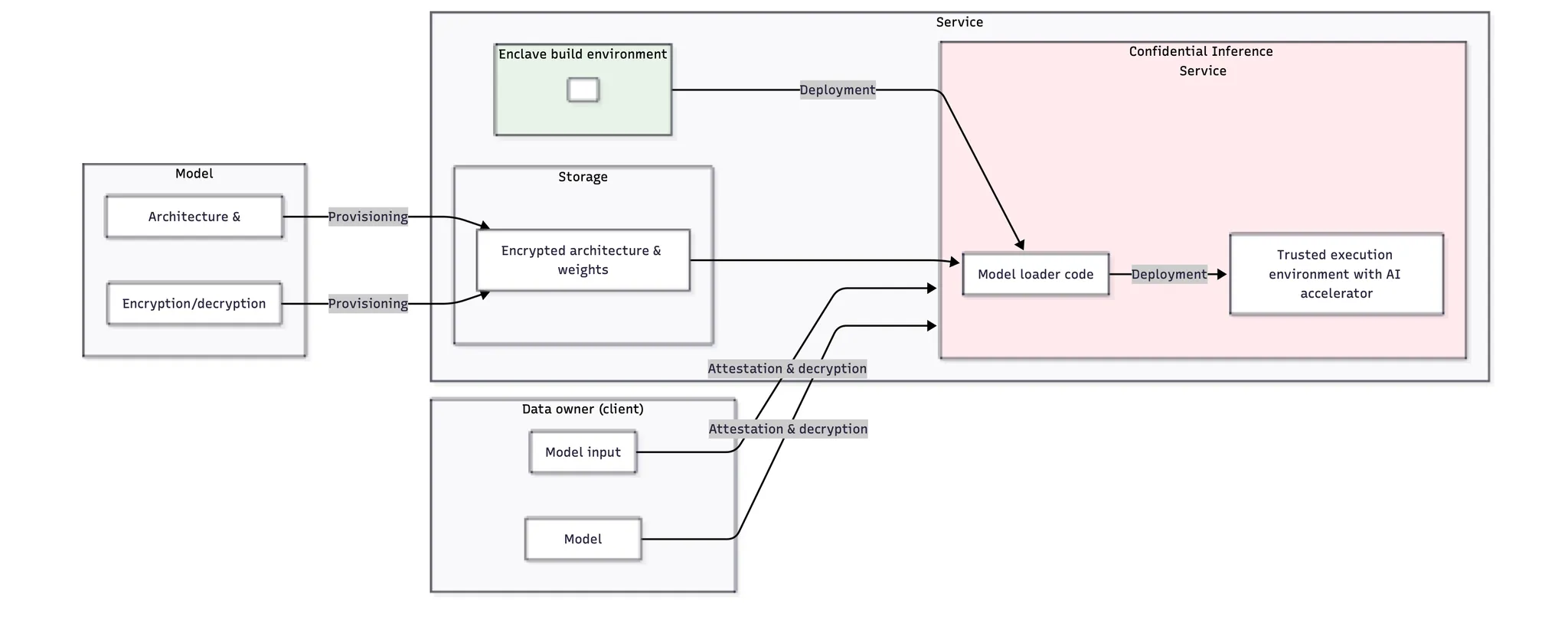

2.2 Anthropic's Strategic Investment in Confidential AI

Anthropic, the company behind Claude—one of the world's most trusted large language models with millions of users—has made a strategic decision to invest heavily in privacy computing technologies. Their comprehensive 32-page technical framework for confidential AI inference demonstrates that privacy computing is not a technical luxury, but a business imperative that directly impacts market access, competitive advantage, and revenue growth.

The architecture centers on Trusted Execution Environments (TEEs) with AI accelerator support, enabling high-performance model inference while maintaining cryptographic isolation:

Data Owner Protection: Client data remains encrypted end-to-end, with encryption keys controlled exclusively by the data owner. The system implements attestation protocols that allow clients to verify the integrity of the execution environment before transmitting sensitive data.

Model Owner Protection: Claude's architecture and weights are protected through encrypted storage and runtime isolation within TEEs. This prevents reverse engineering or intellectual property theft while enabling deployment to edge locations and customer premises.

Service Provider Assurance: The framework provides cryptographic proof of compliance and security posture, enabling Anthropic to serve high-security customers while maintaining operational efficiency.

Anthropic's comprehensive investment in privacy computing reflects market realities:

- Market Access: Enables processing of PII, PHI, and proprietary data in regulated industries

- Revenue Impact: Unlocks billions in addressable market opportunity in healthcare, financial services, and government

- Competitive Differentiation: Protects Claude model architecture through encrypted storage and runtime protection

- Risk Mitigation: Reduces compliance costs and cyber insurance premiums

3. Industry-Specific AI+SaaS Applications

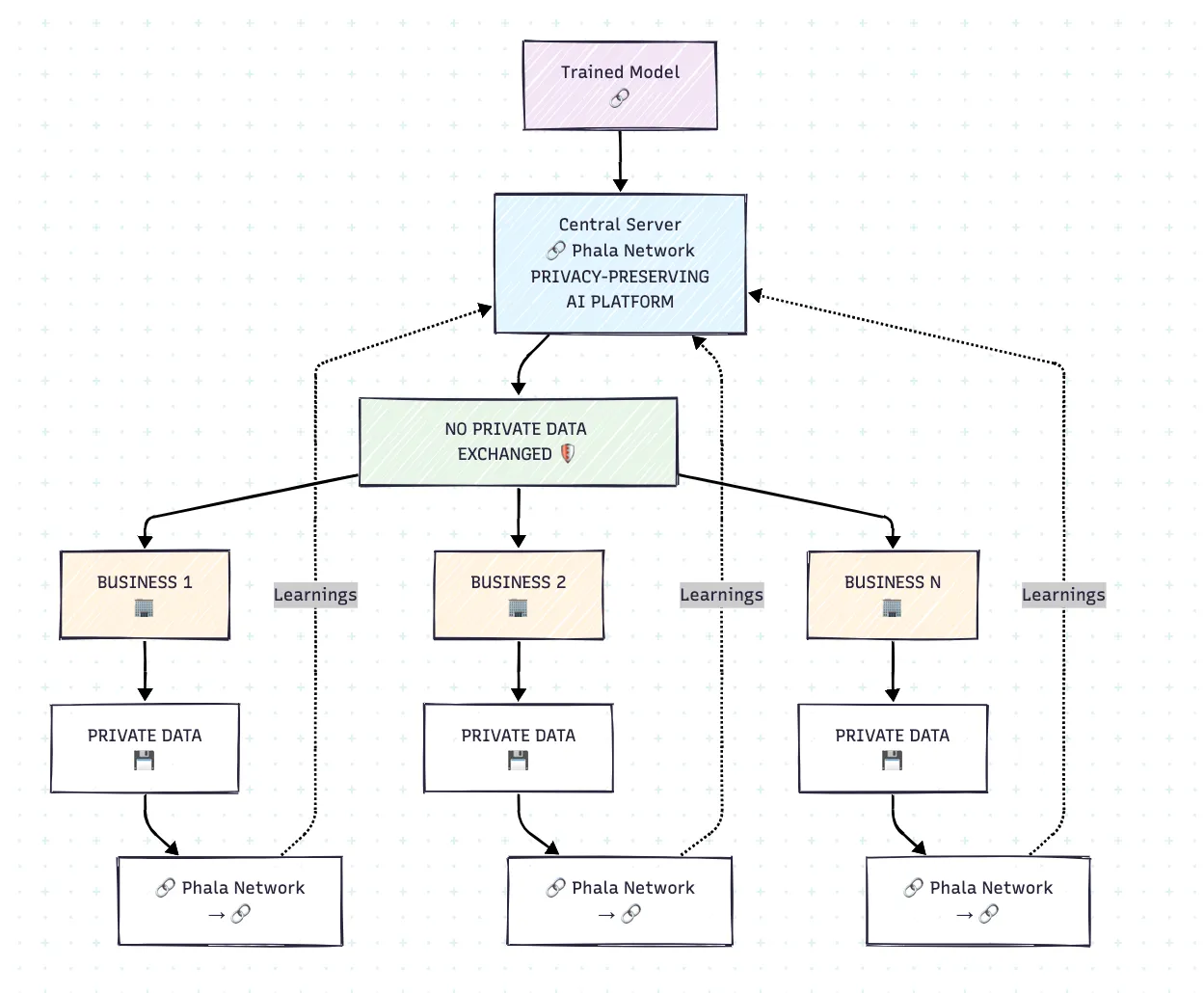

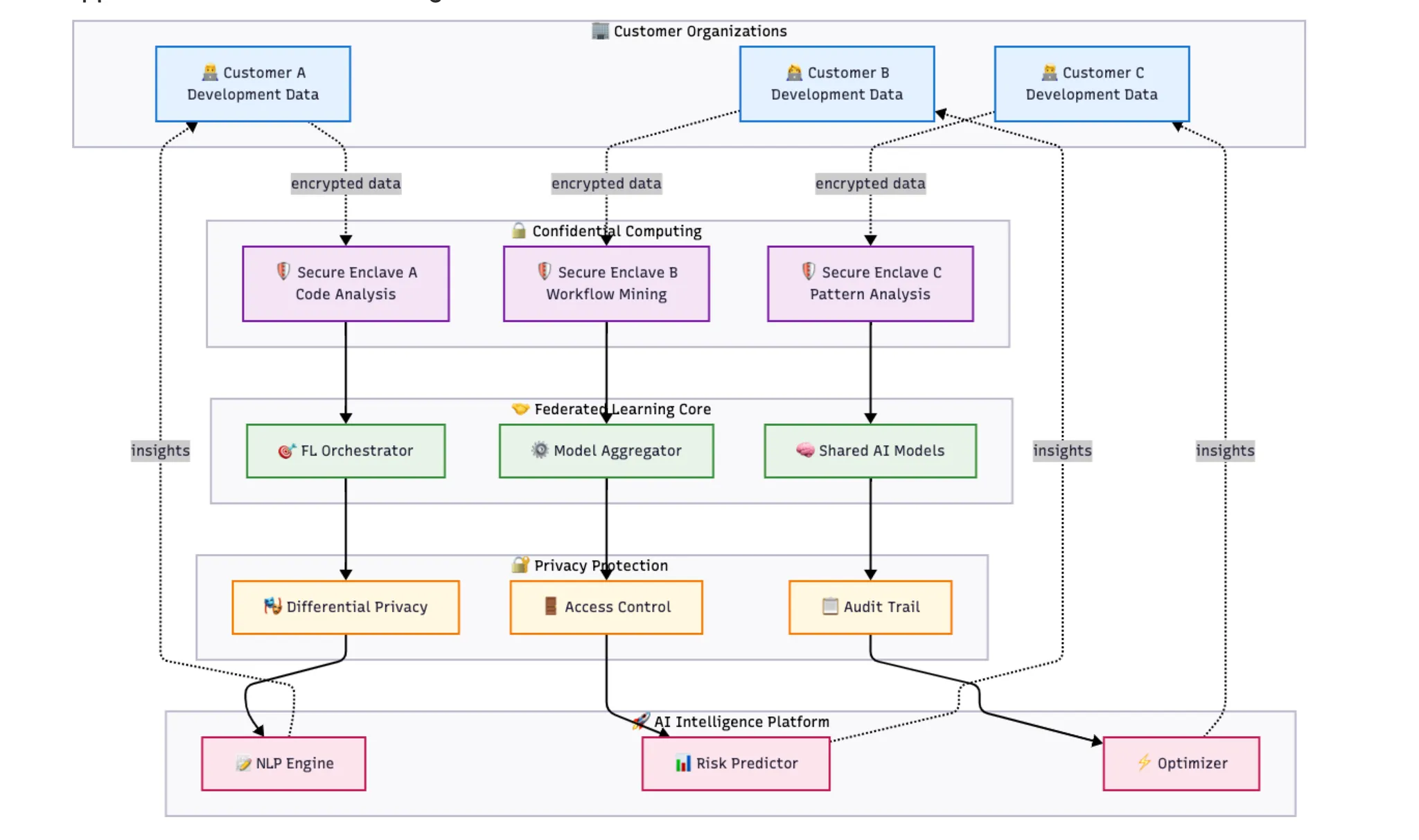

3.1 Privacy-preserving Data Collaboration Platform

Best practice industry: Cross-Industry/Company Data Partnership + AI + SaaS

Target applicable enterprises: Multi-bank/healthcare consortiums, insurance alliances, fintech partnerships requiring shared fraud detection, regulatory compliance coalitions.

Challenge

A consortium of multiple financial institutions, healthcare organizations, and telecommunications companies needed to develop advanced fraud detection and risk assessment AI models by leveraging combined data insights. However, regulatory requirements (GDPR, HIPAA, PCI DSS), competitive concerns, and data sovereignty policies prohibited direct data sharing, making collaborative AI development impossible through conventional methods.

Solution Highlights

TEE-Based Federated Learning: Implementation of federated learning protocols within trusted execution environments (TEEs), enabling each organization to train AI models locally while contributing encrypted model updates to a shared global model. Raw data never leaves each organization's security perimeter.

Secure Multi-Party Computation in TEEs: Advanced cryptographic protocols aggregate local model updates using homomorphic encryption within confidential computing enclaves. This prevents any participant from accessing others' proprietary information while enabling collaborative model improvement.

Hardware-Verified Attestation: Each federated learning node operates within verified TEEs providing cryptographic proof of system integrity. Remote attestation enables participants to validate that partners use approved software before sharing model updates, creating mathematically guaranteed trust.

Results and Impact

The federated learning implementation enabled participating organizations to develop AI models with 35% improved fraud detection rates compared to single-organization training, while false positive rates decreased substantially due to diverse training data representation. Privacy computing made this collaboration possible by providing mathematical guarantees that competitive information would remain protected throughout the process.

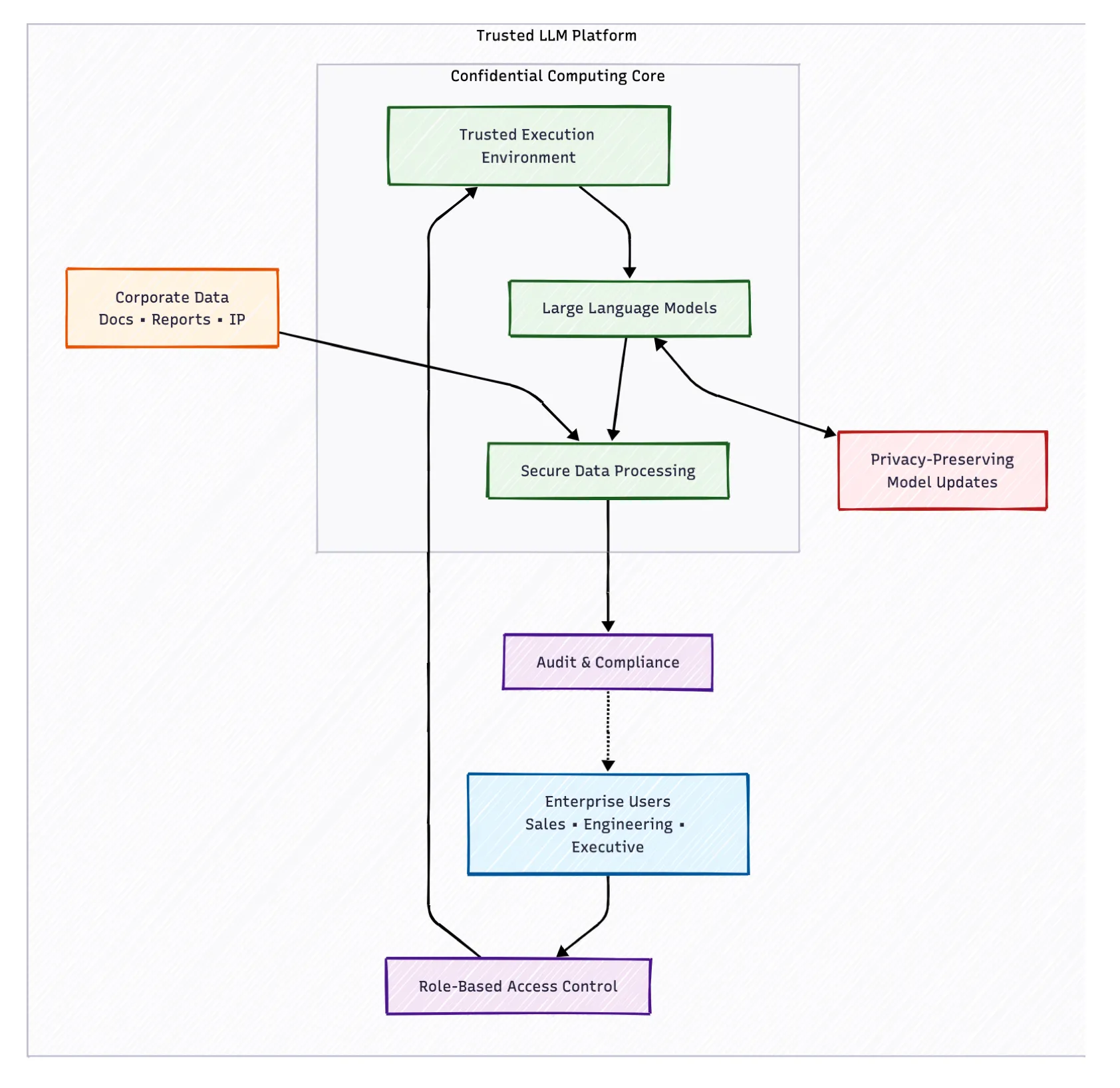

3.2 Enterprise Trusted Large Language Model Platform

Best practice industry: Enterprise Software + AI + SaaS

Target applicable enterprises: Fortune 500 technology companies, pharmaceutical R&D organizations, legal firms handling sensitive cases, defense contractors, investment banks with proprietary trading strategies.

Challenge

A multinational technology corporation required LLM capabilities across its global workforce while maintaining strict control over proprietary information and intellectual property. Corporate policies prohibited sending sensitive data to external AI services, creating a choice between accepting security risks or forgoing AI benefits entirely.

Solution Highlights

Confidential LLM Deployment: Enterprise-grade large language models deployed within confidential computing enclaves that process corporate data without exposing information to external parties. Hardware-level isolation ensures even cloud administrators cannot access proprietary information during AI processing.

Role-Based Secure Access in TEEs: Sophisticated access controls implemented within TEEs ensure employees only interact with data appropriate to their roles and security clearances. Fine-grained data isolation maintained while preserving AI functionality across different user personas.

Federated Learning for Model Improvement: Privacy-preserving federated learning protocols enable model enhancement across departments and geographic locations without centralizing sensitive information. Local model updates remain encrypted while contributing to overall AI capabilities.

Results and Impact

The implementation enabled global workforce access to AI capabilities without compromising intellectual property protection. Privacy computing made enterprise AI deployment feasible by providing mathematical guarantees that proprietary information would remain protected throughout processing, enabling new forms of cross-functional collaboration and knowledge management.

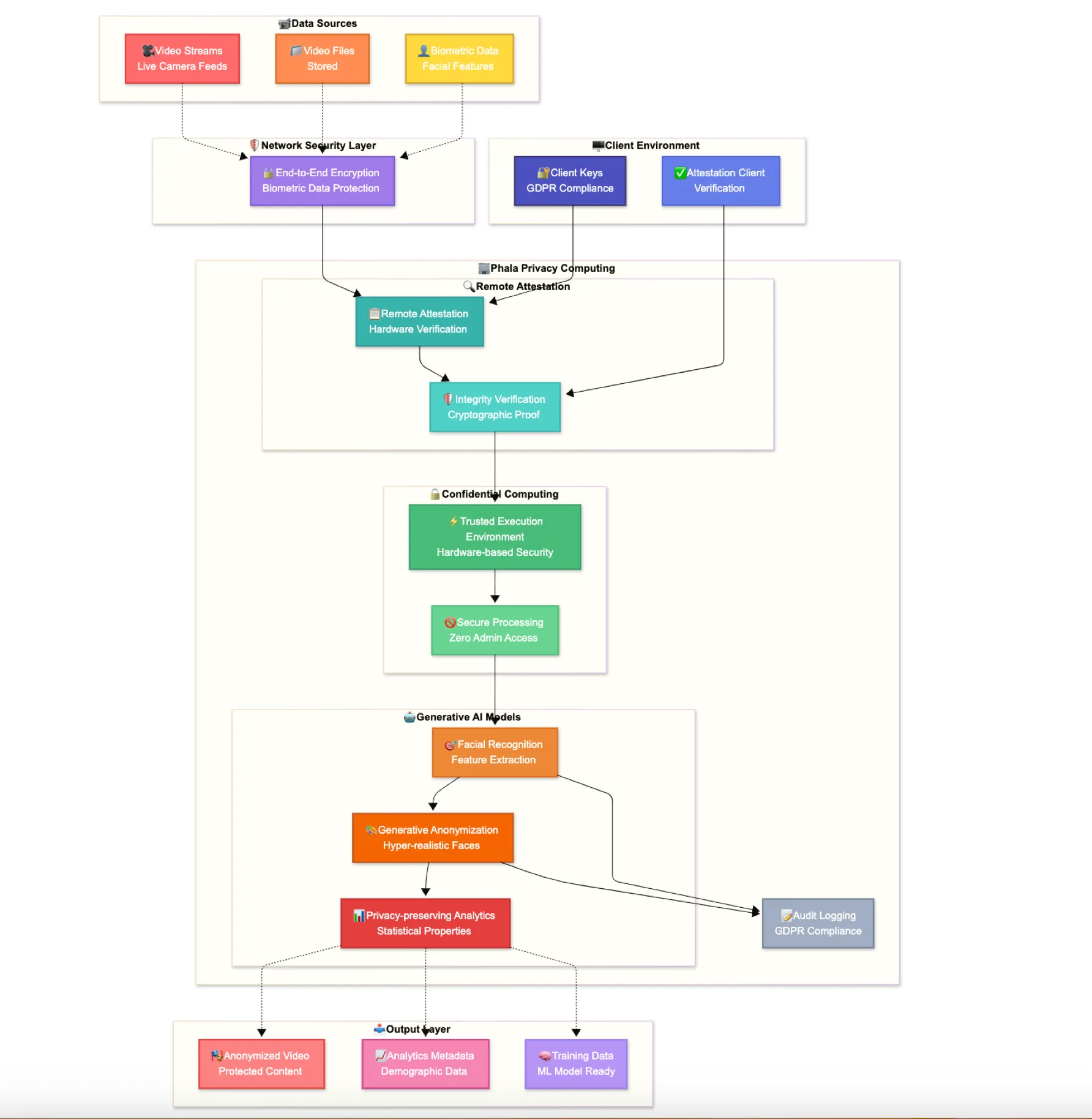

3.3 Biometric Data Protection Platform

Best practice industry: Identity Verification + AI + SaaS

Target applicable enterprises: Airport security systems, healthcare patient identification, financial KYC compliance, smart city surveillance, retail customer analytics, border control agencies

Challenge

Organizations across mobility, video communication, and analytics sectors needed to process biometric facial data while complying with GDPR biometric data protection requirements. Traditional approaches required either storing sensitive biometric data or accepting reduced analytical accuracy, creating compliance and security risks.

Solution Highlights

TEE-Based Biometric Processing: Sensitive facial recognition data processed within confidential computing enclaves that prevent access by infrastructure administrators. Hardware-protected boundaries ensure biometric data remains encrypted even during active processing by generative AI models.

Secure Generative AI Models: Generative AI models operate within trusted execution environments, creating hyper-realistic anonymous faces while maintaining demographic and emotional characteristics. TEE isolation prevents unauthorized access to both original biometric data and model parameters.

Remote Attestation for Client Trust: Cryptographic verification enables clients to verify that biometric data will be processed in authentic, uncompromised environments before transmission. Mathematical proof of system integrity independent of software security measures.

Results and Impact

The privacy computing implementation enabled the platform to serve high-security customers in government, healthcare, and financial services who previously could not adopt cloud-based biometric processing solutions. Performance testing demonstrates less than 15% processing latency overhead while providing comprehensive protection against data exposure.

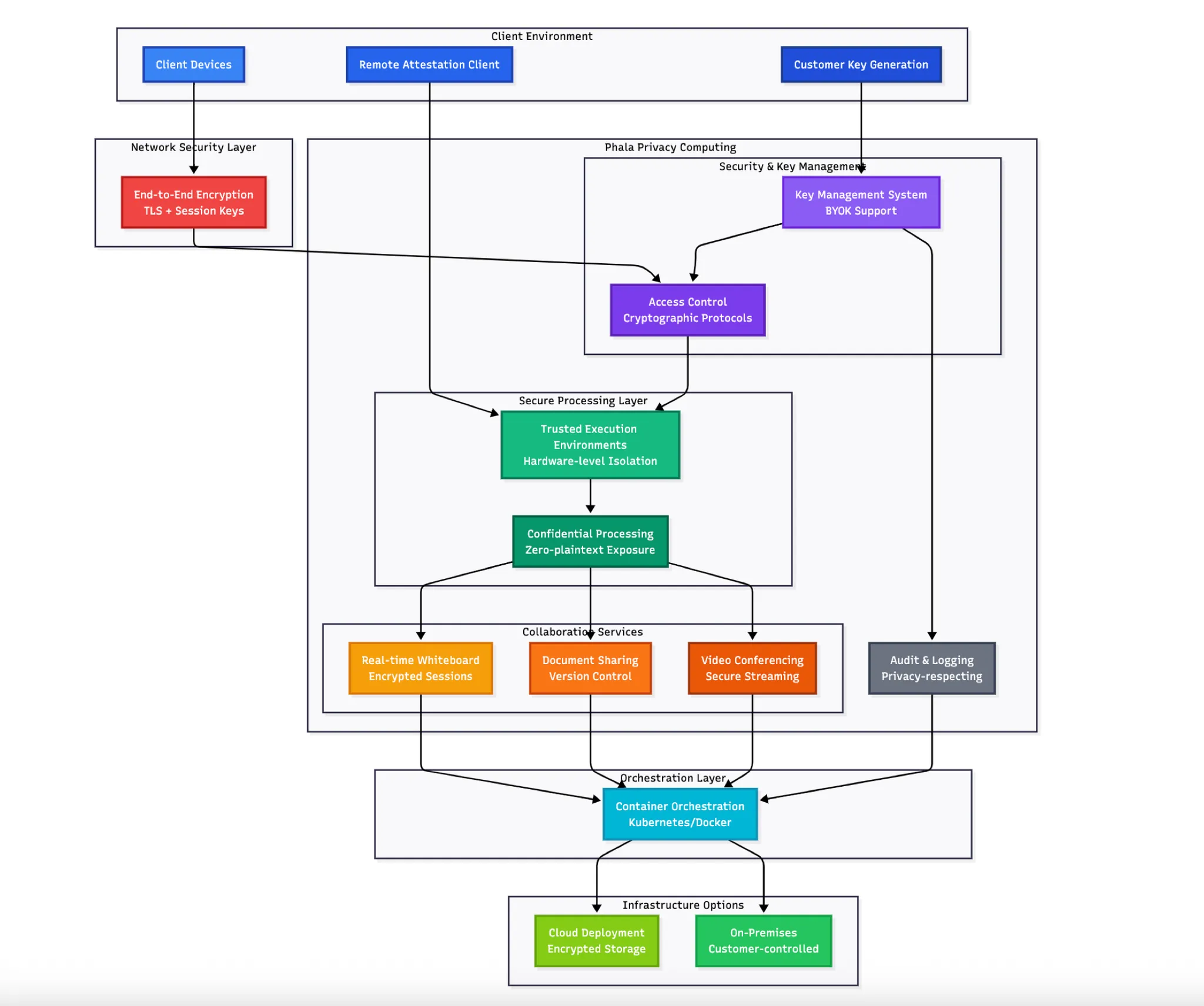

3.4 Secure Enterprise Collaboration Platform

Best practice industry: Government Technology + AI + SaaS

Target applicable enterprises: Federal agencies, defense contractors, research institutions, diplomatic missions, critical infrastructure operators, intelligence community organizations

Challenge

Government agencies, defense contractors, and regulated enterprises required advanced collaboration functionalities (real-time whiteboard, document sharing, video conferencing) but could not adopt mainstream cloud collaboration tools due to strict data sovereignty and security requirements prohibiting exposure to public cloud providers.

Solution Highlights

TEE-Based Collaboration Sessions: All collaboration sessions execute within trusted execution environments providing hardware-level isolation from cloud administrators and external entities. Zero-trust principles where sensitive data processing occurs without plaintext exposure.

End-to-End Encrypted Processing: Data remains encrypted throughout transmission, storage, and processing. TEEs enable computation on encrypted data without requiring decryption, addressing traditional vulnerabilities where data must be accessible for real-time processing.

Customer-Controlled Key Management: All encryption keys generated and controlled exclusively by customer environments. Bring Your Own Keys (BYOK) support with hardware-based security mechanisms ensuring key material never leaves secure client devices.

Results and Impact

The privacy computing implementation enabled long-term contracts with governments and defense organizations previously restricted from cloud collaboration due to compliance constraints. The flexible deployment model supporting on-premises and customer cloud environments enables organizations to choose data residency aligned with regulatory requirements, including specialized deployments for aerospace, defense, and public sector customers requiring the highest security standards.

3.5 AI-Powered Software Development Process Intelligence Platform

Best practice industry: DevOps Analytics + AI + SaaS

Target applicable enterprises: Software product companies, technology startups with competitive IP, gaming studios, autonomous vehicle developers, semiconductor design firms, aerospace software teams

Challenge

Software development organizations needed AI-powered insights into development processes for productivity optimization and risk prediction, but could not expose highly sensitive intellectual property including source code, development workflows, and proprietary methodologies to traditional cloud analytics platforms, creating unacceptable IP risks.

Solution Highlights

TEE-Protected Code Analysis: Development data including code repositories, CI/CD pipelines, and communication patterns analyzed within confidential computing enclaves maintaining complete IP confidentiality. Source code remains encrypted even during sophisticated NLP and ML analysis.

Federated Learning Across Customer Environments: Privacy-preserving federated learning protocols enable AI model training across multiple customer environments without centralizing sensitive development data. Each customer maintains cryptographic control over intellectual property while benefiting from shared model improvements.

Confidential Natural Language Processing: Advanced NLP models analyze documentation and communication content within TEEs providing strict confidentiality guarantees. Differential privacy techniques implemented within TEEs provide additional protection against inference attacks.

Results and Impact

The privacy-preserving approach enabled the platform to serve customers in highly competitive industries who previously could not adopt cloud-based development analytics due to intellectual property protection requirements. AI model accuracy improved through federated learning across diverse customer environments while maintaining customer confidence in data protection. Revenue growth from high-security customers exceeded projections, with many organizations expanding usage across additional development teams following successful initial deployments that demonstrated both analytical value and comprehensive IP protection.

The platform became a competitive differentiator for software development organizations seeking to optimize processes without compromising confidentiality, proving that privacy computing enables new business models in markets where traditional cloud analytics would be impossible due to intellectual property concerns.

4. Technical Deep Dive: Privacy Computing Technologies

Privacy computing enables organizations to maintain cryptographic control over data even when processing occurs on third-party infrastructure, addressing regulatory requirements while enabling cloud-scale resources.

Confidential computing represents a fundamental paradigm shift that extends encryption protection to data during processing—addressing the last remaining vulnerability in traditional security models. While conventional approaches protect data at rest and in transit, they require decryption during computation, creating exposure windows that privacy computing eliminates.

Hardware-Based Security Foundation:

Modern processors from Intel (SGX, TDX), AMD (SEV), and ARM (TrustZone) provide trusted execution environments (TEEs) that create hardware-enforced isolation boundaries. These processors deliver:

- Cryptographic guarantees independent of software security measures

- Remote attestation capabilities enabling verification of computing environment integrity

- Zero-trust architectures where security derives from verifiable technical properties rather than policy promises

Data Sovereign Architecture

Privacy computing enables organizations to maintain cryptographic control over data even when processing occurs on third-party infrastructure. This capability directly addresses regulatory requirements for data sovereignty while enabling access to cloud-scale computing resources—transforming the traditional trade-off between compliance and operational efficiency into a competitive advantage.

Data Clean Room Implementation

Data clean rooms enable privacy-preserving collaboration where multiple organizations analyze combined datasets without exposing underlying data to participants. Modern implementations use trusted execution environments for mathematical isolation, with computation in hardware-protected enclaves that prevent data exfiltration while enabling joint analysis. Advanced techniques including differential privacy and k-anonymity provide additional statistical privacy guarantees against inference attacks, transforming competitive data sharing from trust-based arrangements into cryptographically verified processes that unlock cross-organizational insights while maintaining complete data sovereignty.

5. Phala Enterprise Solution: Bridging Technical Innovation and Business Value

5.1 Comprehensive Security Architecture

Phala's enterprise-grade confidential computing platform addresses four critical security gaps that traditional cloud environments cannot solve through integrated security pillars:

1. Comprehensive Trust Measurement: Creates an unbroken verification chain from hardware microcode through BIOS, operating system, runtime environment, to application binaries—providing end-to-end software supply chain verification comparable to Apple's Private Cloud Compute.

2. Zero-Trust Network Architecture: Implements transparent end-to-end encryption for all communications with unique cryptographic keys per workload, preventing network compromise cascades while maintaining operational simplicity.

3. Hardware-Level Data Protection: Maintains encryption throughout the entire data lifecycle, including during computation within TEE environments, addressing the fundamental weakness of in-memory data exposure that enables devastating breaches.

4. Multi-Party Verification System: Phala's breakthrough approach implements enterprise consortium networks for immutable security attestation verification. This creates independently auditable proof of system integrity that satisfies the most stringent compliance requirements—enabling organizations to demonstrate rather than merely claim their privacy protection capabilities to regulators, auditors, and enterprise customers.

5.2 Enterprise Integration and Deployment Strategy

Seamless Operational Integration:

- Developer Experience: Standard Kubernetes workflows remain unchanged, minimizing learning curves and migration costs

- Two-Phase Deployment Model: Configuration phase for infrastructure setup, production phase allowing only verified business workloads

- Enterprise-Grade Compatibility: Full integration with existing CI/CD pipelines, monitoring systems, and security tools

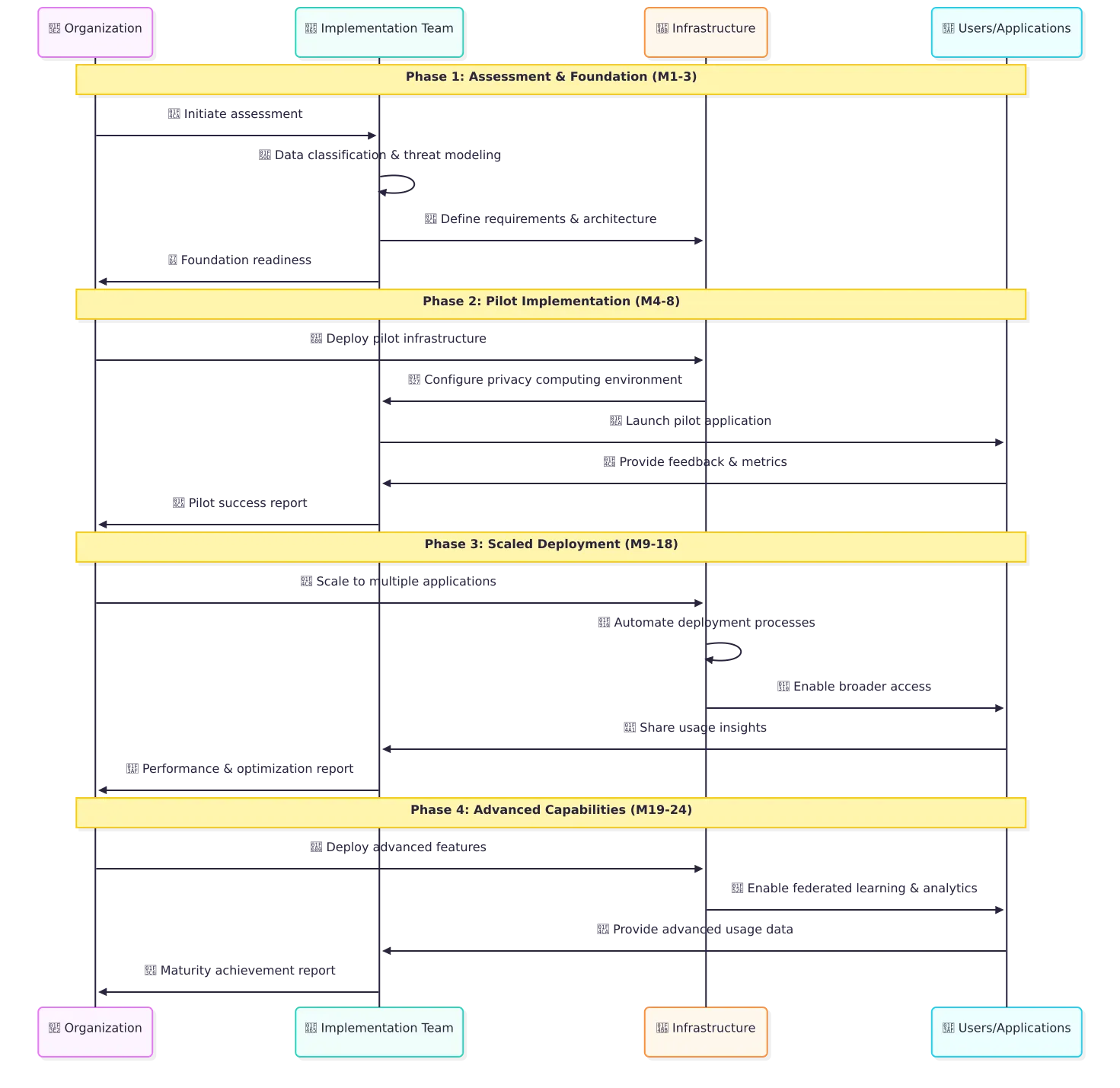

Phased Implementation Roadmap:

Phase 1: Assessment & Foundation (Months 1-3)

- Comprehensive data classification and threat modeling

- Technology selection aligned with business objectives

- Pilot project identification and governance framework establishment

Phase 2: Pilot Implementation (Months 4-8)

- Limited scope deployment with performance baseline establishment

- Security policy development and testing

- Lessons learned documentation and optimization planning

Phase 3: Scaled Deployment (Months 9-18)

- Extension across additional applications and use cases

- Process automation and staff training programs

- Integration with enterprise IT service management systems

Phase 4: Advanced Capabilities (Months 19-24)

- Federated learning and advanced analytics deployment

- Cross-cloud verification framework implementation

- Strategic planning for ecosystem-wide privacy computing adoption

6. Strategic Implementation and Competitive Transformation

6.1 Business Value Across Stakeholder Categories

Privacy computing fundamentally transforms how organizations approach regulatory compliance and market positioning by replacing policy-based assurances with mathematical guarantees. This creates unprecedented competitive advantages across three critical stakeholder categories:

- Regulatory Compliance: Cryptographic verification satisfying GDPR, CCPA, HIPAA, and emerging AI governance requirements through technical demonstration rather than policy documentation

- Secure Multi-Party Collaboration: Enable cross-organizational data sharing without compromising data sovereignty

- Risk Mitigation: Hardware-level isolation eliminates insider threats while immutable verification logs provide audit readiness

Cloud Provider Differentiation:

- Verifiable Security Guarantees: Demonstrate rather than merely assert security capabilities through verification

- Competitive Advantage: Convert hardware-level security into measurable business value propositions

- Market Expansion: Enable new use cases requiring absolute data confidentiality in regulated industries

AI+SaaS Provider Advantages:

- Enhanced Security Posture: Cryptographic proof of application and data integrity addressing enterprise adoption barriers

- Cross-Cloud Portability: Consistent security guarantees across different environments and jurisdictions

- Zero-Trust Architecture: Enable secure operation in untrusted environments while maintaining full functionality

6.2 Strategic Implementation Framework for Competitive Advantage

Immediate Market Opportunities:

The convergence of regulatory evolution, customer expectations, and competitive dynamics creates unprecedented opportunities for organizations implementing privacy computing capabilities. Early adopters are capturing:

- Premium Market Access: High-value customer segments requiring mathematical security guarantees, particularly in government, healthcare, and financial services where privacy computing provides significant competitive differentiation

- First-Mover Advantages: Privacy-enabled organizations are securing long-term customer relationships and premium pricing through demonstrated security capabilities that traditional approaches cannot match

- Regulatory Leadership: Proactive compliance positioning as requirements continue evolving, with privacy computing establishing organizations as industry leaders in data protection standards

Investment Prioritization Framework:

- High-Impact Use Cases: Focus on applications with clear business benefits and regulatory requirements

- Partnership Strategy: Leverage specialized expertise while building internal capabilities

- Skills Development: Critical investment determining long-term success and operational sustainability

6.3 Call to Action: Seizing the Privacy Computing Advantage

Privacy computing represents both a fundamental market opportunity and competitive necessity. The evidence demonstrates that organizations successfully implementing privacy computing capabilities will capture high-value markets and partnership opportunities that define tomorrow's AI economy.

Next Steps for Organizational Leaders:

- Conduct Comprehensive Assessment: Evaluate current privacy posture against regulatory requirements and competitive positioning needs

- Engage Technology Partners: Understand available options and develop preliminary implementation plans focused on business requirements

- Begin Skills Development: Invest in internal capabilities for privacy computing implementation and operations

The Future Competitive Landscape:

The future of AI+SaaS applications will be defined by organizations that successfully balance innovation with privacy protection. Privacy computing technologies provide the foundation for this balance, enabling continued growth and innovation while meeting the highest standards of data protection.

Organizations that act decisively to implement privacy computing capabilities today will be best positioned for success in the evolving digital economy—transforming regulatory compliance from a cost burden into a competitive advantage that opens new markets, enables premium pricing, and builds unassailable customer trust through mathematical guarantees rather than policy promises.

About Phala Network: Leading provider of privacy computing solutions enabling organizations to harness AI power while maintaining complete data sovereignty and regulatory compliance. Contact our enterprise solutions team to begin your privacy computing transformation and capture the competitive advantages that mathematical privacy protection delivers.