The Need for Trust in Fintech AI Agents

AI agents are increasingly capable of managing finances – from executing trades to controlling crypto wallets – but trust remains the critical barrier. Users and developers face a “triangle of distrust” involving the agent, its creators, and the community. Without strong guarantees, a user cannot be sure an autonomous trading bot or investment agent won’t be tampered with by its developer or compromised by outside interference. This is especially unacceptable when actual capital is at stake. As one report succinctly put it, “AI agents are only as useful as they are trustworthy—especially when managing money.” In practice, many AI agents today run on cloud servers in opaque environments, requiring users to hand over full wallet access with little transparency. Such traditional setups create huge risk – the agent might go rogue, get spoofed, or be hijacked. Clearly, if we want users (and regulators) to embrace AI-driven finance, we need a new trust architecture for these agents.

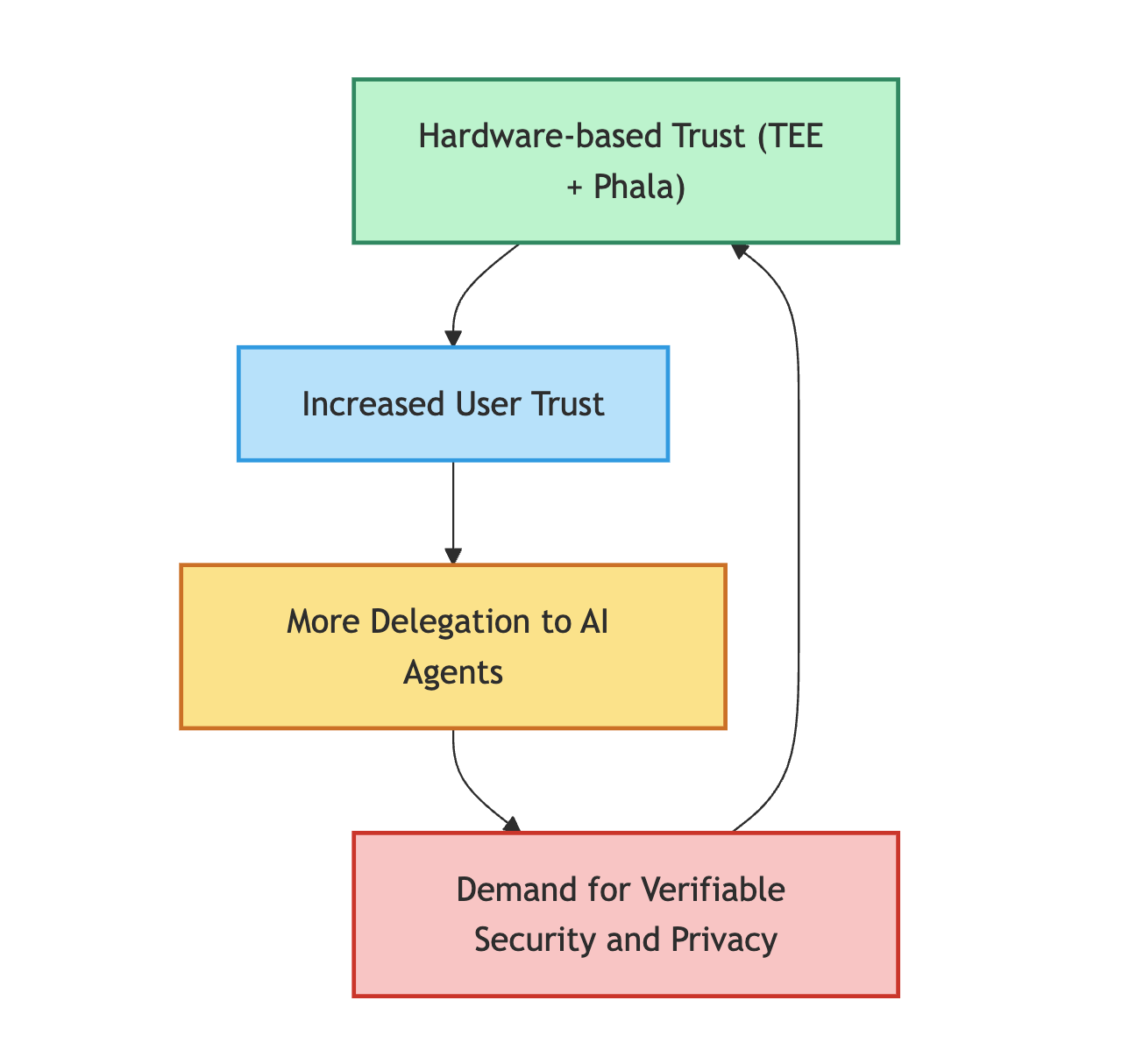

Trust creates a virtuous cycle in the adoption of AI agents. By introducing hardware-based trust, users feel safe delegating financial tasks to AI, which drives more usage and further innovation. Ensuring verifiable security and privacy builds confidence, leading to broader adoption of autonomous agents in fintech.

TEE as a Solution

Trusted Execution Environments (TEEs) offer a powerful solution to break the distrust loop. A TEE is essentially a secure enclave in a processor that ensures the code and data inside it are protected and tamper-proof, even from the machine’s operator. In other words, TEEs act as cryptographic black boxes – once an AI agent’s code is loaded into a TEE, not even the server admin or cloud provider can alter its execution or peek at its secrets. This guarantees that an agent will run exactly the code it’s supposed to, and that sensitive data (like private keys, API credentials, or user financial info) stays confidential.

For fintech AI agents, this hardware-backed isolation is transformative. By running agent logic inside TEEs, we remove any additional trust assumptions beyond the code itself. The community doesn’t have to “just trust” the developer’s server or a black-box cloud service – they can verify the agent’s integrity via cryptographic proofs (a process known as remote attestation). In effect, TEEs allow AI agents to operate in a trustless manner: users no longer need to monitor the agent in real-time or fear hidden tampering, because the enclave guarantees the agent’s code and decisions cannot be interfered with externally.
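To make the remote attestation step concrete, here is a minimal sketch of what a verifier checks: that the report is signed by the hardware, that it is fresh, and that the code measurement matches the code the community expects. The names `HARDWARE_KEY`, `make_report`, and `verify_report` are hypothetical, and HMAC stands in for the asymmetric, vendor-rooted signature that real TEEs use, purely so the example runs on the standard library.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for the hardware root of trust. Real TEEs sign quotes
# with an asymmetric key rooted in the chip vendor's certificate chain; HMAC is
# used here only so the sketch runs with the standard library.
HARDWARE_KEY = b"demo-hardware-key"


def measure(code: bytes) -> str:
    """Return the code measurement: a SHA-256 digest of the loaded agent code."""
    return hashlib.sha256(code).hexdigest()


def make_report(code: bytes, nonce: str) -> dict:
    """Simulate the enclave producing an attestation report for its code."""
    body = {"measurement": measure(code), "nonce": nonce}
    payload = json.dumps(body, sort_keys=True).encode()
    signature = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_report(report: dict, expected_measurement: str, nonce: str) -> bool:
    """Check the signature, the freshness nonce, and the code measurement."""
    payload = json.dumps(report["body"], sort_keys=True).encode()
    expected_sig = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, report["signature"]):
        return False  # report was not produced by the trusted hardware
    if report["body"]["nonce"] != nonce:
        return False  # stale or replayed report
    return hmac.compare_digest(report["body"]["measurement"], expected_measurement)
```

A verifier that knows the hash of the published agent code would call `verify_report(report, measure(published_code), nonce)` and refuse to interact with the agent if it returns `False`.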

How Phala’s TEE Cloud Brings Trust to AI Agents

Phala Network has built a trustworthy cloud platform that leverages TEEs to provide secure, verifiable compute for AI agents. Phala’s infrastructure combines TEE hardware (Intel TDX and Nvidia GPU TEEs) with blockchain integration for attestation and auditing. In practical terms, Phala’s network of TEE nodes acts as a “trust anchor” for AI: agents deployed on Phala Cloud run inside secure enclaves, and each agent’s execution can produce a remote attestation report proving it ran the intended code with integrity. These proofs can be checked on-chain by a smart contract or off-chain by users to verify the agent’s actions.

By using Phala’s TEE cloud, AI agent developers get several critical features by default:

- Confidential Execution: The agent’s trading logic, strategies, and sensitive data execute inside an isolated enclave, invisible to any outside party – even the developer themselves. This means user secrets (API keys, private keys, etc.) never leak, and even if the host operating system is compromised, the agent’s internal state remains secure.

- Wallet-Bound Compute: The AI agent can securely control its own on-chain wallet, with cryptographic guarantees that no one else – not even its creator – can access or override those keys. This is achieved by generating and storing the agent’s keypair inside the TEE, so the private key never leaves the enclave. The agent becomes a sovereign actor that holds and manages funds autonomously under policy constraints, without a custodian.

- Attestation & Verifiability: Every action the agent takes can be accompanied by a cryptographic attestation (signature) from the enclave, proving the decision was made by the AI running the approved code (and not by a human or rogue program). This on-chain/off-chain verifiability provides accountability – the public or regulators can audit that the agent’s transactions were indeed authorized by the AI logic, not a meddling intermediary.

These properties fundamentally change the game. “This is what makes autonomous fund management possible. It’s not AI-as-a-service – it’s AI as a sovereign, trusted entity.” With TEEs ensuring an agent’s integrity and privacy, users are willing to grant AI agents more control (like trading permissions or wallet access) because that trust is backed by silicon and cryptography, not just promises. In short, Phala’s TEE solution lets fintech AI agents evolve from a “trusted backend” model to a “trustless infrastructure” where neither the user nor the developer has to worry about unseen interference. The result is greatly enhanced confidence: users feel safe letting AI automate complex financial tasks, knowing that strict guardrails are enforced by hardware and code.
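The wallet-bound compute property can be illustrated with a toy model: the keypair is generated inside the enclave object, only the public key is exposed, and outsiders verify signatures without ever seeing the secret. This is a sketch under loud assumptions. `EnclaveWallet` is a hypothetical class, not a Phala API, and a Lamport one-time signature is swapped in for the ECDSA or Ed25519 keys a real on-chain wallet would use, only because it can be written with the standard library.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(message: bytes) -> list[int]:
    """Return the 256 bits of the message's SHA-256 digest."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


class EnclaveWallet:
    """Toy enclave: the secret key is created inside and has no getter."""

    def __init__(self) -> None:
        # Secret key: 256 pairs of random values, generated "inside the enclave".
        self._secret = [(secrets.token_bytes(32), secrets.token_bytes(32))
                        for _ in range(256)]
        # Public key: the hashes of those values; safe to publish.
        self.public_key = [(_h(a), _h(b)) for a, b in self._secret]
        self._used = False

    def sign(self, transaction: bytes) -> list[bytes]:
        # Lamport keys are one-time: signing twice would leak the secret.
        if self._used:
            raise RuntimeError("one-time key already used; rotate the key")
        self._used = True
        return [self._secret[i][bit] for i, bit in enumerate(_bits(transaction))]


def verify(public_key, transaction: bytes, signature: list[bytes]) -> bool:
    """Anyone holding the public key can check a signature."""
    if len(signature) != 256:
        return False
    return all(_h(sig) == public_key[i][bit]
               for i, (sig, bit) in enumerate(zip(signature, _bits(transaction))))
```

The design point is the shape of the interface rather than the signature scheme: the only operations available to the outside world are reading `public_key` and asking the enclave to `sign`, so there is no code path that returns the secret.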

Key Benefits for Fintech AI Platforms

Adopting TEE security for AI agents yields several concrete benefits for fintech use cases:

- User Confidence & Adoption: When users know an agent’s operations are cryptographically secure and verifiable, they are far more likely to trust it with sensitive tasks like portfolio management or payment automation. For example, Magic Newton showed that adding TEE-based verifiability turned crypto automation into a “leap forward for crypto UX: automation without loss of custody or control.” By preserving self-custody and privacy, TEEs remove the fear factor that holds back adoption.

- Autonomous yet Accountable Agents: TEEs enable agents to act independently with minimal human oversight, while still being held accountable to rules. Agents can be given high-level goals (“optimize my yield”) and operate within strict, user-defined constraints – knowing any disallowed action simply won’t compute in the enclave. Every decision is logged and provable after the fact. This balance of autonomy and oversight is essential in finance, where compliance and auditability are as important as efficiency.

- Eliminating Single Points of Failure: Traditional bots often concentrate risk – a single server breach or rogue admin could spell disaster. In contrast, a TEE-based architecture distributes trust to hardware enclaves. Some projects like NEAR’s Shade Agents even combine multiple TEEs with on-chain multi-signature schemes to avoid any single point of failure. By leveraging decentralized TEE networks (like Phala’s) and techniques like remote attestation, fintech agents can be made resilient and trust-minimized at both the software and hardware levels.

- Regulatory Compliance & Safety: A non-custodial, verifiable agent architecture can help satisfy regulators that user funds are not at risk of mismanagement. For instance, Crossmint’s Agent Wallet solution uses a dual-key model where the user retains an owner key, and the agent’s TEE-held key only signs allowed transactions. The platform itself never holds custody, reducing legal liability. Such designs, made possible by TEE isolation, align with compliance requirements while still enabling full automation.
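The claim that every decision is logged and provable after the fact can be sketched with a hash-chained log, where each entry commits to the one before it. This is one common way to build a tamper-evident record, not necessarily what any project named here uses; the function names are hypothetical. It also assumes the latest hash is anchored somewhere trustworthy (for example, signed by the enclave or posted on-chain), since a chain alone can be rebuilt from scratch by whoever holds it.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, decision: dict) -> str:
    """Hash a decision together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "decision": decision}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_decision(log: list, decision: dict) -> None:
    """Append a decision, linking it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "decision": decision,
        "prev": prev_hash,
        "hash": _entry_hash(prev_hash, decision),
    })


def verify_log(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor who holds only the final hash can replay the log with `verify_log` and detect any after-the-fact edit to an earlier decision.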

In summary, TEEs provide the technical trust foundation that fintech AI agents need in order to handle real assets. Next, let’s explore several case studies where TEE-powered agents are already making trustless finance a reality.

Case Studies: Fintech AI Agents Secured by TEE

Virtuals Protocol – Launchpad for On-Chain Agents

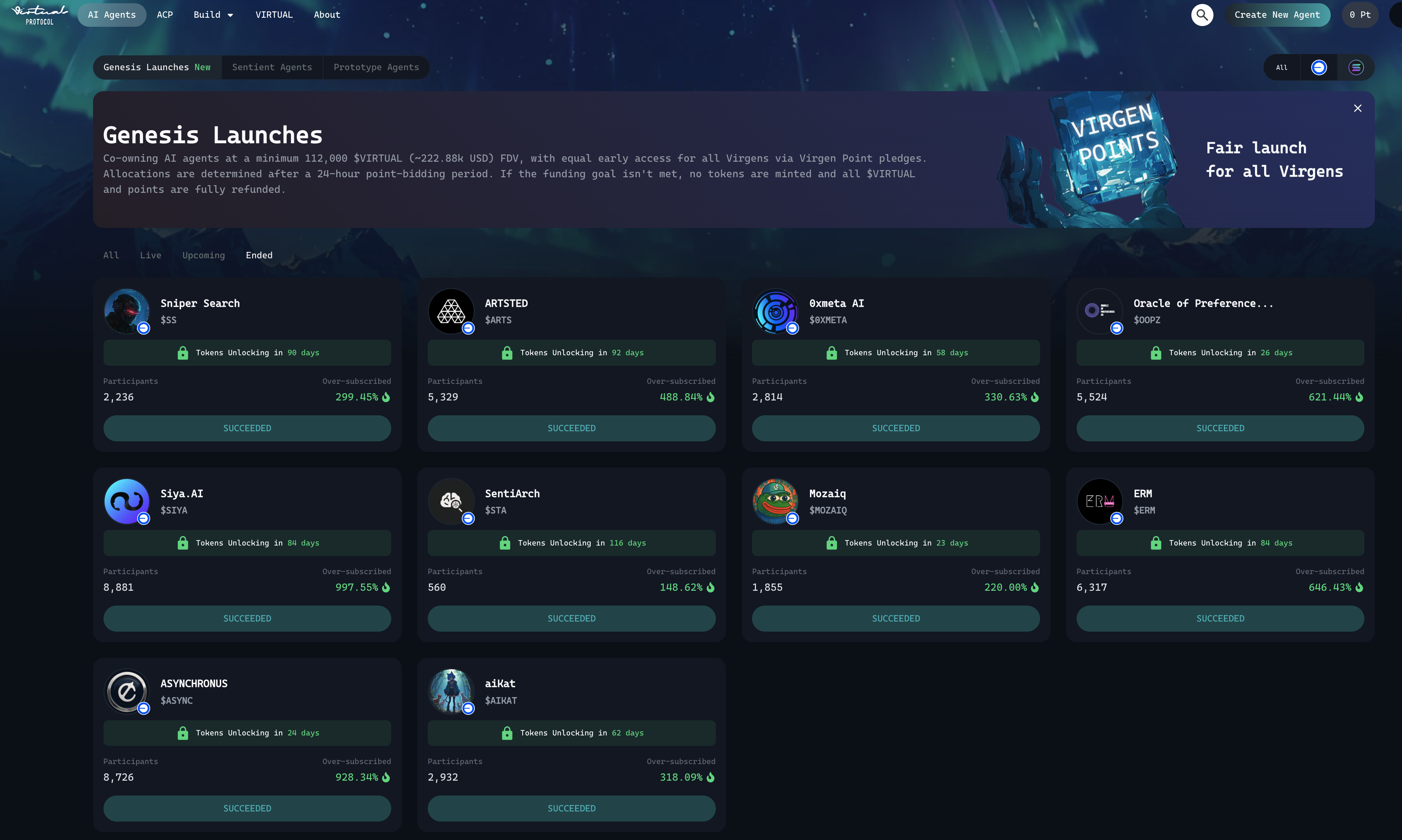

Virtuals Protocol is a Web3 launchpad on Base designed to simplify creating, deploying, and monetizing AI agents. On Virtuals, each agent can even have an on-chain smart wallet (ERC-6551) to execute blockchain transactions and manage digital assets on behalf of users. This opens exciting “money-wise” use cases – for example, an agent could autonomously accrue revenue or trade tokens for a user. However, Virtuals’ model also highlights the importance of trust: giving an AI agent an actual wallet and funds is only viable if users believe the agent will operate correctly and securely. Without TEE protections, users would have to trust that the agent’s code (and the launchpad) won’t be malicious or compromised – a hard sell for significant funds.

Phala’s TEE infrastructure addresses this gap. While Virtuals Protocol’s GAME Engine initially integrated TEE at the SDK level, some agents in its ecosystem have also begun leveraging Phala’s confidential compute to bolster user trust. For instance, Rabbi Schlomo (covered below) is a Virtuals-affiliated agent that uses Phala’s TEE cloud to ensure its trading automations are verifiable and secure. Broadly, a launchpad like Virtuals can greatly benefit from TEE enablement: it could allow any agent deployed via the platform to run in a secure enclave with remote attestation. This would mean community members can trust agents listed on Virtuals because each agent’s wallet operations and decisions are cryptographically attested as the genuine AI logic, free from developer interference. In essence, TEE integration can turn Virtuals’ “AI agents as tokenized assets” vision into a truly trustless marketplace of autonomous financial agents. By addressing the trust issue, Virtuals Protocol stands to accelerate adoption of on-chain AI agents beyond experimental use into real-world finance.

Eliza OS – Secure Agent Swarms in Web3

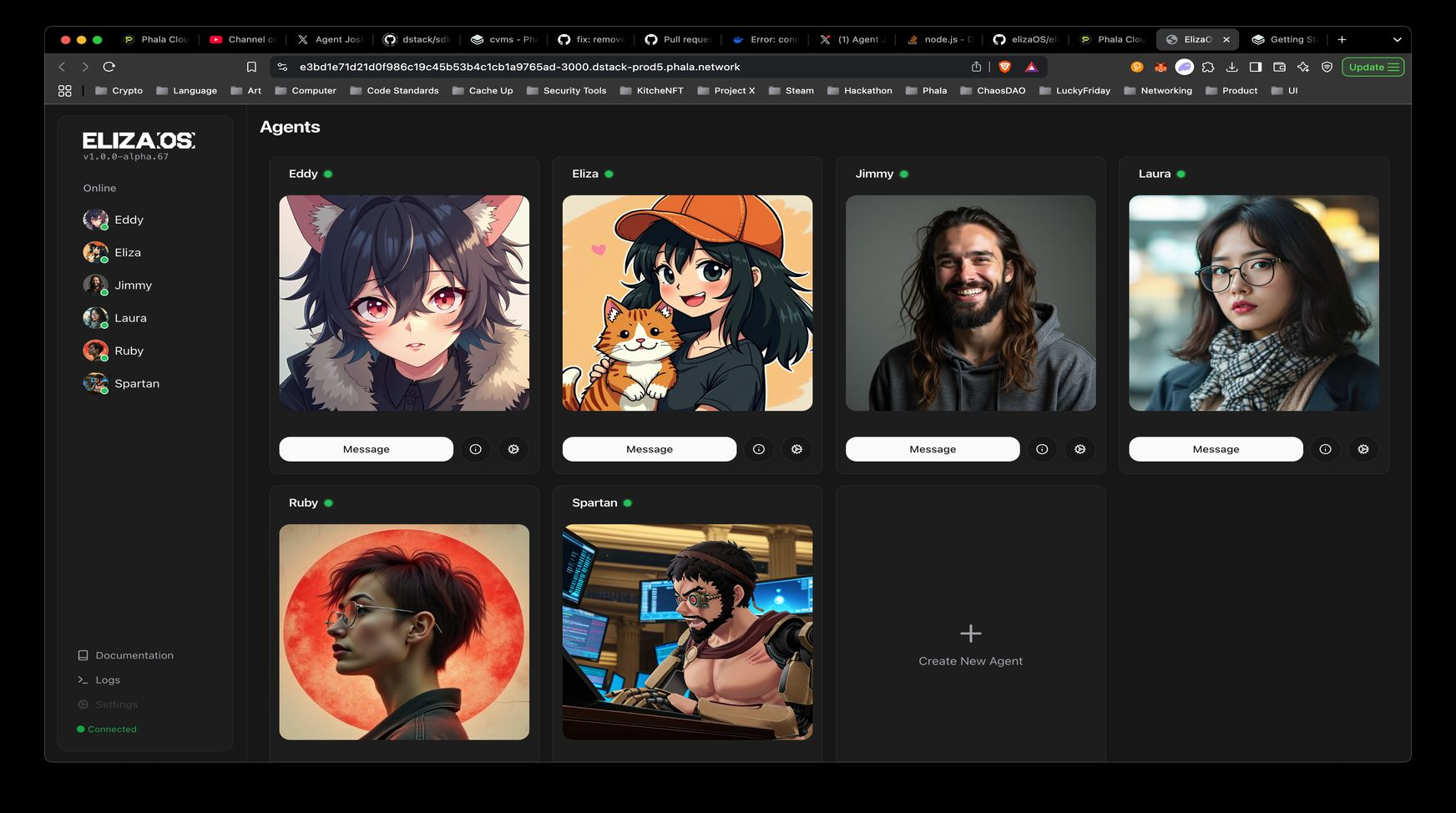

Eliza OS is an open-source “operating system” for autonomous AI agents (nicknamed “Elizas”). Launched in 2024, Eliza is built to support agents that can read/write blockchain data, interact with smart contracts, analyze on-chain information, and even execute trades autonomously. The goal is to “democratize” AI agent deployment in Web3 – imagine individual investors having personal AI traders or assistants managing their crypto portfolios. By design, Eliza encourages deploying not just single agents but swarms of interoperating agents to tackle complex tasks.

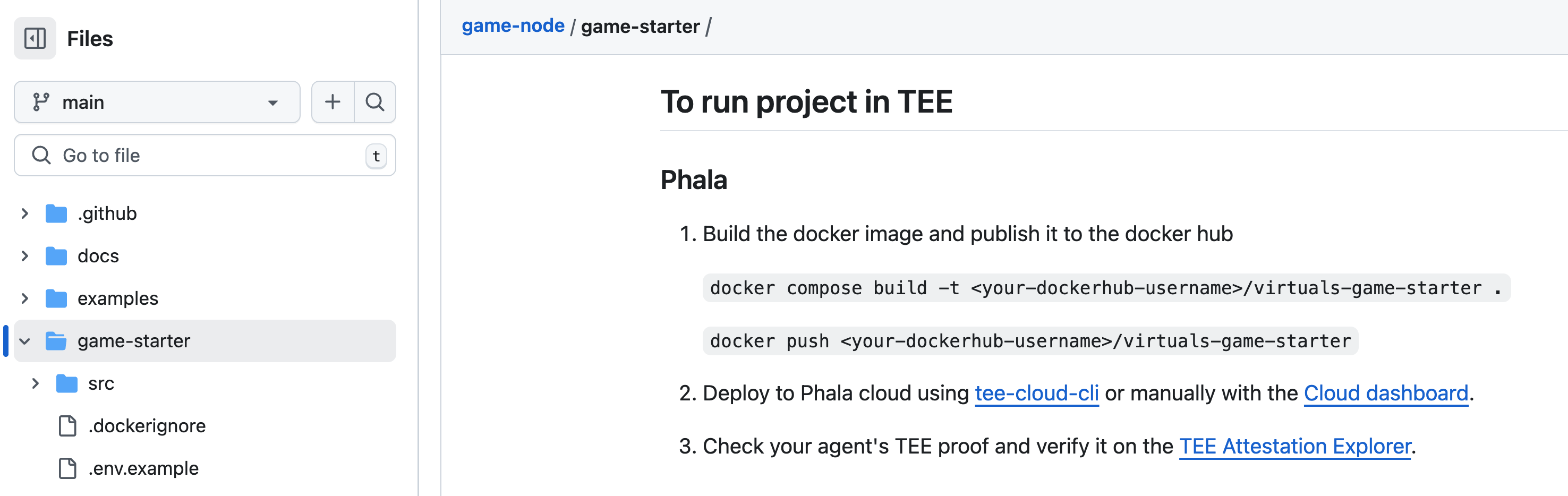

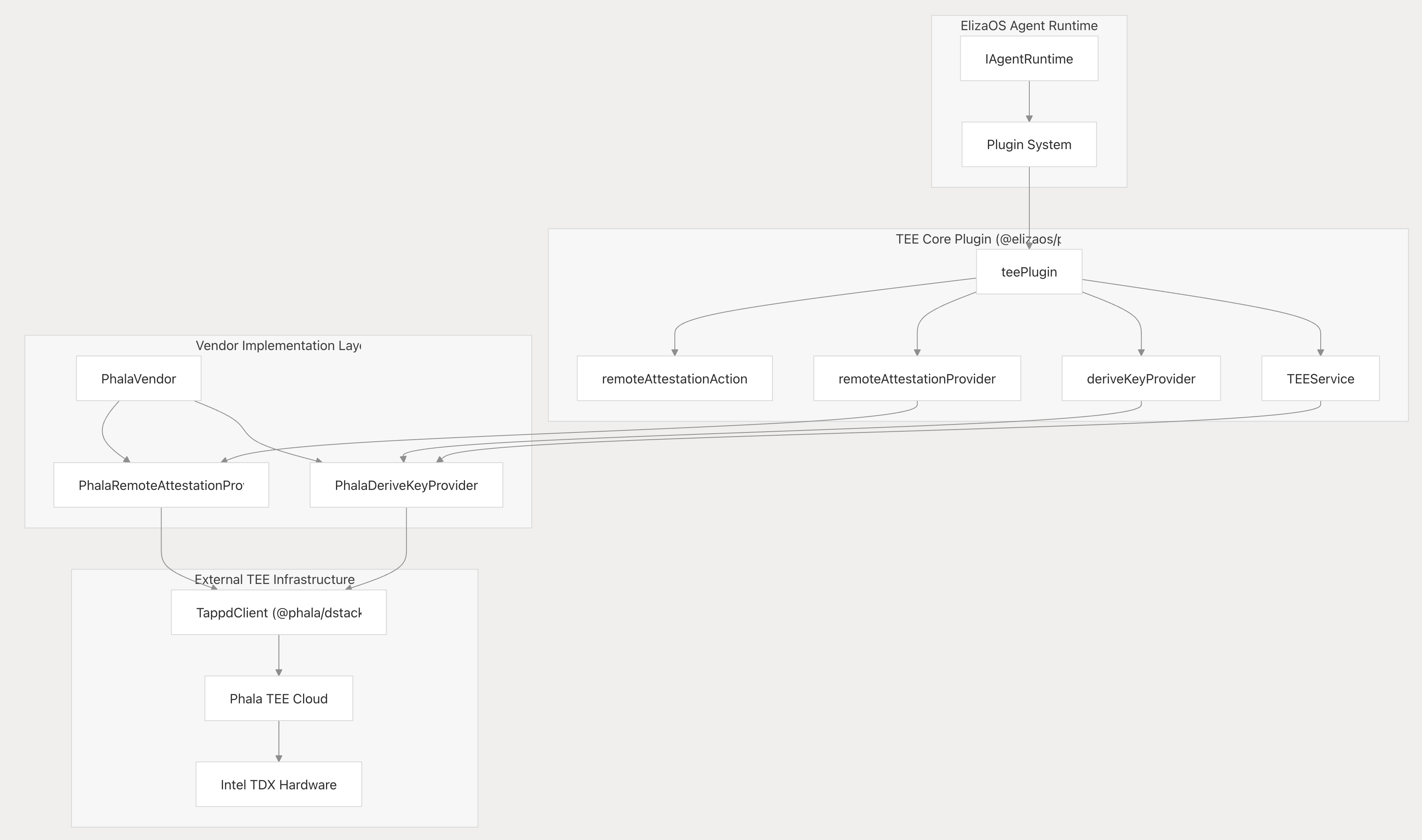

To make this vision practical, Eliza Labs teamed up with Phala to integrate TEE security into the agent workflows.

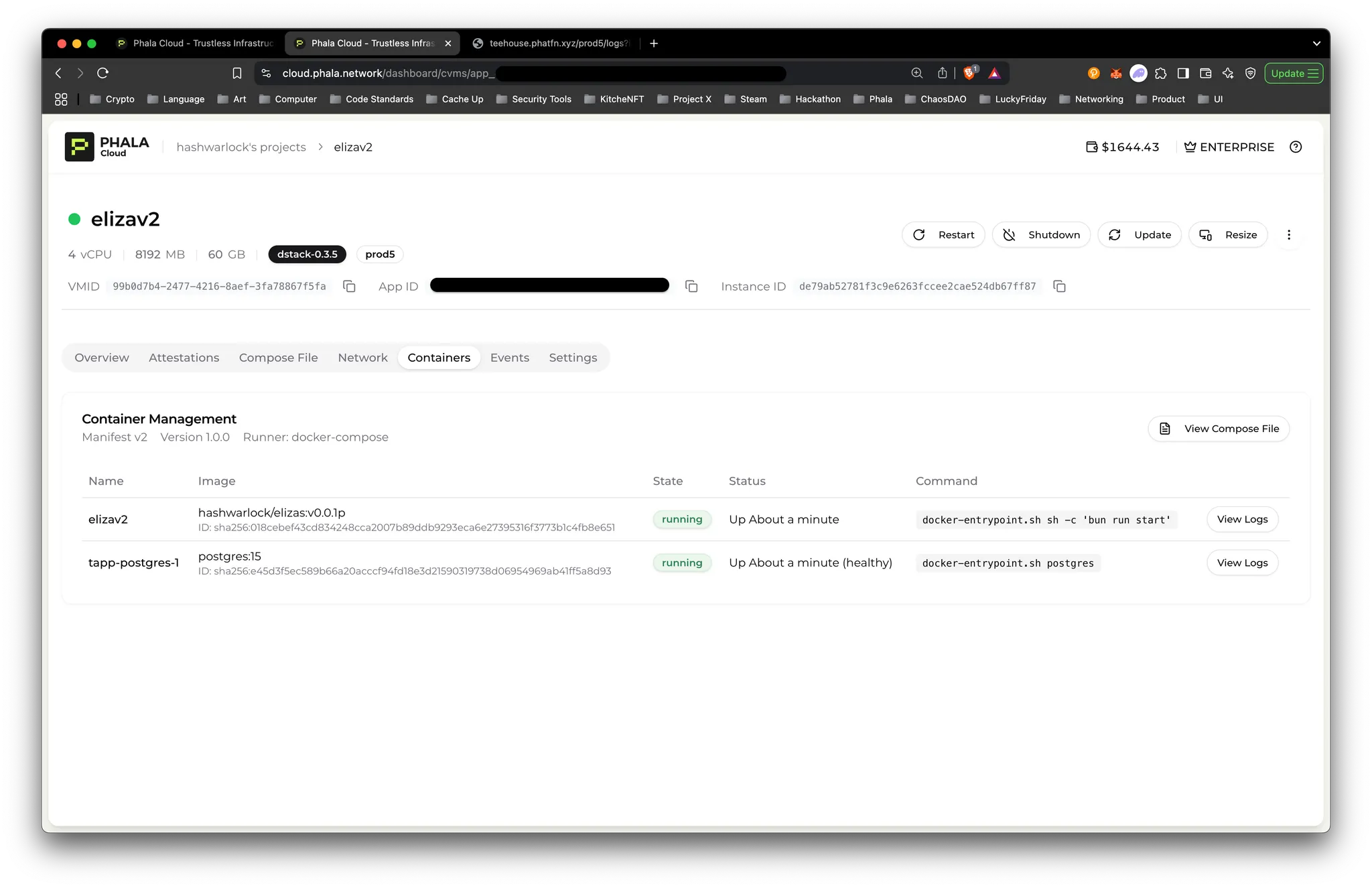

In March 2025, Eliza V2 Beta introduced agent swarms that run on Phala’s TEE-enabled cloud. Phala Cloud became the first platform where developers could spin up Eliza agents with built-in enclave protection – meaning all the sensitive operations (API key usage, signing blockchain transactions, private communications between agents, etc.) are handled inside TEEs by default.

This partnership unlocks concrete use cases that would be risky otherwise. For example, an Eliza-based trading swarm could be delegated an exchange API key or DeFi wallet access; thanks to TEE isolation, it can trade on the user’s behalf without ever exposing those credentials or allowing an outside party to hijack them. Another use case is social media or communication agents – an Eliza marketing bot can post to a linked Twitter account without revealing the account credentials to the world.

In Eliza’s case, TEE brings an important “security edge” to multi-agent systems. By sandboxing each agent (or sensitive parts of agents) in hardware enclaves, even a large swarm can operate with a high degree of trust. The community and the agent owners know that each agent in the swarm is executing code that hasn’t been tampered with, and any blockchain actions the swarm performs (like on-chain trades by an investor agent) are coming from attested code. Andreessen Horowitz recognized that trust is key to moving agents from concept to real-world value, and TEEs are a cornerstone of that strategy. By supporting TEE deployment, ElizaOS effectively removes the need for users to trust the infrastructure or the developers – they only need to trust the open-source code of the agent, which the TEE will faithfully enforce. This paves the way for Web3-native AI agent swarms that are both powerful and trustworthy.

https://phala.network/posts/launch-eliza-v2-beta-agent-swarms-with-tee-security-on-phala-cloud

Newton – Verifiable DeFi Automation Platform

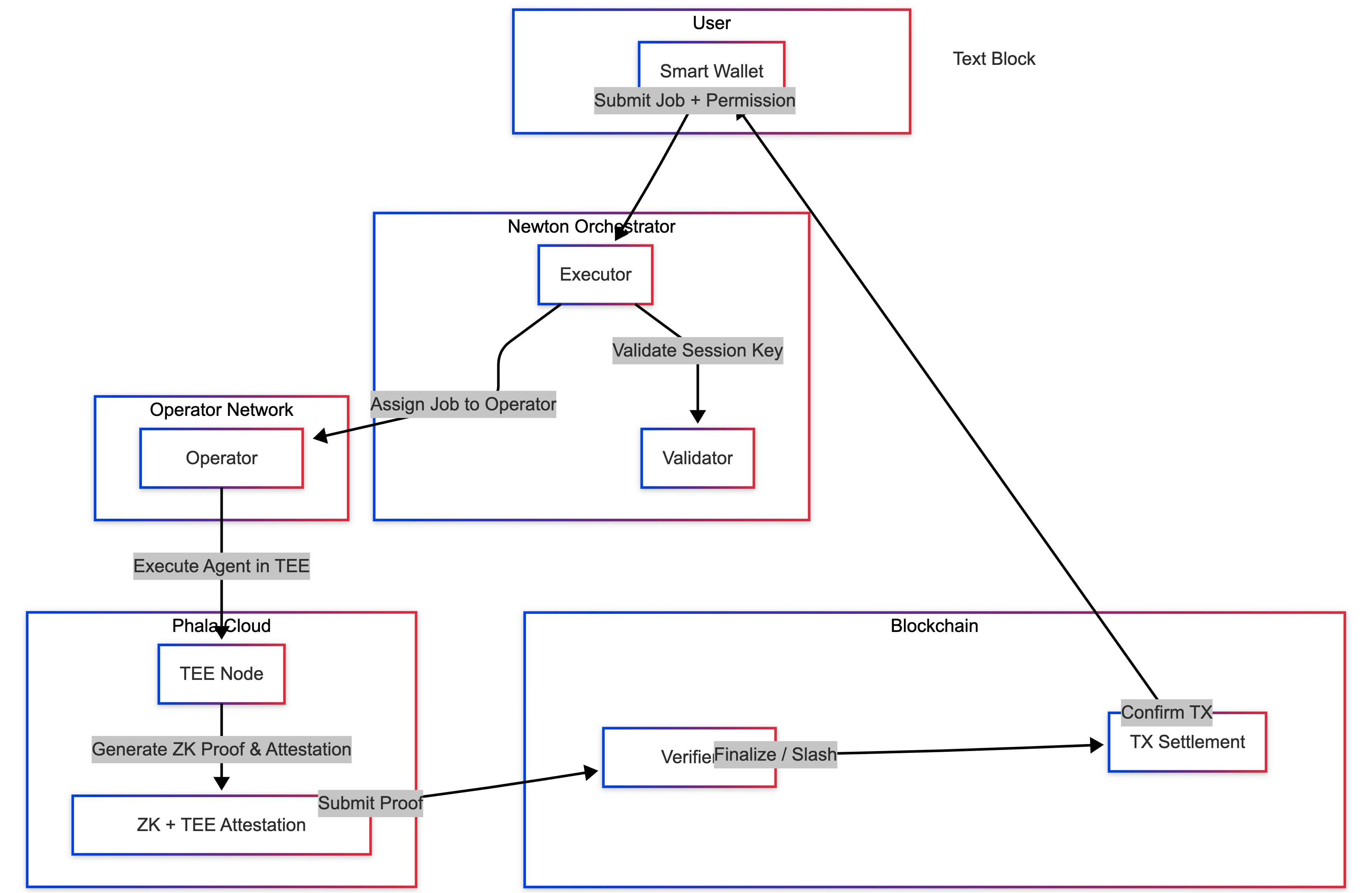

Newton (by Magic Labs) is a platform for agentic automation in crypto, aimed at simplifying DeFi tasks for users. Launched on Base in 2025, Newton allows users to delegate on-chain tasks – like recurring token purchases (DCA), yield farming optimizations, or DAO voting – to autonomous AI agents governed by cryptographic rules. The emphasis is on non-custodial automation: users set their strategy and constraints, and Newton’s agents execute it without the users ever giving up control of their private keys or funds. How is that possible? Newton combines Phala's TEEs with on-chain verification (including zero-knowledge proofs) to ensure every agent action is both authorized and auditable.

Each Newton agent runs inside a TEE on Phala Cloud, which means the agent’s code executes in a sealed environment where its decisions (e.g. to execute a trade) cannot be altered by an outside party or even by Magic Labs themselves. Moreover, before an agent can act, it produces an attestation proving it is running the approved Newton code and abiding by the user-defined constraints. These cryptographic attestations, often paired with deterministic replay and ZK proofs, give Newton its name as a platform for “verifiable AI agents.” In practice, when Newton automates a recurring buy on a DEX or reallocates yield between lending protocols, all participants can verify that the actions were generated by the agent under the correct conditions – not by a malicious script or a hacked server. As the team puts it, “users don’t have to trust Newton or Phala – they trust the cryptographic guarantees of the TEE and the onchain verifiability of the results.”

Importantly, TEE-based trust has enabled Newton to offer features that were previously “impossible to trust in a permissionless setting”. Users can allow an AI to manage funds autonomously within strict guardrails – for example, “optimize my yield within these protocols” – and have confidence the AI won’t step out of bounds. The Newton agent will always operate transparently and log cryptographic evidence of every decision. This is a major UX win: it combines the convenience of automation with the reassurance of self-custody and oversight. Indeed, Newton’s launch was heralded as a step towards “autonomous, trustless finance” where users can enjoy DeFi gains without constantly babysitting their bots. The partnership of Newton and Phala Cloud demonstrates how hardware-backed trust turns theoretical crypto automation into a practical, safe reality. Already live with its Genesis release, Newton showcases agents doing real trades and yield strategies on Base, all under the protective umbrella of TEEs and blockchain proofs.

https://phala.network/posts/Newton-x-Phala-Powering-Verifiable-AI-Agents-for-Autonomous-Finance

Kosher Capital – Autonomous Fund “Rabbi” with TEE

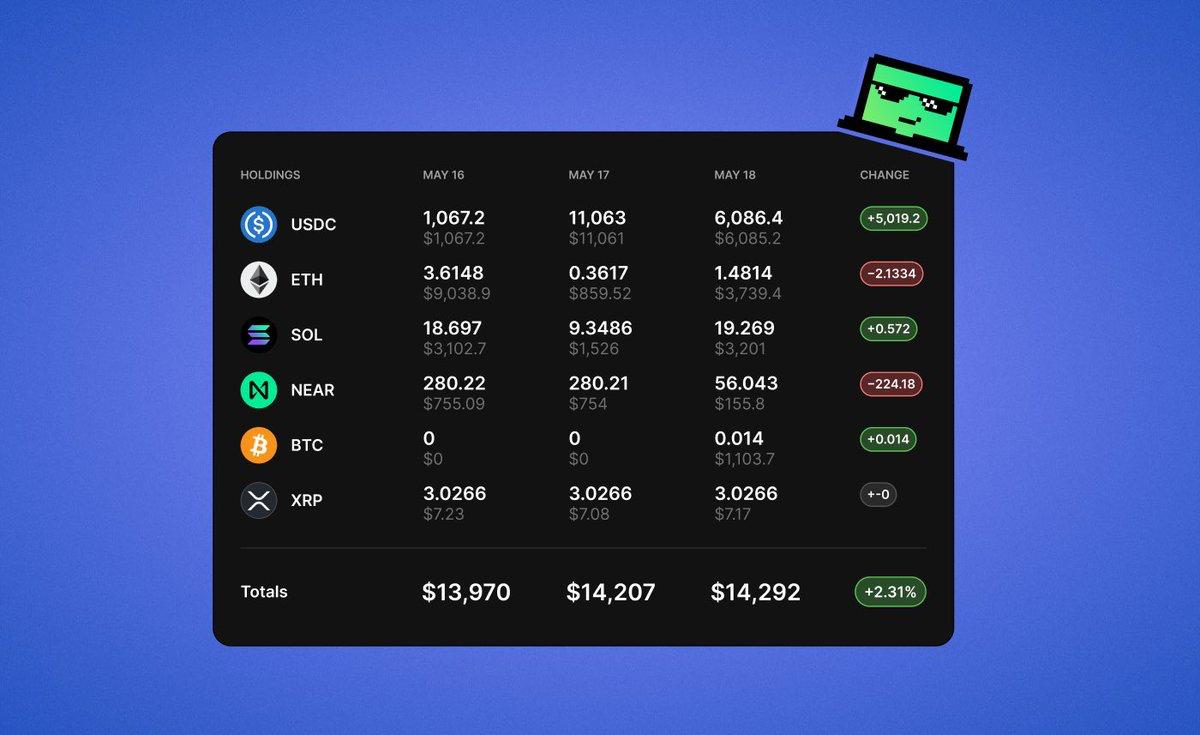

Kosher Capital is pioneering an AI fund manager launchpad – a platform for launching full-fledged autonomous hedge funds run by AI agents. Their flagship agent, Rabbi Schlomo, is essentially an AI hedge fund manager operating on the Base blockchain. Remarkably, Rabbi Schlomo raised an initial pool of 1 BTC and now executes trades, manages a portfolio, and allocates capital without any human intervention. This is not a closed demo; it’s a live fund where the AI has real custody of assets and is making real trades autonomously. Such an accomplishment is only possible because of Phala’s TEE-secured runtime underpinning the agent. Kosher Capital selected Phala as its exclusive infrastructure provider, meaning every Fund Manager Agent launched via their platform runs on Phala’s confidential compute cloud.

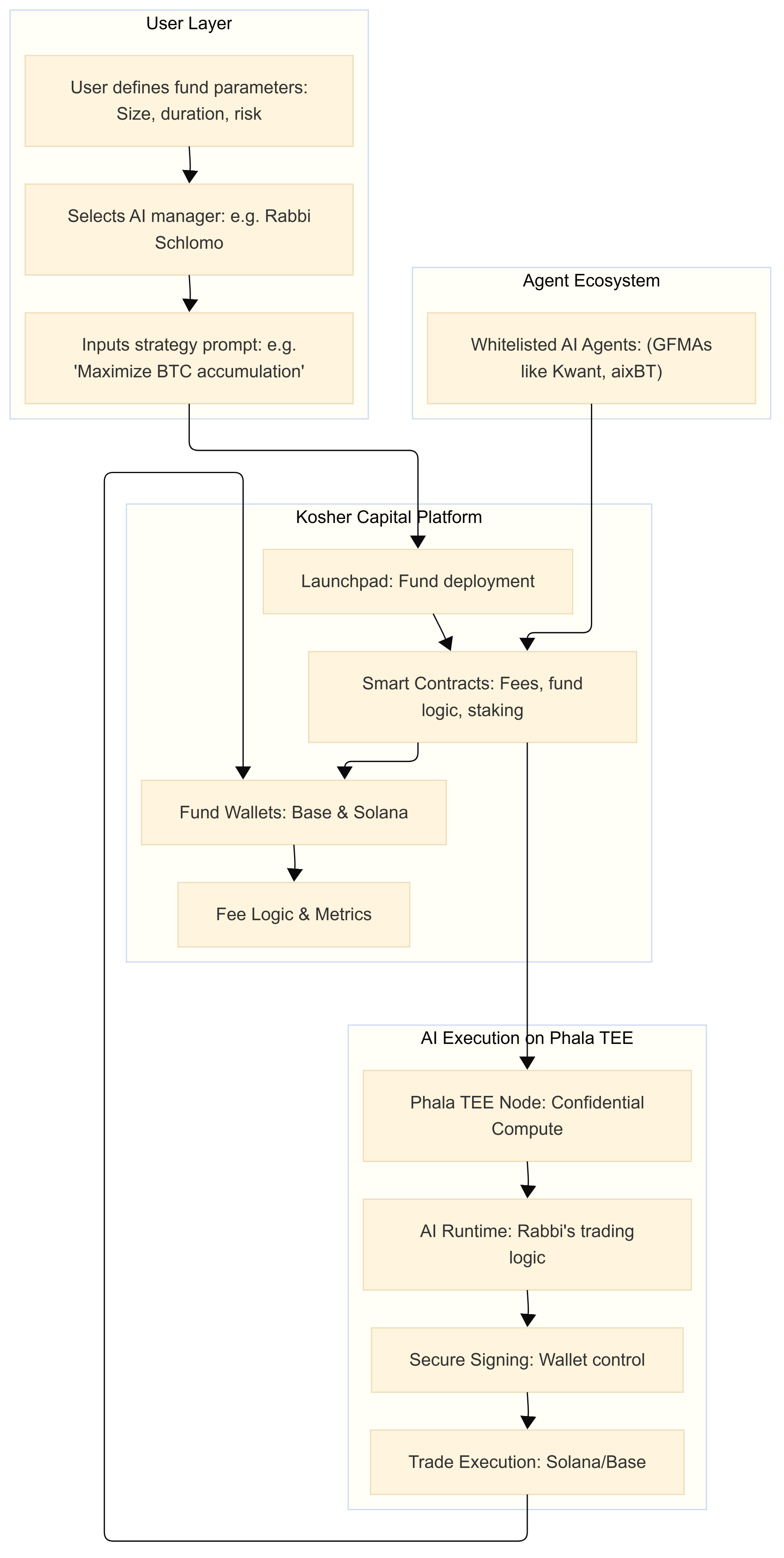

The diagram above shows the architecture of Kosher Capital’s AI Fund Launchpad (Rabbi Schlomo example). The user defines fund parameters and strategy prompts; Kosher’s platform deploys a fund smart contract and assigns a TEE-hosted AI agent (e.g. Rabbi Schlomo) to manage the fund’s assets. The agent’s logic executes in a Phala secure enclave, which controls the fund’s wallet and executes trades on connected blockchains (Base, Solana) under strict guardrails. This confidential, attested execution ensures the AI fund manager operates autonomously but with provable adherence to the fund’s rules and strategy.

As shown above, Kosher’s launchpad allows anyone to spin up a customized fund with an AI manager, specifying parameters like asset mix, risk level, and strategy in natural language. The heavy lifting – reading market data, deciding trades, rebalancing – is handled by the AI agent (e.g. the “Rabbi”) in a completely human-free manner. Here, TEE technology is the lynchpin that makes this trustless fund management feasible. By running the agent’s trading algorithm in a secure enclave, Kosher ensures: (1) the agent’s strategy and trades remain confidential (no one can copy or front-run the fund’s logic); (2) the fund’s private keys are kept inside the enclave, so not even Kosher’s team could withdraw or steal the assets – the AI alone controls the wallet; and (3) all fund actions are remotely attested, proving to investors that decisions were made by the AI according to the agreed strategy, not by a rogue actor.

These guarantees flip the script on what an “AI hedge fund” means – it’s no longer a black-box bot that investors must blindly trust, but rather a provably autonomous agent with built-in accountability. As Kosher Capital notes, this transforms an agent from just another cloud service into “a sovereign, accountable agent with a financial brain.” Investors can monitor cryptographic proof of performance rather than trusting a human fund manager’s word. And because the infrastructure is non-custodial and permissionless, Kosher’s platform can let anyone launch an AI-managed fund without special oversight – the security comes from code and hardware, not from gatekeepers. This demonstrates the future of asset management: AI agents running entire funds on-chain, where people invest based on transparent rules and verifiable execution. With Rabbi Schlomo’s success as a template, we can expect an ecosystem of such AI funds to emerge, all “trustlessly executing logic via Phala.”

https://phala.network/posts/Rabbi

Proximity (NEAR) – Multi-Chain Agents with Secure Intents

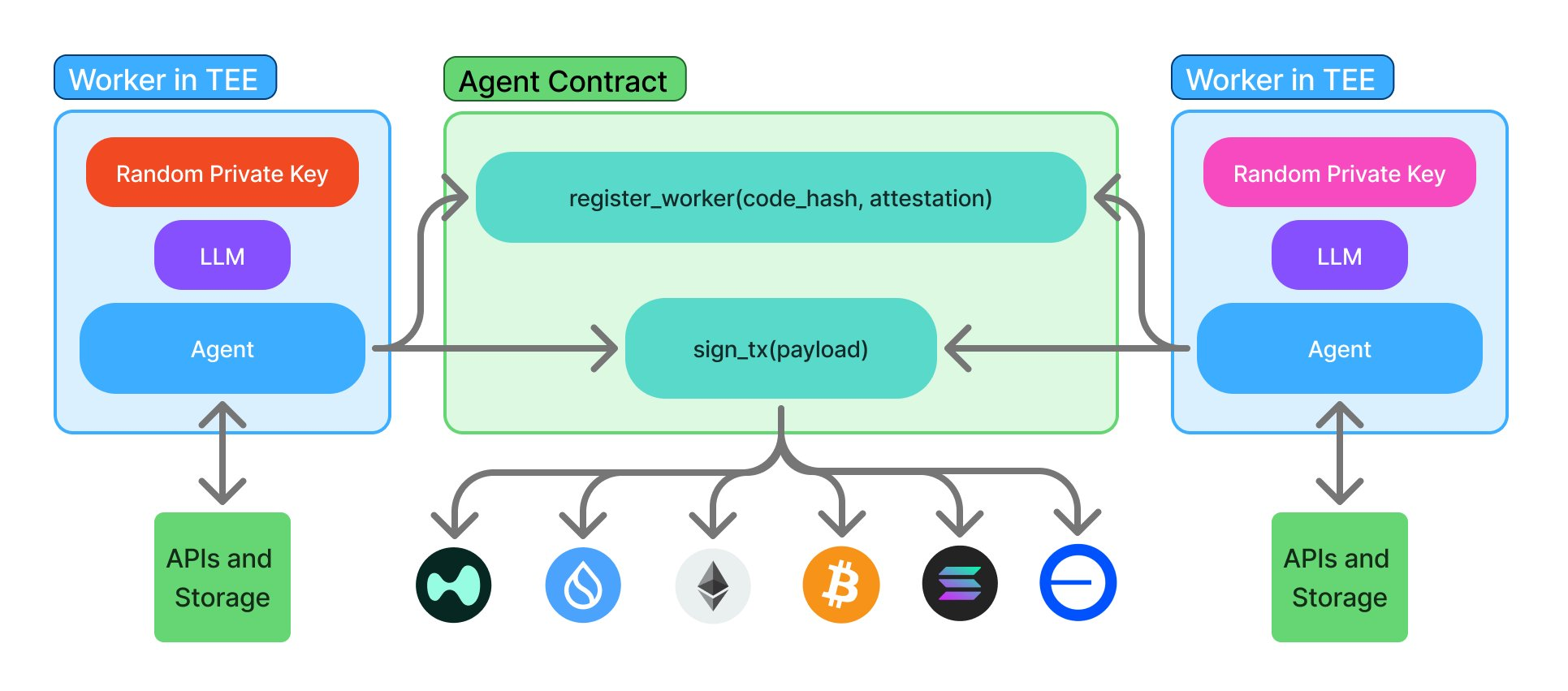

Another forward-thinking effort comes from the NEAR Protocol ecosystem. Proximity Labs, an R&D firm in NEAR, has been working on frameworks for chain-agnostic AI agents that can manage assets and execute “intents” across multiple blockchains. One outcome is Shade Agents, described as the “first truly autonomous AI agents” on NEAR. Shade Agents are essentially on-chain smart contracts paired with off-chain worker AI agents running in TEEs. They are designed to handle tasks like cross-chain trading, lending optimization, and even DAO governance actions in a fully automated yet trust-minimized way. For example, the Mindshare Agent built with the framework could custody assets on multiple chains (BTC, ETH, SOL, etc.), monitor market conditions, and rebalance across DeFi protocols to maximize yield – all without human intervention.

Crucially, NEAR’s approach highlights both the power and the challenges of TEE-based agents. Earlier attempts at agent automation were either centralized/custodial or relied on single TEEs, which introduced new points of failure (e.g. risk of the TEE operator going down or a key getting lost in one enclave). Shade Agents improve on this by using NEAR’s “Chain Signatures” – a decentralized key management system – alongside TEEs. In simple terms, multiple independent worker agents (each in a TEE) can collectively control the same on-chain smart wallet through threshold signatures, eliminating reliance on any one node. The worker agents perform off-chain computations (like running an AI model or fetching data) securely in their enclaves, then on-chain Shade smart contracts verify the execution via attestation reports. All this is transparently verified: each worker provides a remote attestation proving it’s running the expected code.

By combining TEEs with a blockchain-managed multi-sig scheme, the Shade Agent framework enables trustless, autonomous AI-powered funds by leveraging a swarm of TEE-based agents and NEAR’s chain signatures for secure, perpetual key management. Unlike personal agents, Shade Agents allow multiple users to collaborate without trust, as no one knows the private key – making them ideal for powering products, protocols, and services. This approach is still evolving, but it underscores that TEEs are becoming a foundational piece in multi-chain agent infrastructure. Phala Network contributed to NEAR’s early private AI efforts (providing an open-source TEE SDK for NEAR AI developers), and the broader industry is clearly moving toward systems where hardware enclaves combined with blockchains yield fully autonomous, verifiably fair financial agents. For fintech teams and CTOs, Proximity’s work signals that even complex cross-chain AI agents can be built with strong security and decentralization by leveraging TEEs as part of the architecture.
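The idea that several independent workers must agree before the shared wallet acts can be sketched as a k-of-n quorum check. This is deliberately not real threshold cryptography: NEAR’s Chain Signatures produce a single signature without any party holding the full key, whereas this sketch simply counts valid approvals from distinct workers. `WORKER_KEYS`, `THRESHOLD`, and both functions are hypothetical, and HMAC tags stand in for each worker’s attested signature.

```python
import hashlib
import hmac

# Hypothetical per-worker keys; in a real deployment each worker's identity
# would be established by its remote attestation, not a shared secret.
WORKER_KEYS = {"worker-a": b"key-a", "worker-b": b"key-b", "worker-c": b"key-c"}
THRESHOLD = 2  # approvals required out of len(WORKER_KEYS) workers


def approve(worker_id: str, transaction: bytes) -> str:
    """A worker approves a transaction by tagging it with its own key."""
    return hmac.new(WORKER_KEYS[worker_id], transaction, hashlib.sha256).hexdigest()


def quorum_reached(transaction: bytes, approvals: dict) -> bool:
    """Count valid approvals; dict keys guarantee each worker counts once."""
    valid = 0
    for worker_id, tag in approvals.items():
        key = WORKER_KEYS.get(worker_id)
        if key is None:
            continue  # unknown worker, ignore
        expected = hmac.new(key, transaction, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, tag):
            valid += 1
    return valid >= THRESHOLD
```

With a threshold of 2 out of 3, one worker going offline does not halt the agent, and one compromised worker cannot move funds on its own, which is the single-point-of-failure argument made above.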

Crossmint’s Agent Wallet Toolkit – Safe Keys for AI Agents

Finally, it’s worth examining Crossmint’s contribution, which tackles a very specific pain point: How can we let AI agents hold and use crypto funds without introducing custody risk? Crossmint – known for its payment and NFT infrastructure – developed an Agent Wallet toolkit that provides a blueprint for non-custodial AI agent wallets. The core idea is a dual-key smart wallet architecture: one key belongs to the user (owner), and the other key is held by the AI agent within a TEE. The smart contract wallet (e.g. an ERC-4337 account on Ethereum or a similar contract on Solana) is configured such that both keys have certain permissions. For instance, the agent’s key can be allowed to initiate trades or transfers up to a daily limit or within defined strategy parameters, while the owner’s key maintains ultimate control and can revoke the agent if needed.

Crossmint’s open-source Agent Launchpad Starter Kit demonstrates this in practice. The frontend allows users to deploy an AI agent and create a smart wallet for it. In the backend, the agent instance is “deployed into a TEE” where it generates its Agent Key securely and never exposes it. Because the launchpad (or any platform host) never has access to that private key, the platform itself remains non-custodial and safe from the liability of holding user funds. At the same time, the user’s Owner Key (which could be their own wallet or even a biometric Passkey login) ensures they can always override or shut down the agent if something goes wrong. This design smartly leverages TEEs to give the agent a degree of autonomous control – it can sign transactions on the user’s behalf within limits – without ever handing full, unrestricted control to any single party. The agent can only act according to its code (enforced by the TEE) and within the wallet policies set by the user.
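The dual-key policy described above can be modeled in a few lines: the agent key may spend up to a daily limit, and only the owner key can revoke it. This is an illustrative model, not Crossmint’s contract or SDK. `DualKeyWallet` and its fields are hypothetical, callers are identified by plain strings where a real smart wallet would verify signatures on-chain, and the `day` argument stands in for a block timestamp.

```python
from dataclasses import dataclass, field


@dataclass
class DualKeyWallet:
    owner: str                 # identifier of the user's owner key
    agent: str                 # identifier of the TEE-held agent key
    daily_limit: int           # maximum the agent may spend per day
    agent_active: bool = True
    spent_by_day: dict = field(default_factory=dict)

    def agent_transfer(self, caller: str, amount: int, day: str) -> bool:
        """Allow a transfer only for the active agent and within the daily limit."""
        if caller != self.agent or not self.agent_active:
            return False
        if amount <= 0:
            return False
        spent = self.spent_by_day.get(day, 0)
        if spent + amount > self.daily_limit:
            return False
        self.spent_by_day[day] = spent + amount
        return True

    def revoke_agent(self, caller: str) -> bool:
        """Only the owner key can switch the agent off."""
        if caller != self.owner:
            return False
        self.agent_active = False
        return True
```

For example, with `daily_limit=100`, an agent transfer of 60 succeeds, a further 50 on the same day is rejected, and after the owner calls `revoke_agent` every later agent transfer is rejected regardless of amount.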

From a fintech perspective, Crossmint’s approach is appealing because it marries security and compliance. Regulators worry about platforms that custody user funds (for good reason), and users worry about a rogue AI draining their account. Dual-key wallets with TEE-protected agent keys solve both: the platform isn’t a custodian (the user’s key always co-controls the wallet), and the agent cannot go rogue beyond its coded rules (the TEE will simply prevent unauthorized actions). This architecture is likely to become a standard for AI financial apps. We can imagine AI wealth managers, payment agents, or trading bots each with their own smart contract wallets, where the AI’s “brain” lives in a Phala enclave and executes transactions that are instantly transparent to the user and bound by contract logic. Crossmint’s toolkit, compatible with popular agent frameworks like Eliza OS and others, provides a template that new AI+fintech projects can build on to rapidly achieve a secure-by-design agent experience.

Conclusion: Toward a Trustless AI+Fintech Future

The case studies above – spanning automated DeFi platforms, AI-driven hedge funds, multi-agent networks, and wallet tooling – all illustrate a common theme: Trusted Execution Environments are catalyzing the next leap in fintech AI. By underpinning AI agents with hardware-based trust, solutions like Phala Network’s TEE cloud are dissolving the long-standing fears around autonomous financial agents. Instead of asking users and developers to take leaps of faith, these systems offer measurable guarantees: confidentiality of your strategies, integrity of the AI’s decisions, and verifiable proof of proper behavior. Fintech AI agents can thus transition from nifty demos to production-ready services that people will actually use for real money.

From the perspective of a CTO or CEO exploring AI agent technology, the message is clear. If your AI trading bot or robo-advisor is going to hold keys or execute transactions, integrating TEE support isn’t just a nice-to-have – it’s becoming a requirement for trust and competitiveness. As Phala’s partnerships with top projects like ai16z’s Eliza, Magic Labs, and Kosher Capital demonstrate, embracing confidential computing can break the “trust barrier” and unlock new capabilities (and customers) for AI-driven fintech products. Users are far more willing to let AI algorithms monitor portfolios, move assets, or make payments when they know those algorithms are running in secure enclaves with provable compliance to the rules. In turn, this increased usage feeds a virtuous cycle: more real-world data and successes make the AI agents smarter and more reliable, attracting even more users and capital to the ecosystem.

In summary, TEE-powered AI agents represent a convergence of AI and blockchain that finally makes “autonomous finance” credible. We no longer have to choose between control and convenience – with trusted hardware, we can have both. Phala Network and similar TEE providers are laying the groundwork for a future where your financial advisor might just be an AI in an enclave – transparent, incorruptible, and always working in your best interest, by design. The fintech teams building with these tools today are poised to lead the next wave of innovation in Web3 and AI, where trust is baked into every transaction. The age of trustless AI finance is on the horizon, and its foundations are being secured, one enclave at a time.

Sources: The information in this report is based on the latest publications and case studies from Phala Network and its partners, including technical blogs, press releases, and official project documentation, as well as external analyses from the NEAR and Crossmint communities. Each case demonstrates how TEE technology reshapes the trust model for AI agents in finance, turning theoretical security into practical reality. The continued collaboration between AI agent developers and TEE platforms like Phala is accelerating the adoption of secure, autonomous financial agents across the industry.