Introduction

Phala and PublicAI are teaming up to tackle trust, privacy, and transparency issues in AI data collection. This case study examines the integration of Phala’s Trusted Execution Environment (TEE) technology with PublicAI’s decentralized data annotation platform. Together, we create a secure and transparent ecosystem for trustworthy data annotation, privacy-preserving data sharing, and full-lifecycle AI development, all powered by Phala’s TEE capabilities.

The Challenges in AI Training Data Collection and Annotation

The path to high-quality AI models is paved with challenges in data collection and annotation:

- Trust Issues: Data collection and annotation programs are susceptible to tampering and manipulation because they are owned and controlled by centralized entities.

- Privacy Concerns: Users contributing data often face risks of unauthorized access or misuse.

- Transparency and Verifiability: Ensuring that data workflows are transparent and provable remains a difficult task.

These challenges necessitate a robust solution that combines technological innovation with decentralized principles to safeguard trust, privacy, and transparency throughout the AI lifecycle.

Phala: Transforming TEE Infrastructure

Phala is pioneering TEE infrastructure, enabling developers to deploy containerized applications securely in a TEE. Phala Cloud, built on the Dstack SDK, simplifies deployment with a security-first approach.

Key Features of Phala Cloud:

- Secure Deployment: Deploy apps securely in a TEE within minutes using Docker Compose.

- Familiar Tools: Developers can use standard tools like Docker Compose for seamless integration.

- Secrets Management: Sensitive data and secrets are safeguarded within the TEE.

- Verifiable Execution: Phala Attestation Service validates program execution via blockchain or browser clients.

PublicAI: Decentralized Multi-Modal Data Collection and Annotation Platform

PublicAI delivers premium, on-demand AI training data while enabling individuals worldwide to monetize their expertise. Drawing on a decentralized network of more than 800,000 verified contributors, the platform maintains data quality through rigorous skill validation and a stake-slashing mechanism.

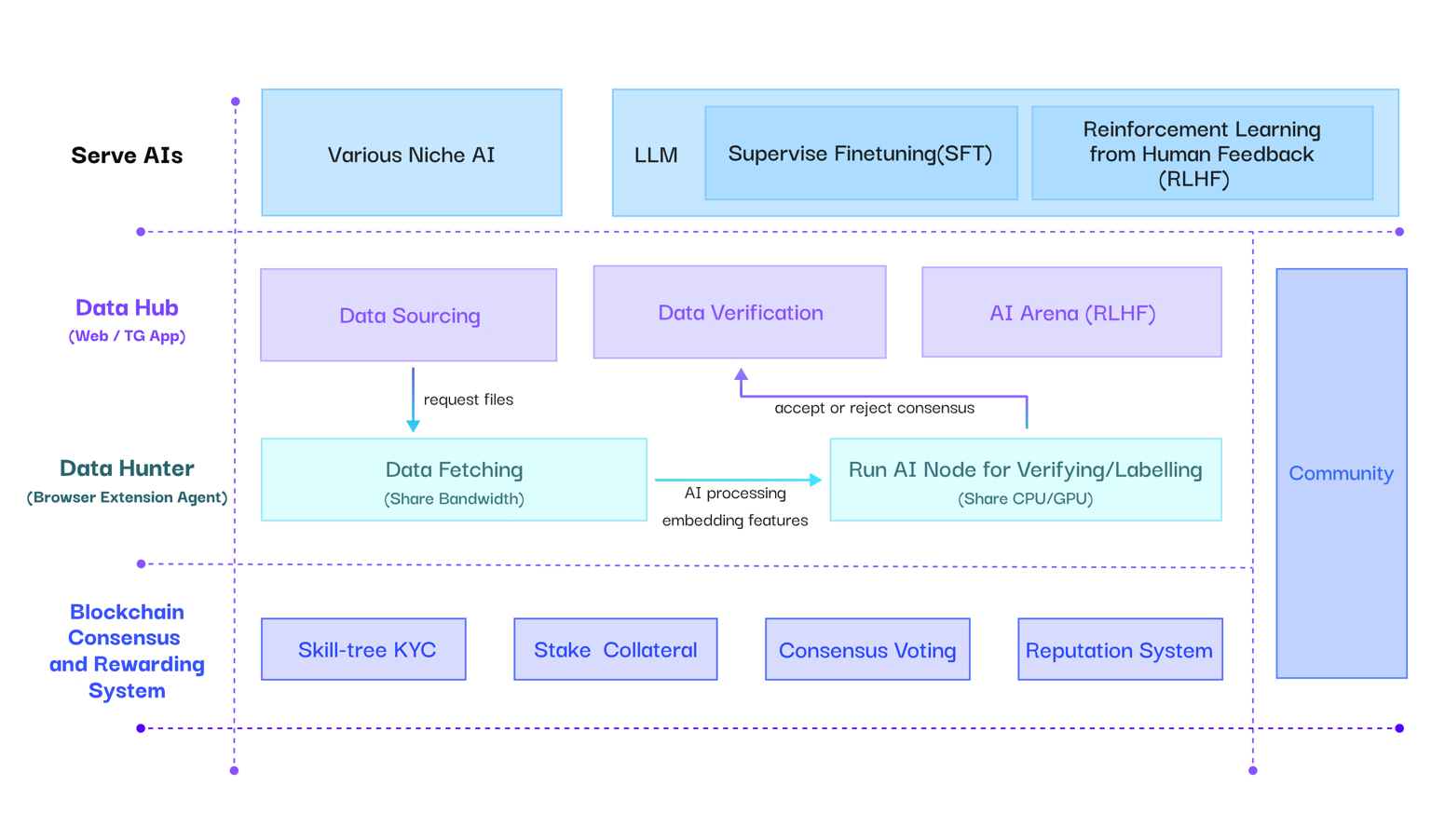

The Three Layers of PublicAI (source: https://docs.publicai.io/publicai-documentation/the-three-layers-of-publicai)

The Workflow of Data Collection:

- Human Uploaders: Contributors upload data to campaigns for rewards.

- Program Checks: Automated checks validate data integrity.

- Human Voters: Community members vote on the results of the program checks.

- On-Chain Incentives: The blockchain layer ensures security, transparency, and fairness in managing data contributions and rewards.
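The workflow above can be sketched as a small Python pipeline. This is a minimal illustration, not PublicAI’s implementation: the names `Submission`, `program_check`, `majority_approves`, and `process` are hypothetical, the automated check (non-empty, size-limited, no duplicates) and the simple-majority vote are assumptions, and the on-chain incentive layer is reduced to a returned balance change, with the stake slashed on rejection.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class Submission:
    uploader: str
    payload: bytes
    stake: int  # tokens put at risk by the uploader (hypothetical unit)
    votes: dict[str, bool] = field(default_factory=dict)  # voter id -> approves?


def program_check(sub: Submission, seen: set[str], max_bytes: int = 1_000_000) -> bool:
    """Assumed automated integrity check: reject empty, oversized, or duplicate payloads."""
    digest = hashlib.sha256(sub.payload).hexdigest()
    if not sub.payload or len(sub.payload) > max_bytes or digest in seen:
        return False
    seen.add(digest)
    return True


def majority_approves(votes: dict[str, bool]) -> bool:
    """Assumed community vote rule: a strict majority of voters must approve."""
    return sum(votes.values()) * 2 > len(votes)


def process(sub: Submission, seen: set[str], reward: int = 10) -> int:
    """Run one submission through the workflow and return the uploader's balance change.

    Stands in for the on-chain layer: accepted data earns the campaign reward,
    rejected data has its stake slashed.
    """
    if program_check(sub, seen) and majority_approves(sub.votes):
        return reward
    return -sub.stake


seen: set[str] = set()
first = Submission("alice", b"cat photo", stake=5, votes={"v1": True, "v2": True, "v3": False})
print(process(first, seen))  # accepted: reward paid
duplicate = Submission("bob", b"cat photo", stake=5, votes={"v1": True})
print(process(duplicate, seen))  # fails the program check: stake slashed
```

In this sketch a duplicate upload fails the program check before the vote counts, so the uploader loses the stake; a real campaign would define its own checks, quorum, and reward amounts.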

The Integration: Building a Trustworthy Data Collection and Annotation Lifecycle

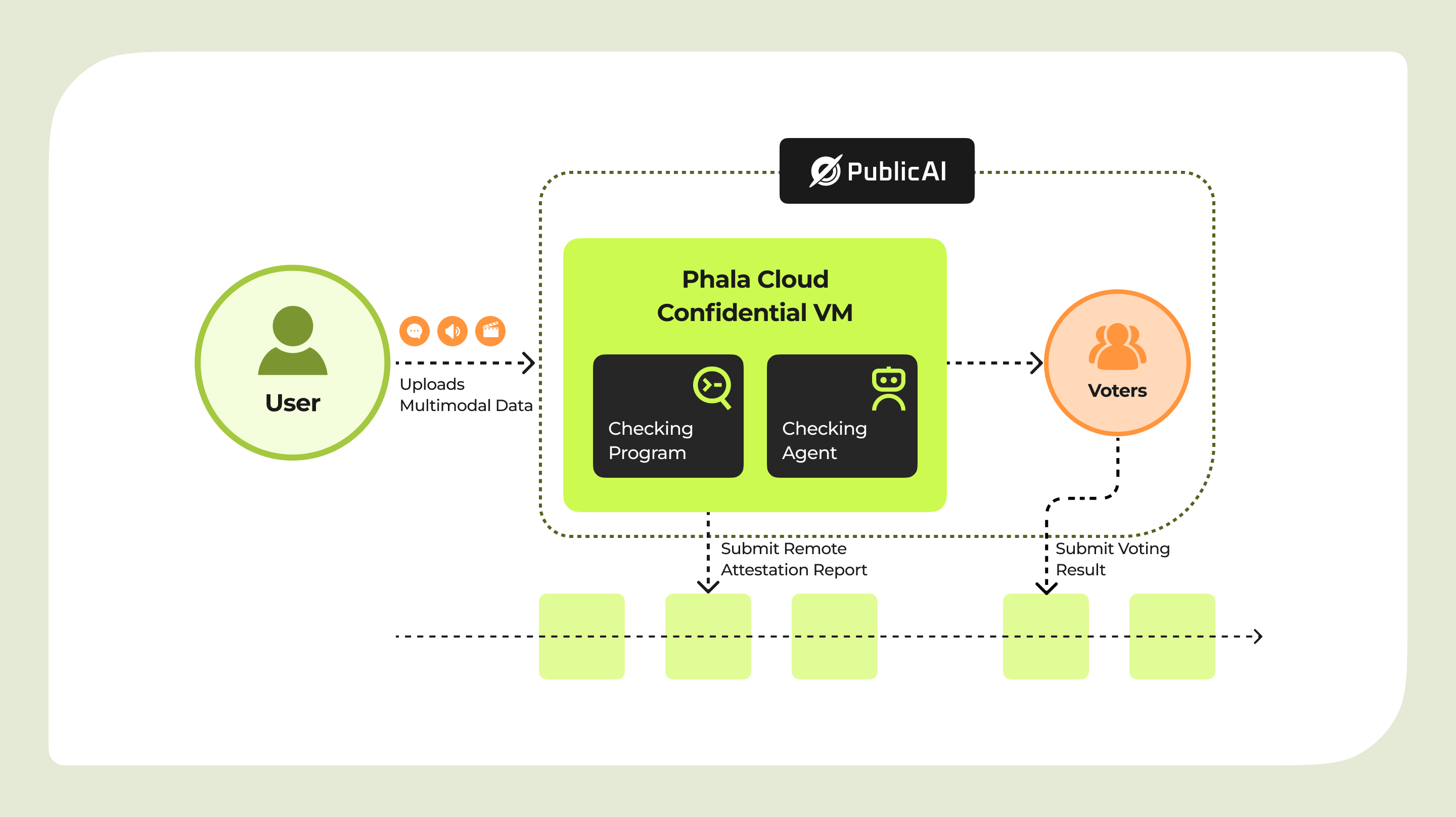

PublicAI’s DataHub platform connects Uploaders and Voters in a collaborative ecosystem. By leveraging TEE infrastructure, it creates a secure and trustworthy data lifecycle:

- Tamper-Proof Program Checks: PublicAI’s checking program runs inside Phala’s TEE, which protects its integrity. Once deployed in the TEE, the program cannot be modified without authorization, not even by Phala or PublicAI. Anyone can verify its integrity through Remote Attestation.

- Privacy-Preserving Data Sharing: All data is encrypted inside Phala Cloud’s Confidential Virtual Machine (CVM). No one can access it except the contributing user and the checking program running inside the TEE, so users can share their data privately under the TEE’s protection.

- Autonomous AI Agents in Data Verification: AI agents perform data checks, but on ordinary infrastructure their execution can be tampered with by node operators. Running the agents in a TEE ensures tamper-proof execution with verifiable attestation.

- Multi-Consensus Verified by Smart Contracts: PublicAI implements a Byzantine Fault Tolerance (BFT) algorithm to verify data quality through decentralized voting. By combining this with TEE Remote Attestation, the checking program can submit a Remote Attestation (RA) report to a smart contract, which verifies it alongside the BFT vote. This adds a second, independent layer of proof, reducing the cost of relying on a single proof system and making the overall consensus more effective.
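To make the combination of Remote Attestation and BFT voting concrete, here is a hedged Python sketch of the acceptance rule a smart contract might apply. Everything here is an assumption for illustration: the names are hypothetical, the "measurement" is simply a hash standing in for the attested identity of the checking program, and an HMAC key (`hw_key`) stands in for the hardware-rooted signature that a real TEE report carries and that a verifier would check against the hardware vendor’s certificate chain. The quorum uses the common "more than two thirds" BFT rule.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    measurement: str  # hash identifying the code running inside the TEE
    report_data: str  # hash of the check result, bound into the report
    signature: bytes  # stand-in for the hardware-rooted signature


def _signed_body(measurement: str, report_data: str) -> bytes:
    return f"{measurement}|{report_data}".encode()


def sign_report(measurement: str, result: bytes, hw_key: bytes) -> AttestationReport:
    """Simulates the TEE producing a report over its measurement and its output."""
    report_data = hashlib.sha256(result).hexdigest()
    sig = hmac.new(hw_key, _signed_body(measurement, report_data), hashlib.sha256).digest()
    return AttestationReport(measurement, report_data, sig)


def verify_report(report: AttestationReport, result: bytes,
                  expected_measurement: str, hw_key: bytes) -> bool:
    """Signature is genuine, the code is the expected checker, and the result matches."""
    body = _signed_body(report.measurement, report.report_data)
    expected_sig = hmac.new(hw_key, body, hashlib.sha256).digest()
    return (
        hmac.compare_digest(report.signature, expected_sig)
        and report.measurement == expected_measurement
        and report.report_data == hashlib.sha256(result).hexdigest()
    )


def bft_quorum(votes: dict[str, bool]) -> bool:
    """Strictly more than two thirds of voters must approve."""
    return sum(votes.values()) * 3 > 2 * len(votes)


def accept(report: AttestationReport, result: bytes, votes: dict[str, bool],
           expected_measurement: str, hw_key: bytes) -> bool:
    """Both proof layers must pass: attested checker output AND a BFT vote."""
    return verify_report(report, result, expected_measurement, hw_key) and bft_quorum(votes)
```

Because acceptance requires both conditions, a result from an unattested or modified checker is rejected regardless of the vote, and an attested result still needs a supermajority of voters to agree.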

The Future: Breaking Open the AI Training Black Box with a Transparent and Verifiable Dataset

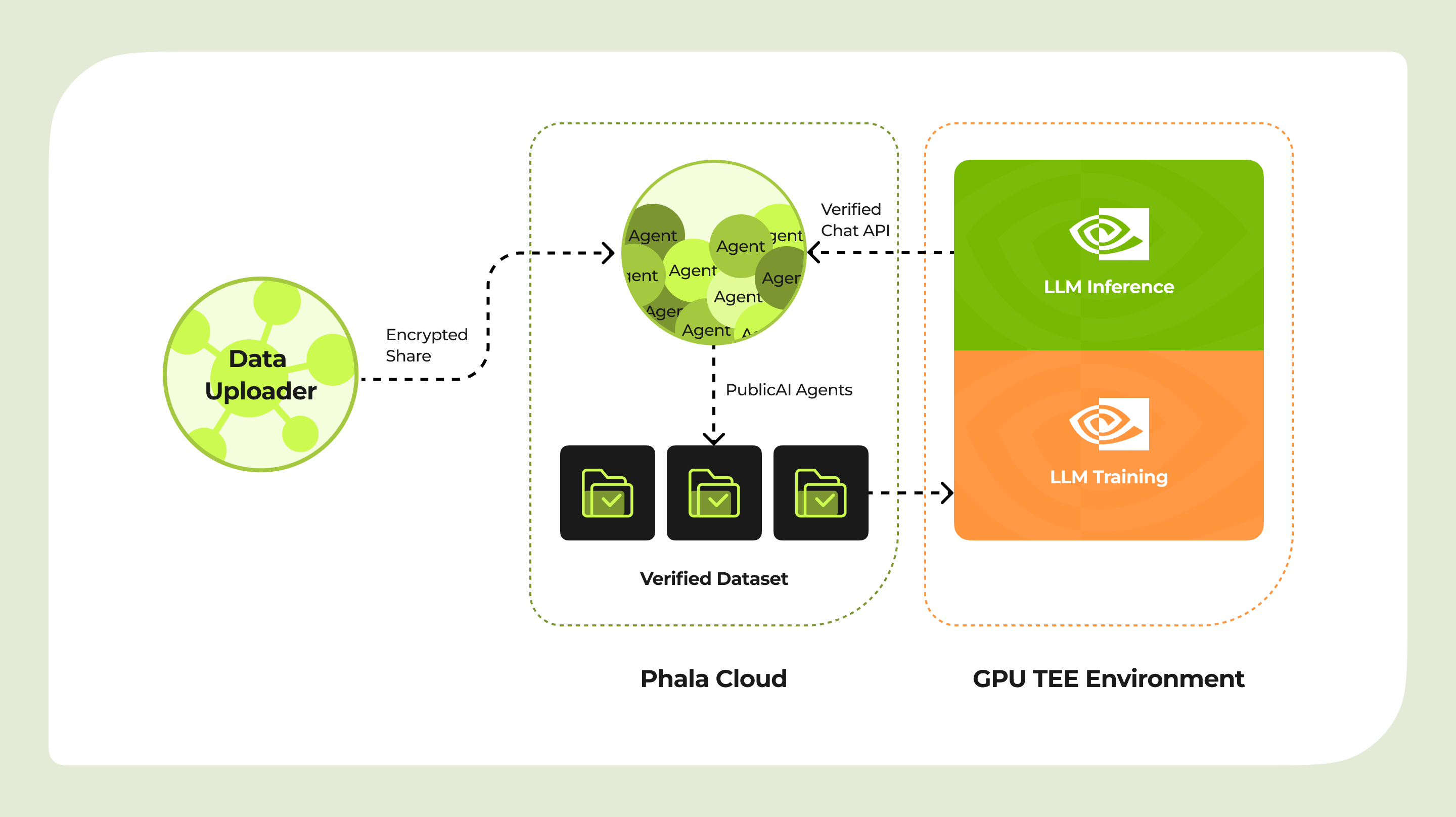

The integration of PublicAI with Phala’s TEE infrastructure establishes a transparent and verifiable lifecycle for AI development, removing the opacity traditionally associated with AI training. Each stage of the lifecycle runs inside a TEE, ensuring integrity and trust.

- Data Verification:

PublicAI’s AI agent, responsible for checking the quality and validity of contributed datasets, operates within Phala’s TEE. This ensures that the data verification process is tamper-proof and trustworthy. The AI agent evaluates the datasets while maintaining the privacy of contributors, making the data layer both secure and reliable.

- AI Model Interaction:

Instead of calling LLM inference services hosted by Web2 companies, the AI agent calls an LLM running in Phala’s GPU TEE. This lets the agent interact with LLMs securely, keeping the inference process transparent and free from external manipulation. GPU TEE supports computationally intensive LLM workloads while providing the same level of integrity as the data checks.

- Training in TEE:

Companies that train LLMs consume the verified datasets produced by PublicAI directly within Phala’s GPU TEE. Running training in this secure, isolated environment preserves the integrity of the training process and ensures that models are developed using accurate, high-quality datasets without the risk of unauthorized tampering or data leakage.
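One way to make this lifecycle auditable is for each TEE stage to publish a record that commits to its attested code and to the data it consumed and produced. The Python sketch below illustrates that idea with a hash chain; the record fields, stage names, and measurement strings are hypothetical, and it assumes each record would in practice be accompanied by an attestation report. It shows only the linking logic: each record commits to the previous one, and each stage must have consumed exactly what the previous stage produced (here, training consumes the dataset that verification produced).

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class StageRecord:
    stage: str        # e.g. "data_verification" or "training" (hypothetical names)
    measurement: str  # attested hash of the code that ran the stage (hypothetical)
    input_hash: str   # hash of what the stage consumed
    output_hash: str  # hash of what the stage produced
    prev: str         # digest of the previous record ("" for the first)

    def digest(self) -> str:
        # Canonical JSON so the same record always hashes the same way.
        return sha256_hex(json.dumps(asdict(self), sort_keys=True).encode())


def record_stage(log: list[StageRecord], stage: str, measurement: str,
                 inp: bytes, out: bytes) -> None:
    """Append a record linked to the previous one by its digest."""
    prev = log[-1].digest() if log else ""
    log.append(StageRecord(stage, measurement, sha256_hex(inp), sha256_hex(out), prev))


def verify_chain(log: list[StageRecord]) -> bool:
    """Records must be linked, and each stage must consume the previous stage's output."""
    prev = ""
    for i, rec in enumerate(log):
        if rec.prev != prev:
            return False
        if i > 0 and rec.input_hash != log[i - 1].output_hash:
            return False
        prev = rec.digest()
    return True


log: list[StageRecord] = []
record_stage(log, "data_verification", "m-verifier", b"raw dataset", b"verified dataset")
record_stage(log, "training", "m-trainer", b"verified dataset", b"model weights")
print(verify_chain(log))
```

A hash chain detects edits only relative to a trusted copy of its latest digest, so in practice that digest would need to be anchored somewhere tamper-resistant, such as a smart contract.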

The Benefits

This lifecycle addresses critical challenges in AI development:

- Transparency: Each stage, from data verification to model training, operates in a verifiable environment, enabling stakeholders to audit the process.

- Integrity: By isolating processes in TEEs, the lifecycle ensures that neither the datasets nor the models can be manipulated post-deployment.

- Privacy: Both the contributors’ data and the AI models are shielded from unauthorized access, preserving confidentiality throughout the lifecycle.

Conclusion

Phala and PublicAI’s integration sets a new standard for secure and trustworthy AI development. By combining TEE infrastructure with decentralized data annotation, we address critical challenges in trust, privacy, and transparency.

Explore Phala and PublicAI to contribute to this transformative journey. Together, we can build a scalable, decentralized AI ecosystem that empowers innovation while safeguarding integrity.

References

- PublicAI DataHub: beta.publicai.io

- PublicAI Documentation: docs.publicai.io

- Phala Cloud: cloud.phala.network

- Phala Documentation: docs.phala.network

- Dstack SDK: github.com/Dstack-TEE/dstack

- Private-ML SDK: github.com/nearai/private-ml-sdk